“Queries are 10 times faster.”

This is often presented as the headline win after a data warehouse project. It shows up in steering committee updates, vendor case studies, and internal demos. Faster dashboards feel tangible and immediately rewarding. They’re easy to measure and they create the sense that something meaningful has improved, even when deeper issues remain.

Speed becomes the default success metric because it is easy to demonstrate and politically safe to celebrate. It does not require resolving ownership, semantics, or accountability. It simply requires better infrastructure.

On the surface, this makes sense. Slow queries are painful. Waiting minutes for a report feels broken. When performance improves, frustration drops and that feels like success. But there is an uncomfortable truth:

Faster queries are usually the least important outcome of Data Warehouse Consulting.

They are visible, but shallow in organizational impact. Easy to celebrate, but rarely decisive for decision quality. Performance improvements often arrive before organizational alignment. When that happens, speed creates confidence in the platform before confidence exists in the numbers.

In many organizations, query speed improves while:

- Teams still argue over which number is correct

- Business users still export data to spreadsheets “to be safe”

- Executives still hesitate to use dashboards in real decisions

- Definitions still change without warning

In those cases, performance gains mask deeper problems instead of solving them. Speed is a local optimization, not a systemic fix. It improves how fast answers arrive, but not whether those answers are trusted, understood, or usable.

A fast query on an untrusted metric is not progress. It’s just a quicker way to create doubt. This article will explain:

- Why query speed is a weak proxy for success in Data Warehouse Consulting

- What focusing on speed tends to hide about organizational health

- And what leaders should measure instead if they care about real outcomes

Measuring success correctly at the start of a data warehouse initiative determines whether progress compounds or stalls. Speed can be a requirement, but it should never be the goal. If faster queries are your primary success metric, there is a strong chance the hardest problems remain untouched, only harder to see.

Why Speed Became the Default Success Metric

Query speed didn’t become the default success metric by accident.

It became dominant because it is convenient for teams, for vendors, and for leadership.

Easy to Measure

Performance is simple to quantify:

- Query time before vs after

- Dashboard load time improvements

- Cost-per-query reductions

These numbers are concrete. They fit neatly into slides and status reports. They don’t require interpretation or debate.

Compared to metrics like trust or adoption, speed feels objective and largely uncontested because it produces numbers that look precise. But precision is not the same as relevance. A metric can be exact and still miss what matters.

Easy to Demonstrate

Speed improvements are instantly visible. You can:

- Run the same query twice

- Show the stopwatch

- Watch dashboards load faster

There’s no ambiguity. No stakeholder alignment required. No business context needed. That makes speed an attractive early win, especially in environments where progress must be shown quickly.

Easy to Communicate to Leadership

“Queries are 10× faster” is a message leadership immediately understands. It signals:

- Technical competence

- Return on investment

- Modernization

For leadership, speed acts as a proxy signal. It suggests that the problem is being handled competently, even when the underlying sources of confusion remain untouched. It avoids uncomfortable follow-up questions like:

- “Faster for whom?”

- “Which decisions improved?”

- “Do people trust the numbers now?”

Speed creates the appearance of progress without forcing deeper organizational conversations.

Reinforced by Vendors and Consultants

Vendors and consultants naturally emphasize what they can:

- Benchmark

- Optimize

- Demo

- Attribute directly to their work

Query speed fits perfectly. Trust, ownership, and organizational clarity do not. So success stories skew toward performance, even when performance was not the real blocker.

Why Speed Feels Safer Than Harder Questions

Focusing on speed lets organizations avoid harder, riskier topics:

- Who owns the metrics?

- Which definitions are “official”?

- Why do teams still disagree?

- Why is adoption uneven?

Those questions create friction. Speed does not. Speed is a low-conflict metric by design; trust and ownership are not. By optimizing for low conflict, organizations often delay the very conversations that would unlock real value. Speed buys temporary comfort at the cost of long-term clarity.

That is why speed becomes the headline, while deeper problems remain unmeasured and unresolved.

Speed only matters if the answer is trusted and acted on. Speed only compounds value once an organization is willing to commit to the answer. Without commitment, performance improvements simply accelerate hesitation.

When teams do not trust the numbers, faster queries become irrelevant. A dashboard that loads in one second instead of ten changes little if the first reaction is:

“Let me double-check this.”

Faster Access to Disputed Data Is Not Progress

In many organizations, performance improves while behavior stays the same. When behavior does not change, performance gains indicate technical progress without organizational absorption.

You still see:

- Finance exporting data to spreadsheets for manual reconciliation

- Product teams ignoring dashboards in favor of their own metrics

- Executives asking for numbers in meetings but hesitating to use them

The data arrives faster, but it still does not settle anything. Speed accelerates delivery, not confidence.

In disputed environments, speed scales disagreement faster than alignment, because unresolved ambiguity is delivered more efficiently to more people. If the underlying data is disputed, faster queries simply reduce the time it takes for disagreement to surface.

Why Broken Decisions Persist Despite Better Performance

Decisions break down when:

- Definitions are ambiguous

- Ownership is unclear

- Changes appear without explanation

- No one can confidently say, “This is the number we stand behind.”

None of these are performance problems. They are organizational problems. Query speed optimizes how quickly data is returned to the user. Decision quality depends on whether people believe it.

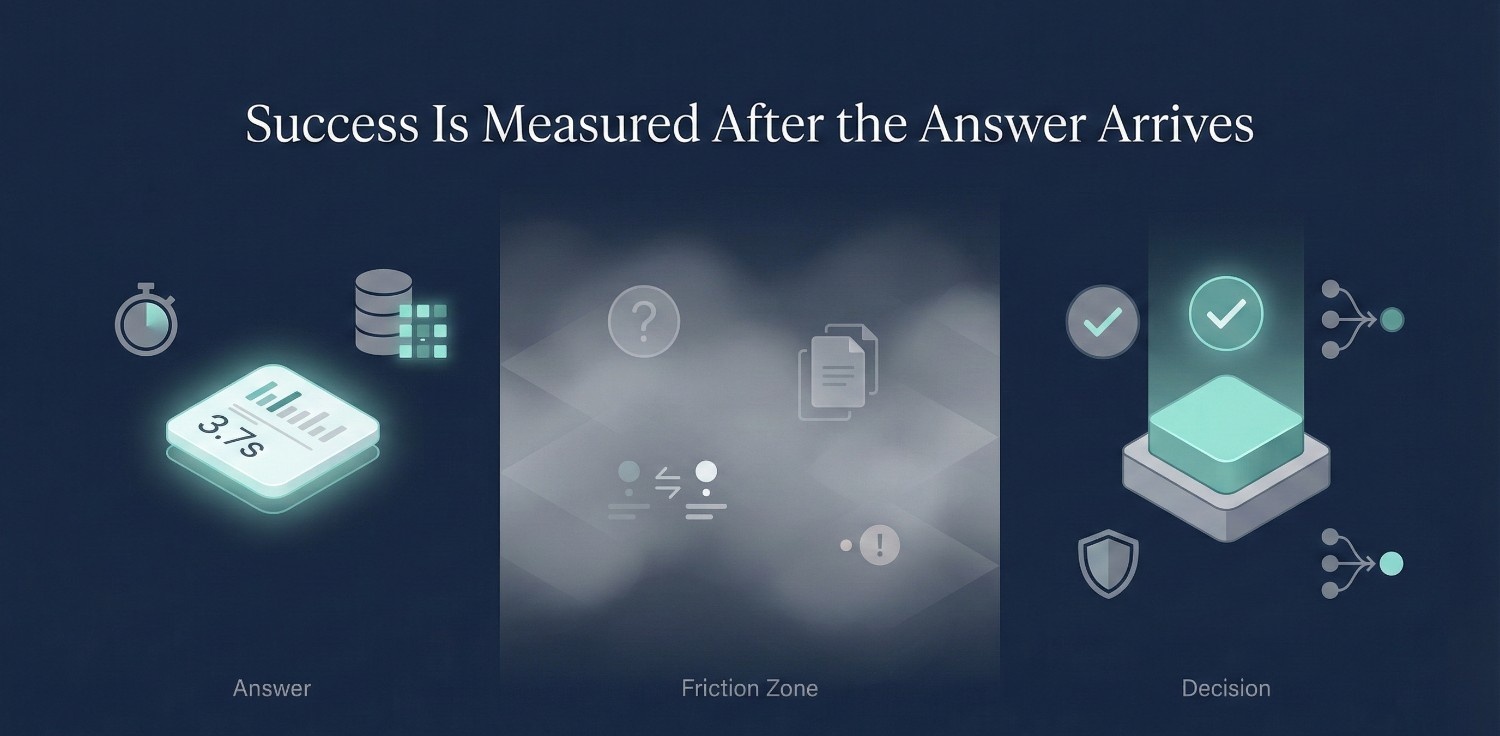

Decision Latency Is the Metric That Actually Matters

The more meaningful question is not:

“How fast can we run this query?”

It’s:

“How long does it take to confidently make a decision using this data?”

Decision latency includes:

- Time spent reconciling numbers

- Time lost debating definitions

- Follow-up meetings to “validate” outputs

- Delays caused by uncertainty or lack of ownership

A system with slightly slower queries but stable, trusted metrics often outperforms a fast system that no one believes.
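To make that comparison concrete, here is a minimal sketch that treats decision latency as query time plus the human delays listed above. The class, its field names, and every number are hypothetical, chosen only to illustrate the arithmetic rather than taken from a real engagement.

```python
from dataclasses import dataclass


@dataclass
class DecisionCycle:
    """One business question, all durations in minutes (hypothetical values)."""
    query_minutes: int            # waiting for queries to return
    reconciling_minutes: int      # reconciling numbers across reports
    debating_minutes: int         # debating definitions
    validation_minutes: int       # follow-up meetings to "validate" outputs
    ownership_delay_minutes: int  # delays from uncertainty or missing ownership

    def decision_latency(self) -> int:
        """Total time from asking the question to acting on the answer."""
        return (
            self.query_minutes
            + self.reconciling_minutes
            + self.debating_minutes
            + self.validation_minutes
            + self.ownership_delay_minutes
        )


# Illustrative only: a fast warehouse whose numbers are disputed...
fast_but_disputed = DecisionCycle(1, 120, 90, 60, 240)
# ...versus a slower warehouse with stable, owned definitions.
slow_but_trusted = DecisionCycle(5, 10, 0, 0, 0)

print(fast_but_disputed.decision_latency())  # 511
print(slow_but_trusted.decision_latency())   # 15
```

In this made-up case the query is five times slower in the trusted system, yet the decision arrives far sooner, because query time is the smallest term in the sum.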

The Hidden Cost of Optimizing the Wrong Thing

When speed is treated as the primary success metric:

- Teams optimize queries instead of clarity

- Performance gains mask unresolved disputes

- Leadership assumes progress that hasn’t actually occurred

This creates a dangerous illusion: things look better while decisions remain broken. Faster queries feel like improvement, but until decision latency drops, the organization has not actually moved faster at all.

The organization feels faster because activity increases, but outcomes do not improve. Momentum becomes theatrical rather than directional.

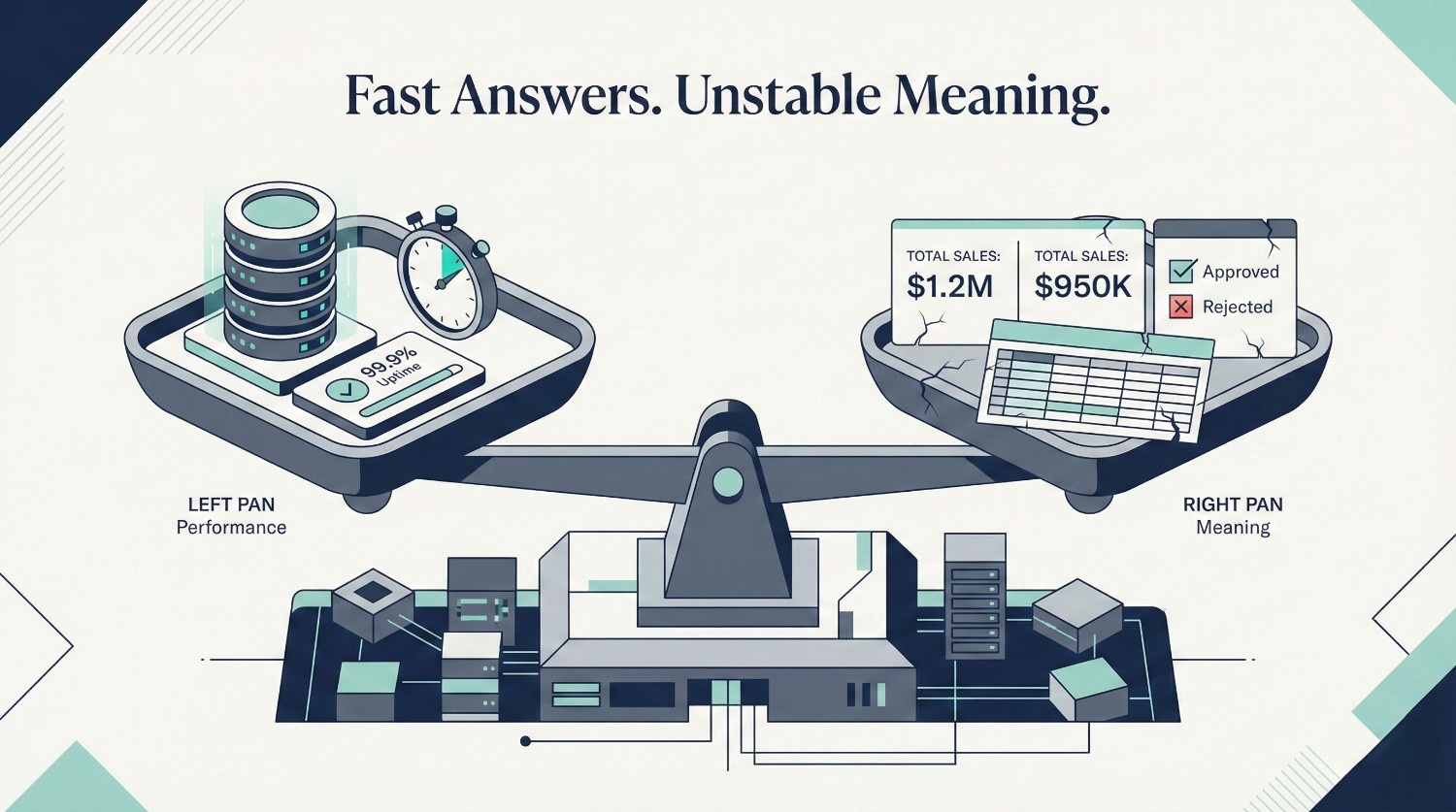

Speed Masks Semantic Problems

Fast queries can create a dangerous illusion: that the system is healthy. In reality, performance improvements often hide semantic problems instead of resolving them.

Queries Run Fast, but Definitions Are Wrong

A warehouse can return results instantly while still answering the wrong question. Common signs include:

- Metrics that look precise but don’t match how the business actually operates

- Filters and joins that silently change meaning

- Logic that is technically consistent but semantically misaligned

Because the query is fast, the result feels authoritative, even when it is not. Speed gives incorrect definitions a sense of legitimacy.

Inconsistent Metrics Across Teams

When semantics are unclear, performance makes the problem worse. Different teams:

- Use the same metric name with different logic

- Apply slightly different filters

- Interpret flags and statuses differently

All of this happens on top of fast, reliable infrastructure. Modern infrastructure does not resolve disagreement. It amplifies whatever assumptions already exist, whether they are aligned or contradictory.

The result is not slow confusion; it is high-speed disagreement. Two dashboards load instantly.

They just don’t agree.

“Correct” Data Producing Contradictory Answers

This is the most corrosive failure mode. From a technical standpoint:

- The data is fresh

- The pipelines are stable

- The queries are optimized

Yet stakeholders ask:

- “How can both of these be right?”

- “Why does this report contradict the other one?”

- “Which one should we trust?”

When systems are fast, these contradictions surface more often, in more meetings, and in higher-stakes contexts, and each conflicting number arrives looking authoritative. Speed amplifies ambiguity, eroding trust faster rather than revealing truth.

This is where many Data Warehouse Consulting engagements quietly fail.

They:

- Optimize performance

- Modernize infrastructure

- Improve reliability

But avoid:

- Defining metric meaning

- Assigning semantic ownership

- Freezing definitions before scaling

When semantics are ignored, performance gains do not compound. They decay. Business users stop trusting results. Teams revert to parallel logic. Speed becomes irrelevant.

The system appears stable because nothing breaks technically. What decays instead is confidence, alignment, and willingness to rely on the answers.

The Core Lesson

Performance optimizes execution. Semantics determine meaning.

If definitions are unstable, faster queries simply help the organization reach contradictory conclusions more quickly. Until meaning is explicit and owned, speed is not a success metric. It is a distraction.

Performance Without Adoption Is Vanity

A dashboard that loads instantly but isn’t used has zero business value. Yet this is one of the most common outcomes of Data Warehouse Consulting engagements that optimize for performance first and adoption last.

Dashboards Load Instantly, No One Uses Them

From a technical perspective, everything looks successful:

- Queries return in milliseconds

- Dashboards feel responsive

- Infrastructure scales cleanly

But behavior tells a different story.

You still see:

- Executives asking for numbers in slides, not dashboards

- Analysts exporting data “just in case”

- Teams maintaining their own spreadsheets alongside the warehouse

Speed exists. Adoption does not. Adoption is behavioral proof that the system has crossed a trust threshold. Until that happens, performance improvements remain technical achievements without organizational impact.

At that point, performance improvements are cosmetic.

Why the Business Reverts to Spreadsheets

This isn’t resistance to change. It’s self-protection. Business teams revert to spreadsheets when:

- Definitions shift without warning

- Numbers change but explanations don’t

- No one can confidently say which metric is authoritative

- Using the warehouse feels risky in high-stakes conversations

In decision-making contexts, perceived risk outweighs efficiency. People choose tools that minimize personal exposure, even if those tools are objectively inferior.

Spreadsheets offer control, familiarity, and the ability to explain logic line by line. They feel slower, but safer. A fast system that feels unsafe will always lose to a slower one that feels predictable.

Why Adoption Is the Only Performance Metric That Matters

Real performance is not query speed.

It’s whether people:

- Use the data in meetings

- Reference it in decisions

- Trust it without secondary validation

- Stop building parallel systems

Adoption answers the only question that matters:

Does this system actually change behavior?

A warehouse that is slower but trusted accelerates decisions more than a fast one that’s ignored.

The Hard Truth

Performance without adoption is vanity. Vanity metrics improve how a system looks. Value metrics change how an organization behaves. Query speed belongs firmly in the first category unless adoption follows.

It looks impressive in demos and reports, but it does not compound value. Until the business chooses the warehouse as its default source of truth:

- Query speed is irrelevant

- Infrastructure improvements don’t matter

- Consulting outcomes remain fragile

What Faster Queries Actually Signal (and What They Don’t)

Faster queries are not meaningless. They just mean far less than most teams assume. Understanding what performance improvements do and do not signal is critical to interpreting success correctly in Data Warehouse Consulting.

What Faster Queries Do Signal

- Infrastructure modernization

Moving to cloud-native engines, separating storage and compute, and leveraging scalable execution layers genuinely improves performance.

- Better execution engines

Modern query planners, vectorized execution, and improved caching reduce runtime without changing business logic.

- Improved physical design

Partitioning, clustering, materialization, and cost-aware modeling all contribute to faster queries.

What Faster Queries Do Not Signal

Performance gains say nothing about whether the system is actually working for the business. They do not indicate:

- Trust

Speed does not tell you whether users believe the numbers or feel safe using them in decisions. Trust is orthogonal to performance. A fast system can still be distrusted if users do not understand its behavior or feel unsupported when discrepancies arise.

- Clarity

Queries can be fast even when metric definitions are ambiguous or inconsistent.

- Ownership

A fast system can still have no clear owner when numbers are challenged.

- Business impact

Lower query latency does not, by itself, translate into faster decisions, better outcomes, or improved confidence.

A warehouse can be technically excellent and organizationally ineffective at the same time. This gap often widens during successful migrations because technical capability improves faster than organizational readiness.

The Critical Misinterpretation

The mistake is not celebrating faster queries. The mistake is treating them as evidence of success.

Speed measures execution efficiency, not decision effectiveness.

When performance becomes the headline metric, it crowds out harder questions:

- Who owns this metric?

- Why did this number change?

- Which definition do we stand behind?

- Are decisions actually happening faster?

The Proper Role of Speed

Query performance should be treated as:

- A prerequisite, not a goal

- A hygiene factor, not a differentiator

- A capability enabler, not a success indicator

If queries are slow, nothing else matters. But once queries are “fast enough,” continued optimization delivers diminishing returns unless trust, clarity, and adoption improve alongside it.

Beyond a certain threshold, additional performance gains improve benchmarks rather than outcomes, while unresolved semantic and ownership issues continue to tax decision-making.

Speed enables value. It does not create it.

Better Success Metrics for Data Warehouse Consulting

If faster queries aren’t the right success metric, what is?

The answer is not another technical benchmark. It’s whether the organization makes decisions more confidently, more consistently, and with less friction.

Speed reduces waiting, not uncertainty. If people hesitate after the answer arrives, the system has not actually accelerated decision-making. These outcomes are harder to quantify, but they’re the ones that actually matter.

Time to Answer a Business Question

This is not query latency. It’s the elapsed time from:

“We need to know X”

to

“We have an answer we’re willing to act on.”

Decision time is the compound cost of ambiguity, not just query latency. Most delays happen after the data appears, not before. This includes:

- Clarifying definitions

- Resolving discrepancies

- Validating assumptions

- Gaining stakeholder confidence

When Data Warehouse Consulting works, this time shrinks dramatically, even if queries were already fast. Organizations often discover that decision latency barely changes after performance improvements, which reveals that the real bottleneck was never execution speed.
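One way to check where the time goes is to record three timestamps per question and split the elapsed time at the moment the data appears. The sketch below assumes such timestamps are captured somewhere (for example in a ticketing tool); the function name, its parameters, and the dates are hypothetical.

```python
from datetime import datetime


def split_decision_time(asked: datetime, data_ready: datetime, committed: datetime) -> dict:
    """Split time-to-answer into the wait before and after the data appears.

    asked:      when someone said "we need to know X"
    data_ready: when the first query result or dashboard was available
    committed:  when the team had an answer it was willing to act on
    """
    if not (asked <= data_ready <= committed):
        raise ValueError("timestamps must be ordered: asked, data_ready, committed")
    total = committed - asked
    after_data = committed - data_ready
    # Dividing two timedeltas yields a plain float ratio.
    share = after_data / total if total.total_seconds() > 0 else 0.0
    return {
        "total": total,
        "before_data": data_ready - asked,
        "after_data": after_data,
        "share_after_data": share,
    }


result = split_decision_time(
    asked=datetime(2024, 3, 4, 9, 0),
    data_ready=datetime(2024, 3, 4, 9, 30),
    committed=datetime(2024, 3, 6, 9, 0),
)
print(result["before_data"])                 # 0:30:00
print(result["after_data"])                  # 1 day, 23:30:00
print(round(result["share_after_data"], 2))  # 0.99
```

If the share after the data appears stays high once queries are fast, further performance work is unlikely to shorten the decision.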

Reduction in Reconciliation Effort

Reconciliation is pure waste from a decision-making perspective. It produces no new insight and exists solely because trust has not been earned.

Track whether:

- Analysts spend less time cross-checking reports

- Finance stops maintaining parallel logic

- Meetings stop reopening “whose number is right” debates

A successful engagement does not just produce numbers; it eliminates the need to verify them repeatedly. When reconciliation disappears, it is usually because ownership and definitions stabilized, not because reporting became more sophisticated.

Fewer Conflicting Reports

Conflicting reports are a visible symptom of semantic failure. They are not a reporting problem. They are evidence that the organization has not agreed on meaning.

Measure:

- How many “official” versions of key metrics exist

- Whether the same question yields different answers across teams

- How often reports are rebuilt to align definitions

Reduction here signals improved semantic alignment, not just better tooling.
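As a rough illustration of the first measure, the sketch below counts how many distinct definitions exist per metric name across teams. The input format, the metric names, and the SQL-like expressions are all hypothetical, and the text normalization is deliberately crude: it ignores whitespace and case only, so logically equivalent expressions written differently would still be counted as separate versions.

```python
from collections import defaultdict


def normalize(expression: str) -> str:
    """Collapse whitespace and case so cosmetic differences are not counted."""
    return " ".join(expression.lower().split())


def conflicting_metrics(definitions):
    """Return {metric_name: {normalized_expression: [teams]}} for every
    metric name that has more than one distinct definition."""
    by_name = defaultdict(lambda: defaultdict(list))
    for team, name, expression in definitions:
        by_name[name][normalize(expression)].append(team)
    return {
        name: dict(variants)
        for name, variants in by_name.items()
        if len(variants) > 1
    }


# Hypothetical (team, metric name, definition) records.
definitions = [
    ("finance", "active_customers", "COUNT(DISTINCT customer_id) WHERE status = 'paid'"),
    ("product", "active_customers", "COUNT(DISTINCT customer_id) WHERE last_login >= CURRENT_DATE - 30"),
    ("sales", "active_customers", "count(distinct customer_id)  where status = 'paid'"),
    ("finance", "net_revenue", "SUM(amount) - SUM(refunds)"),
    ("sales", "net_revenue", "SUM(amount) - SUM(refunds)"),
]

conflicts = conflicting_metrics(definitions)
for name, variants in conflicts.items():
    print(name, len(variants))  # active_customers 2
```

Tracking this count over time gives a simple trend line: the number of metric names with more than one definition should fall as definitions are agreed and owned.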

Clear Ownership of Metrics

Ownership is measurable in practice, not just in documentation. Ask:

- Can people name the owner of a metric immediately?

- Is there a defined process for approving changes?

- Do disputes resolve quickly instead of looping?

Clear ownership turns disagreement into resolution. Without it, every question becomes a negotiation instead of a decision. When ownership is clear, uncertainty drops even when data isn’t perfect.
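The first two questions can be checked mechanically if metrics are listed in some kind of registry. The sketch below assumes a hypothetical registry where each metric records an owner and a change approver; the class, field names, and sample entries are illustrative, not a reference to any particular catalog tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MetricRecord:
    name: str
    owner: Optional[str]            # person or team accountable for the definition
    change_approver: Optional[str]  # who signs off on definition changes


def ownership_gaps(records: List[MetricRecord]) -> List[str]:
    """Names of metrics missing an owner or a change approver."""
    return [r.name for r in records if not r.owner or not r.change_approver]


def ownership_coverage(records: List[MetricRecord]) -> float:
    """Fraction of metrics that have both an owner and a change approver."""
    if not records:
        return 0.0
    return 1 - len(ownership_gaps(records)) / len(records)


# Hypothetical registry entries.
registry = [
    MetricRecord("net_revenue", "finance", "cfo_office"),
    MetricRecord("active_customers", None, None),
    MetricRecord("churn_rate", "product", None),
    MetricRecord("gross_margin", "finance", "cfo_office"),
]

print(ownership_gaps(registry))      # ['active_customers', 'churn_rate']
print(ownership_coverage(registry))  # 0.5
```

A registry entry does not prove ownership works in practice, so the third question, whether disputes actually resolve quickly, still has to be observed in meetings rather than computed.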

Confidence in Executive Reviews

This is subtle but powerful. Executive confidence is visible even when it is not measured. It shows up in fewer caveats, fewer backup slides, and faster commitments.

Look for:

- Fewer caveats in presentations

- Less defensive explanation of numbers

- Decisions made without follow-up validation requests

Confidence is the compound outcome of clarity, ownership, and trust over time.

Why These Metrics Matter More

These metrics:

- Reflect real behavioral change

- Expose organizational health

- Predict long-term value

These metrics are harder to measure precisely, but impossible to ignore once you start looking. Performance gains without behavioral change, by contrast, are local optimizations. They improve execution efficiency while leaving organizational friction intact.

If Data Warehouse Consulting improves these outcomes, query speed becomes secondary. If it doesn’t, faster queries are simply faster noise.

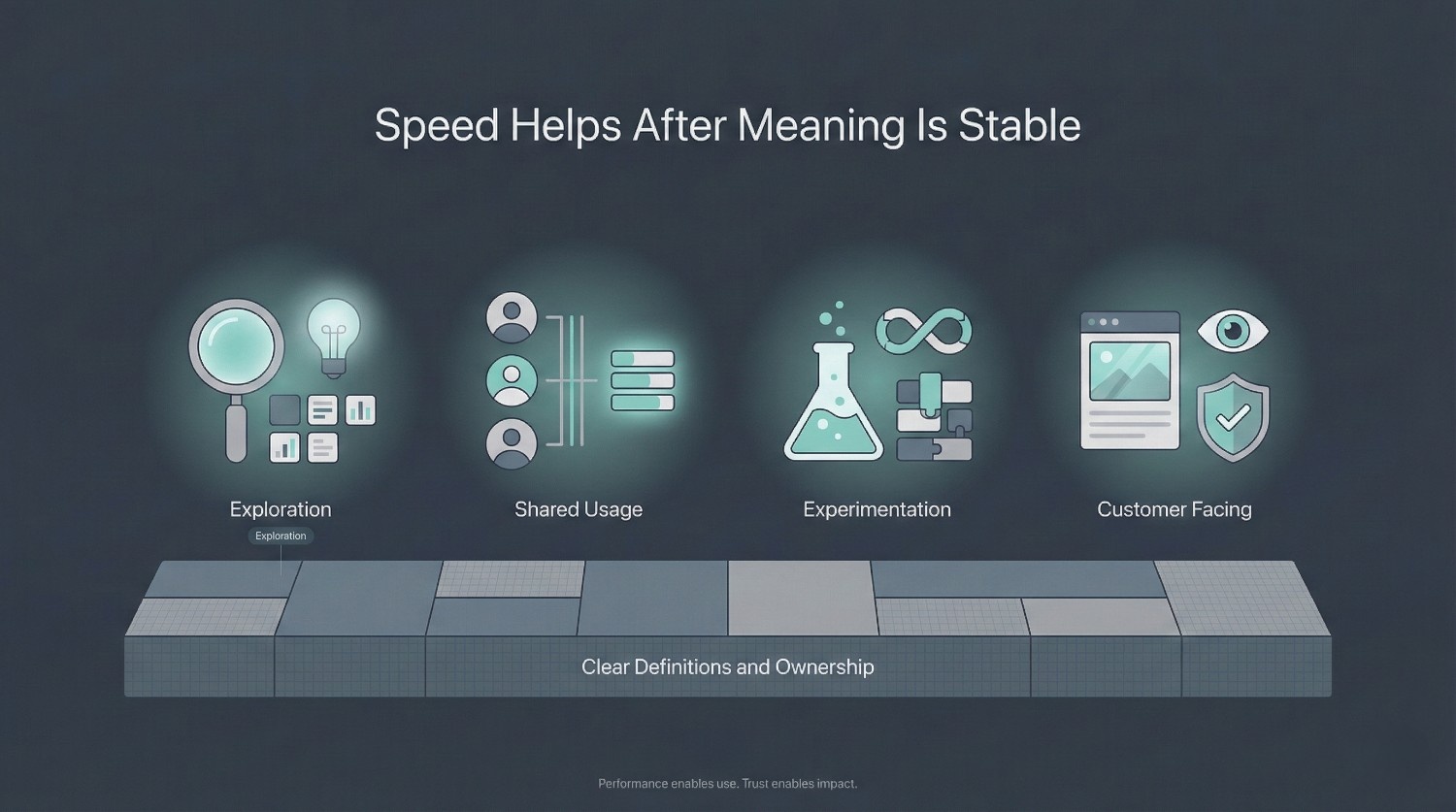

None of this is an argument against performance. Query speed absolutely matters in the right contexts. Speed matters most once clarity already exists. Without stable meaning, performance improvements simply accelerate confusion.

The problem is not caring about speed. It’s treating speed as proof of success rather than as a prerequisite.

Exploratory Analytics

When analysts are:

- Exploring new hypotheses

- Iterating on questions

- Refining logic interactively

slow queries break flow and limit insight. In exploratory work, performance directly affects how deeply teams can reason with data. Faster queries enable better questions, but they don’t decide which answers are trusted.

High-Concurrency Workloads

In environments where:

- Many users query simultaneously

- Dashboards refresh frequently

- Shared resources are under pressure

performance is essential to prevent bottlenecks.

Here, speed is about reliability under load, not just individual query time. Without it, adoption collapses regardless of trust.

Data Science and Experimentation

Model training, feature engineering, and experimentation depend heavily on iteration speed. Slow queries:

- Reduce experiment throughput

- Discourage testing alternatives

- Increase compute waste

In these workflows, performance accelerates learning, but it still doesn’t replace semantic clarity or ownership.

Customer-Facing Analytics

When data powers:

- Embedded dashboards

- External reports

- Customer-visible metrics

latency directly affects user experience. Here, speed is table stakes. Poor performance is visible and unacceptable. Customer-visible metrics also amplify trust failures immediately: when definitions shift or explanations are missing, speed only increases the damage.

The Right Framing

In all of these cases, speed is necessary. But it’s still not sufficient. Fast systems without trust:

- Deliver answers no one uses

- Expose contradictions more quickly

- Increase skepticism rather than confidence

Performance enables usage. Adoption enables impact. The quiet success of Data Warehouse Consulting is fewer questions, not faster answers. When progress is real, the stopwatch becomes irrelevant.

The Takeaway

Faster queries matter when they remove friction from work that already has clarity.

They do not fix:

- Disputed definitions

- Missing ownership

- Organizational misalignment

Treat speed as a foundation, not a finish line.

Final Thoughts

Faster queries are table stakes. They’re necessary to remove friction, but they are not evidence that a data platform is working in the ways that matter most. A warehouse can be fast and still fail.

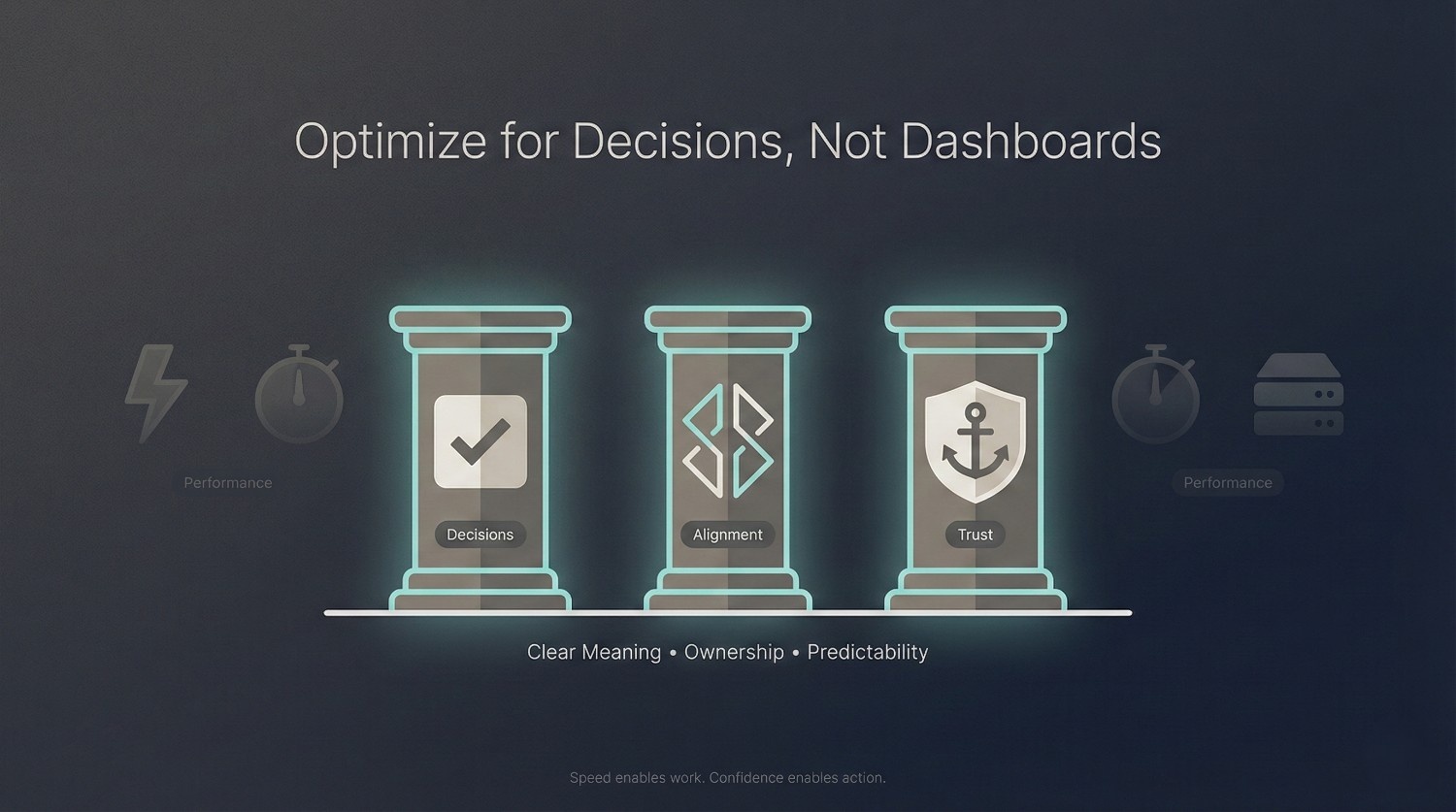

What Data Warehouse Consulting Is Actually Trying to Improve

Data Warehouse Consulting delivers value when it improves how decisions happen, not how quickly dashboards load.

It succeeds when:

- Decisions are faster

Not because queries run quickly, but because fewer meetings are needed to agree on the numbers.

- Disputes are fewer

Because definitions are stable, ownership is clear, and ambiguity is addressed once, not repeatedly.

- Trust is higher

Because changes are predictable, explanations are available, and people feel safe using the data in high-stakes conversations.

These outcomes compound. Query speed does not.

Why Dashboards Are the Wrong Optimization Target

Dashboards are interfaces. Decisions are outcomes. Interfaces can be improved endlessly without changing outcomes if the underlying decision structure remains weak.

Optimizing dashboards without fixing semantics, ownership, and trust is like polishing the speedometer while the engine misfires. The system may look better, but behavior won’t change.

The Final Takeaway

If speed is your primary success metric, you’re measuring the wrong thing. Performance should be treated as:

- A prerequisite

- A hygiene factor

- An enabler of work

Not as proof of success. Optimize for decisions, not dashboards. Organizations that make this shift stop asking how fast data arrives and start asking why decisions feel easier.

When Data Warehouse Consulting is successful, faster queries fade into the background because the real signal of progress is quieter:

- Fewer debates.

- Shorter decision cycles.

- And confidence that doesn’t need a stopwatch to prove itself.

Frequently Asked Questions (FAQ)

Why is query speed a weak success metric for Data Warehouse Consulting?

Because query speed measures execution efficiency, not decision effectiveness. A system can be fast while still producing numbers that are not trusted, understood, or used in real decisions.

Does this mean performance doesn’t matter?

No. Performance is necessary, but it’s not sufficient. Slow queries block usage, but fast queries alone don’t create trust, clarity, or adoption.

What is the difference between query latency and decision latency?

Query latency is how long a query takes to run.

Decision latency is how long it takes an organization to confidently act on the result. Data Warehouse Consulting should reduce decision latency, not just query latency.

Why do business users go back to spreadsheets?

Because spreadsheets feel safer. They offer control, transparency, and explainability when warehouse numbers change without clear ownership or explanation.

How can leaders tell whether a data warehouse engagement is working?

Leaders can look for behavioral signals:

- Fewer reconciliation efforts

- Fewer conflicting reports

- Faster agreement in meetings

- Increased use of dashboards in decision-making

These indicators are more meaningful than performance benchmarks.

Can faster queries make problems worse?

Yes. Speed can amplify semantic problems by surfacing contradictions faster and making incorrect definitions feel authoritative.

When should performance be prioritized?

Performance should be prioritized in exploratory analytics, high-concurrency environments, data science workflows, and customer-facing analytics after semantics and ownership are clear.

Why do vendors and consultants emphasize speed?

Because speed is easy to benchmark, demonstrate, and market. Trust, ownership, and organizational alignment are harder to showcase but far more important.

What should organizations focus on instead of speed?

They should focus on:

- Clear metric definitions

- Explicit ownership

- Reduced reconciliation effort

- Faster, more confident decision-making

If “faster queries” is your headline win, you’re probably measuring convenience, not success. Data Warehouse Consulting succeeds when it improves decisions, not just dashboards.