
If you’ve ever worked on a traditional data warehouse, you know how painful it is to duplicate large datasets for testing, development, or analytics experiments. Creating full copies means extra storage, more data movement, and higher costs. Enter Snowflake’s zero-copy cloning, one of the platform’s standout features for rapid, cost-efficient data duplication.

Zero-copy cloning lets you instantly create a clone of a table, schema, or even an entire database without physically copying the data. This means no initial storage overhead, no long wait times, and huge savings in both money and effort.
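As a rough sketch, the same CLONE keyword applies at the schema and database level. The object names below (analytics, analytics_dev, prod_db, prod_db_dev) are hypothetical placeholders, not names from a real environment.

```sql
-- Hypothetical object names; substitute your own.

-- Clone a schema together with the objects it contains.
CREATE SCHEMA analytics_dev CLONE analytics;

-- Clone an entire database, including all of its schemas.
CREATE DATABASE prod_db_dev CLONE prod_db;
```

Cloning a container clones the objects inside it in one statement, so there is no need to clone each table separately.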

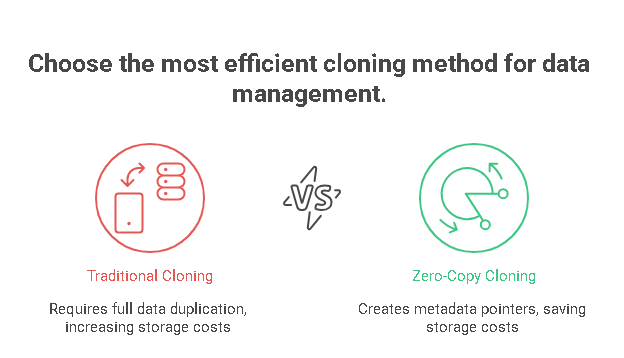

How Zero-Copy Cloning Differs from Traditional Copies

Traditional cloning makes full copies of your data, so every duplicate takes up more storage and increases costs. Snowflake works differently. Because of the way it organizes data and tracks metadata, it can create clones without actually copying the data. This means you get instant duplicates without extra storage costs.

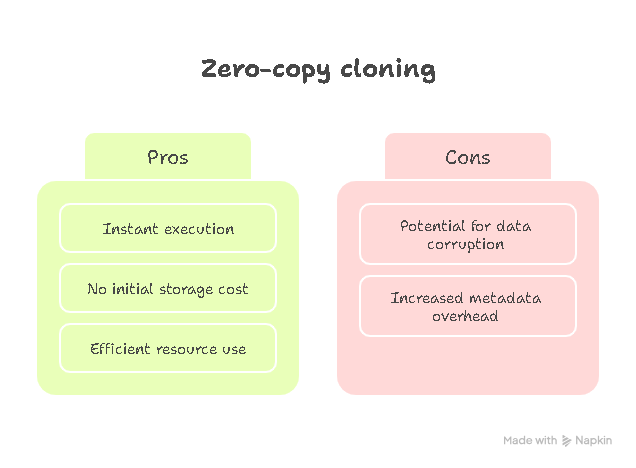

When you create a zero-copy clone, Snowflake simply records a metadata pointer to the existing data. Only when you modify data in the clone (through updates, inserts, or deletes) does Snowflake start writing new data blocks. This is known as copy-on-write, which means:

● Clones are created almost instantly, because creation writes metadata rather than copying data.

● You pay for extra storage only when data diverges from the original.
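To make the copy-on-write behavior concrete, here is a minimal sketch. It assumes a source table production.orders with status and order_date columns; those column names are invented for illustration.

```sql
-- Hypothetical names: production.orders is the source, dev_orders the clone.
-- The status and order_date columns are assumed for illustration.
CREATE TABLE dev_orders CLONE production.orders;

-- At this point both tables reference the same micro-partitions.
-- Changing rows in the clone writes new micro-partitions that belong
-- to the clone only; this is where extra storage starts to accrue.
UPDATE dev_orders
SET status = 'TEST'
WHERE order_date >= '2024-01-01';

-- The source is untouched: rows changed in the clone do not show up here.
SELECT COUNT(*) AS test_rows_in_source
FROM production.orders
WHERE status = 'TEST';
```

Unchanged micro-partitions stay shared, so the clone is billed only for the partitions rewritten by the UPDATE.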

For teams running multiple dev, QA, or experimentation environments, the savings add up quickly, because each environment would otherwise need its own full copy of the data.

How Zero-Copy Cloning Works

Technically, Snowflake stores your data in immutable micro-partitions. When you create a clone, Snowflake just points new metadata references at those same partitions.

Imagine you have a 5 TB table. Cloning it traditionally means paying for another 5 TB. In Snowflake:


CREATE TABLE dev_orders CLONE production.orders;

This runs as a metadata-only operation and adds no storage cost at first. Only changes to dev_orders start consuming additional space.

Practical Use Cases for Zero-Copy Cloning

Development & Testing:

Spin up a safe playground for developers to test schema changes or run destructive queries without risking production data.
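A sandbox along these lines might look like the following sketch. The schema name dev_sandbox, the discount_code column, and the order_date filter are all hypothetical.

```sql
-- Hypothetical names throughout; production is the schema holding orders.
CREATE SCHEMA dev_sandbox CLONE production;

-- Try a schema change against the clone.
ALTER TABLE dev_sandbox.orders ADD COLUMN discount_code VARCHAR;

-- Run a destructive statement that would be risky in production.
DELETE FROM dev_sandbox.orders
WHERE order_date < '2020-01-01';

-- Throw the sandbox away when finished.
DROP SCHEMA dev_sandbox;
```

None of these statements touch the production schema, because writes to a clone never modify the source's micro-partitions.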

Data Science Sandboxes:

Data scientists can explore large datasets or train models on clones without heavy provisioning.

Fast Point-in-Time Analytics:

Want to analyze your data exactly as it was yesterday? Clone a historical state (even leveraging Time Travel) and run your analysis, without affecting current workloads.
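For example, a clone can be combined with a Time Travel AT clause. This is a sketch with hypothetical table names and an arbitrary example timestamp; the requested point in time has to fall inside the source table's Time Travel retention period, otherwise the statement fails.

```sql
-- Hypothetical names; the offset is expressed in seconds.
-- Clone the table as it existed 24 hours ago.
CREATE TABLE orders_yesterday CLONE production.orders
  AT (OFFSET => -60*60*24);

-- Alternatively, clone the state at an explicit timestamp
-- (the value here is only an example).
CREATE TABLE orders_snapshot CLONE production.orders
  AT (TIMESTAMP => '2024-06-01 00:00:00'::TIMESTAMP_LTZ);
```

Queries against orders_yesterday then run on a fixed snapshot, while production.orders keeps receiving new data.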

Interested in Time Travel for querying historical data? Check out our guide on Snowflake Time Travel & data recovery.

Cost Benefits of Zero-Copy Cloning

1. No upfront storage duplication:

You avoid doubling your storage footprint. Clones only use extra space when data actually changes.

2. Fewer data pipelines:

Need to prepare dev/test copies? No heavy ETL scripts, just a simple CLONE command.

3. Faster, cheaper experiments:

Because clones are instant, your team spends less time waiting (or consuming compute) to build copies, indirectly lowering compute costs too.
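As one possible way to replace a copy pipeline, a dev environment can be refreshed with a single statement and, optionally, scheduled with a task. This sketch assumes dev and production are schemas in the same database, that a separate ops schema holds the task, and that a warehouse named dev_wh exists; all of these names are hypothetical.

```sql
-- Hypothetical names: dev, production, ops, and dev_wh are placeholders.
-- One statement rebuilds the dev schema from production.
-- Note: this discards anything that currently exists in dev.
CREATE OR REPLACE SCHEMA dev CLONE production;

-- Optional: schedule the refresh daily at 06:00 UTC.
-- The task lives in a separate schema so the refresh does not replace it.
CREATE OR REPLACE TASK ops.refresh_dev
  WAREHOUSE = dev_wh
  SCHEDULE = 'USING CRON 0 6 * * * UTC'
AS
  CREATE OR REPLACE SCHEMA dev CLONE production;

-- Tasks are created suspended and must be resumed before they run.
ALTER TASK ops.refresh_dev RESUME;
```

Whether a scheduled refresh is appropriate depends on how long developers need their changes in dev to survive.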

Best Practices for Using Zero-Copy Cloning

Keep an eye on divergence:

Remember that as soon as you start changing data in the clone, it begins to consume additional storage. Regularly monitor clone size.

Combine with transient tables if short-lived:

If your clone is purely for temporary analysis, consider creating it as a transient table. Transient tables have no Fail-safe period, so you avoid long-term Fail-safe storage costs.

Curious about managing short-lived data? See our blog on transient tables and Snowflake cost efficiency.
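A short-lived clone could be set up roughly like this. The table name scratch_orders is hypothetical, and setting retention to 0 days is a choice for throwaway data, not a requirement.

```sql
-- Hypothetical names. A permanent table can be cloned as a transient one.
CREATE TRANSIENT TABLE scratch_orders CLONE production.orders;

-- Optional: disable Time Travel on the scratch copy as well,
-- so changed data is not retained after it is overwritten or dropped.
ALTER TABLE scratch_orders SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- Drop the clone once the analysis is done.
DROP TABLE scratch_orders;
```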

Pair with warehouse right-sizing:

Cloning might let multiple teams work in parallel. Ensure your warehouses are right-sized so increased activity doesn’t spike your compute costs. (Tip: Check our Snowflake query optimization tips here.)
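One way to keep clone-related compute in check is a small, dedicated warehouse that suspends itself when idle. The warehouse name and the specific size and timeout below are assumptions to adapt, not recommendations.

```sql
-- Hypothetical warehouse for work on clones; size and timeout are examples.
CREATE WAREHOUSE IF NOT EXISTS dev_wh
  WAREHOUSE_SIZE = 'XSMALL'
  AUTO_SUSPEND = 60          -- suspend after 60 seconds of inactivity
  AUTO_RESUME = TRUE         -- wake up automatically on the next query
  INITIALLY_SUSPENDED = TRUE;
```

Keeping dev and test work on its own warehouse also makes its credit usage easy to separate from production in metering reports.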

Monitoring Clones and Their Impact

While cloning saves initial storage, it’s important to monitor clone growth over time. Use:

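- ACCOUNT_USAGE.TABLE_STORAGE_METRICS to track clones and their storage. Tables that share a CLONE_GROUP_ID descend from the same original, and ACTIVE_BYTES shows how much storage each one owns.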

- WAREHOUSE_METERING_HISTORY to see if parallel work on clones increases warehouse utilization and credit consumption.
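The two queries below are a sketch of such monitoring, using the TABLE_STORAGE_METRICS and WAREHOUSE_METERING_HISTORY views in the SNOWFLAKE.ACCOUNT_USAGE schema. The 30-day window and the GB conversion are arbitrary choices, and ACCOUNT_USAGE views lag behind real time.

```sql
-- Storage per table, grouped by clone lineage. Tables that share
-- micro-partitions through cloning have the same CLONE_GROUP_ID.
SELECT
    clone_group_id,
    table_catalog,
    table_schema,
    table_name,
    active_bytes / POWER(1024, 3)             AS active_gb,
    retained_for_clone_bytes / POWER(1024, 3) AS retained_for_clone_gb
FROM snowflake.account_usage.table_storage_metrics
WHERE deleted = FALSE
ORDER BY clone_group_id, active_bytes DESC;

-- Daily credits per warehouse over the last 30 days.
SELECT
    warehouse_name,
    DATE_TRUNC('day', start_time) AS usage_day,
    SUM(credits_used)             AS credits
FROM snowflake.account_usage.warehouse_metering_history
WHERE start_time >= DATEADD('day', -30, CURRENT_TIMESTAMP())
GROUP BY warehouse_name, usage_day
ORDER BY usage_day, warehouse_name;
```

A clone whose active_gb keeps growing has diverged substantially from its source and is no longer close to free.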

Need a quick overview of what metrics to monitor? Check our guide on Snowflake performance metrics.

Snowflake’s zero-copy cloning isn’t just a technical luxury; it’s a smart financial tool. It lets your teams experiment, test, and analyze without paying double for storage, and without weeks of data pipeline prep.

Combined with thoughtful warehouse management and retention policies, cloning helps keep your Snowflake environment flexible, scalable, and budget-friendly.


FAQ

A clone adds no storage cost at creation. Storage is only used once data diverges from the source.

Absolutely. By combining cloning with Time Travel, you can clone data exactly as it existed at any point within your retention period.

Clones initially consume no extra space; they start taking storage only as changes diverge.