Data Engineering Services

Data Prism offers end-to-end data engineering solutions, from high-performance pipelines and data integration to scalable architectures for business intelligence.

Data Engineering Services We Offer

We bring clarity and control to complex data environments. From real-time ETL pipelines to modern data lakes and cloud migration, our services are tailored to drive agility, security and performance across your data stack.

Data Integration

Unify data from multiple sources and platforms into a single, consistent view. We synchronize systems, APIs and databases so that everyone in your organization has seamless access to the same, up-to-date data.
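As a minimal sketch of what a single, consistent view can mean in practice, the Python example below merges customer records from two hypothetical sources (a CRM export and a billing system) on a normalized email address. The source names, field names and the precedence rule (later sources overwrite earlier ones, empty values never overwrite) are assumptions for illustration only.

```python
from typing import Iterable

Record = dict[str, str]

def normalize_email(email: str) -> str:
    """Normalize the join key so 'Ann@X.com ' and 'ann@x.com' match."""
    return email.strip().lower()

def unify(*sources: Iterable[Record]) -> dict[str, Record]:
    """Merge records from several sources into one view keyed by email.

    Sources are applied in order, so a later source overwrites a field set
    by an earlier one (an assumed precedence rule). Empty values are skipped
    so they never erase data another system already provided.
    """
    unified: dict[str, Record] = {}
    for source in sources:
        for record in source:
            key = normalize_email(record["email"])
            merged = unified.setdefault(key, {"email": key})
            for field, value in record.items():
                if field != "email" and value:
                    merged[field] = value
    return unified

# Hypothetical sample data from two systems describing the same customer.
crm = [{"email": "Ann@Example.com ", "name": "Ann Lee", "phone": ""}]
billing = [{"email": "ann@example.com", "plan": "pro", "phone": "555-0100"}]

view = unify(crm, billing)
print(view["ann@example.com"])
```

Real integrations usually need fuzzier matching than an exact email key, but the shape of the problem (pick a join key, normalize it, define which source wins) stays the same.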

Data/ETL Pipelines

Automate the entire data journey — from ingestion to transformation and delivery. We build ETL/ELT pipelines optimized for scale, real-time streaming and efficient orchestration.

Data Warehousing

Create centralized repositories that support fast querying and scalable storage. Our solutions are designed for BI tools, dashboards and high-volume analytics.

Data Cloud Strategies

Leverage the power of cloud platforms like AWS, GCP and Azure with custom strategies that match your infrastructure, budget and growth goals.

Data Migration

Seamlessly migrate legacy systems, databases, or cloud platforms with zero data loss and minimal downtime. We ensure a secure and smooth transition to your new architecture.
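A typical way to confirm that a migration lost no data is to compare row counts and an order-independent fingerprint of the rows in the source and target after the copy. The Python sketch below uses in-memory SQLite databases to stand in for both the legacy and the new system; the `customers` table, its schema and the SHA-256-over-sorted-rows fingerprint are assumptions for illustration, and very large tables usually call for chunked or database-side checksums instead.

```python
import hashlib
import sqlite3

def table_fingerprint(conn: sqlite3.Connection, table: str) -> tuple[int, str]:
    """Return (row count, SHA-256 digest of the rows), independent of row order."""
    # The table name is interpolated directly, so it must come from trusted config.
    rows = conn.execute(f"SELECT * FROM {table}").fetchall()
    digest = hashlib.sha256()
    for text in sorted(repr(row) for row in rows):
        digest.update((text + "\n").encode("utf-8"))
    return len(rows), digest.hexdigest()

def migrate_and_verify(source: sqlite3.Connection, target: sqlite3.Connection, table: str) -> bool:
    """Copy `table` from source to target, then confirm no rows were lost or altered."""
    rows = source.execute(f"SELECT id, name FROM {table}").fetchall()
    target.execute(f"CREATE TABLE {table} (id INTEGER PRIMARY KEY, name TEXT)")
    target.executemany(f"INSERT INTO {table} VALUES (?, ?)", rows)
    target.commit()
    return table_fingerprint(source, table) == table_fingerprint(target, table)

# Hypothetical legacy database with a small customers table.
legacy = sqlite3.connect(":memory:")
legacy.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
legacy.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ann"), (2, "Bo")])

new_db = sqlite3.connect(":memory:")
ok = migrate_and_verify(legacy, new_db, "customers")
print(ok)  # True
```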

Data Lake Implementation

Build modern data lakes to store structured, semi-structured and unstructured data in its raw form — enabling advanced analytics, ML and data discovery.

Data Management

Govern and maintain your data with best-in-class management practices covering accessibility, lineage, compliance and lifecycle optimization.

Technologies We Use for Data Solutions

Programming Languages

Node.js

Python

JavaScript

Data Orchestration

Apache Airflow

Azure Data Factory

Databricks

Dagster

RESTful Services

Postman

REST

SOAP

Requests

Databases

MySQL

SQL Server

PostgreSQL

MongoDB

DynamoDB

SQLite

Redis

Firebase

Data Warehouses

Snowflake

BigQuery

Redshift

Data Visualization

Power BI

Tableau

Looker Studio

Cloud Platforms

AWS

Azure

GCP

Heroku

Data Transformation

AWS Glue

Talend

Apache Kafka

dbt

Security

OAuth

SSL/TLS

Containerization

Docker

Kubernetes

Our Data Engineering Process

We collect data from diverse structured and unstructured sources, including databases, APIs, and files, ensuring a continuous and secure flow into your systems for downstream processing.
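A continuous flow from a source system is often implemented incrementally: each run pulls only the records changed since the last successful run, tracked by a watermark. The Python sketch below assumes a hypothetical source whose records carry an `updated_at` timestamp; the in-memory list stands in for a real database query or paginated API call.

```python
from datetime import datetime, timezone

def ingest_incremental(source_rows, watermark):
    """Return the rows changed since `watermark`, plus the watermark for the next run.

    `source_rows` stands in for a query such as
    SELECT ... WHERE updated_at > :watermark against the real source.
    """
    new_rows = [row for row in source_rows if row["updated_at"] > watermark]
    # Only advance the watermark when something new arrived.
    next_watermark = max((row["updated_at"] for row in new_rows), default=watermark)
    return new_rows, next_watermark

# Hypothetical source records with UTC change timestamps.
rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 1, 3, tzinfo=timezone.utc)},
]
batch, watermark = ingest_incremental(rows, datetime(2024, 1, 2, tzinfo=timezone.utc))
# A second run with the saved watermark picks up nothing new.
repeat, same_watermark = ingest_incremental(rows, watermark)
```

The strict `>` comparison assumes no two changes share a timestamp; production pipelines often add a tie-breaker such as the primary key so late-arriving rows with an identical timestamp are not skipped.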

Before any transformation begins, we apply rigorous checks to validate data accuracy, completeness, and consistency, preventing issues that could compromise reporting or decision-making later.
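Checks like these can be expressed as small, testable rules. The Python sketch below assumes records arrive as dictionaries with hypothetical `order_id`, `amount` and `currency` fields, and maps one rule to each dimension named above: completeness (required fields present), accuracy (values in a plausible range) and consistency (values drawn from an agreed set). The rules themselves are illustrative, not a fixed rule set.

```python
REQUIRED = ("order_id", "amount", "currency")
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}

def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    errors = []
    # Completeness: every required field is present and non-empty.
    for field in REQUIRED:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    # Accuracy: amounts must be non-negative numbers.
    amount = record.get("amount")
    if amount not in (None, "") and (not isinstance(amount, (int, float)) or amount < 0):
        errors.append("amount must be a non-negative number")
    # Consistency: currency codes must come from the agreed set.
    currency = record.get("currency")
    if currency and currency not in ALLOWED_CURRENCIES:
        errors.append(f"unknown currency {currency!r}")
    return errors

def split_valid(records):
    """Separate clean records from rejects so bad data never reaches transformation."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected

batch = [
    {"order_id": "A1", "amount": 19.99, "currency": "USD"},
    {"order_id": "A2", "amount": -5, "currency": "usd"},
    {"amount": 10, "currency": "EUR"},
]
valid, rejected = split_valid(batch)
```

Keeping the rejected records together with their reasons, rather than silently dropping them, makes it possible to report data-quality issues back to the source owners.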

Using ETL/ELT processes, we clean, enrich, and reformat raw data into a structured, analytics-ready format tailored to your specific business intelligence or machine learning use cases.
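The clean, enrich and reformat steps can be sketched for a single record in plain Python. The raw field names, the date format of the source, the country lookup table and the output shape are all hypothetical; in practice this logic often lives in SQL models (for example with dbt) or a dataframe library rather than hand-written functions.

```python
from datetime import datetime

# Hypothetical reference data used for enrichment.
COUNTRY_NAMES = {"US": "United States", "DE": "Germany"}

def transform(raw: dict) -> dict:
    """Turn one raw order event into an analytics-ready row."""
    # Clean: trim whitespace and normalize casing.
    email = raw["email"].strip().lower()
    country = raw["country"].strip().upper()
    # Reformat: parse a US-style date into ISO 8601 and store money as integer cents.
    order_date = datetime.strptime(raw["date"], "%m/%d/%Y").date().isoformat()
    amount_cents = round(float(raw["amount"]) * 100)
    # Enrich: add a readable country name from the lookup table.
    return {
        "email": email,
        "order_date": order_date,
        "amount_cents": amount_cents,
        "country_code": country,
        "country_name": COUNTRY_NAMES.get(country, "Unknown"),
    }

row = transform({"email": " Ann@Example.COM", "country": "us ", "date": "03/15/2024", "amount": "19.99"})
```

Storing amounts as integer cents avoids floating-point drift when the data is later summed in reports.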

Transformed data is securely stored in high-performance storage solutions like data warehouses, lakes, or cloud-native repositories, designed to scale and support real-time or batch querying.

We automate recurring workflows and manage task dependencies using orchestration tools like Airflow or Prefect, ensuring timely, error-free, and fully governed data operations.
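Orchestrators such as Airflow and Prefect add scheduling, monitoring and much more, but the core of dependency management is running each task only after its upstream tasks have finished. The sketch below illustrates just that idea with Python's standard-library `graphlib` and a simple retry loop; the task names are hypothetical, and this is not how Airflow or Prefect are implemented internally.

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks, dependencies, max_retries=2):
    """Run each task after all of its upstream tasks, retrying failures.

    tasks: mapping of task name -> zero-argument callable.
    dependencies: mapping of task name -> set of upstream task names.
    Returns the task names in the order they completed.
    """
    completed = []
    # static_order() yields tasks in a dependency-respecting order
    # and raises graphlib.CycleError if the graph contains a cycle.
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # out of retries: surface the failure
        completed.append(name)
    return completed

calls = {"transform": 0}

def flaky_transform():
    # Fails on the first call to demonstrate the retry path.
    calls["transform"] += 1
    if calls["transform"] == 1:
        raise RuntimeError("transient error")

tasks = {
    "ingest": lambda: None,
    "validate": lambda: None,
    "transform": flaky_transform,
    "load": lambda: None,
    "report": lambda: None,
}
dependencies = {
    "validate": {"ingest"},
    "transform": {"validate"},
    "load": {"transform"},
    "report": {"load"},
}
order = run_pipeline(tasks, dependencies)
```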

The final processed data is delivered through dashboards, APIs, or reporting layers, making it accessible to business users, analysts, and downstream systems in real time or at scheduled intervals.

Success Stories

We’ve partnered with fast-growing startups and global enterprises to design intelligent data ecosystems that power smarter decisions and digital growth.

Amazon Vendor Central Project

Our smart-security clients, August Home and Yale Security, are major vendors on Amazon and needed a way to extract useful insights from their traffic, sales, inventory, and sales-forecasting data. The reports had to be generated daily and according to each client's specific criteria.

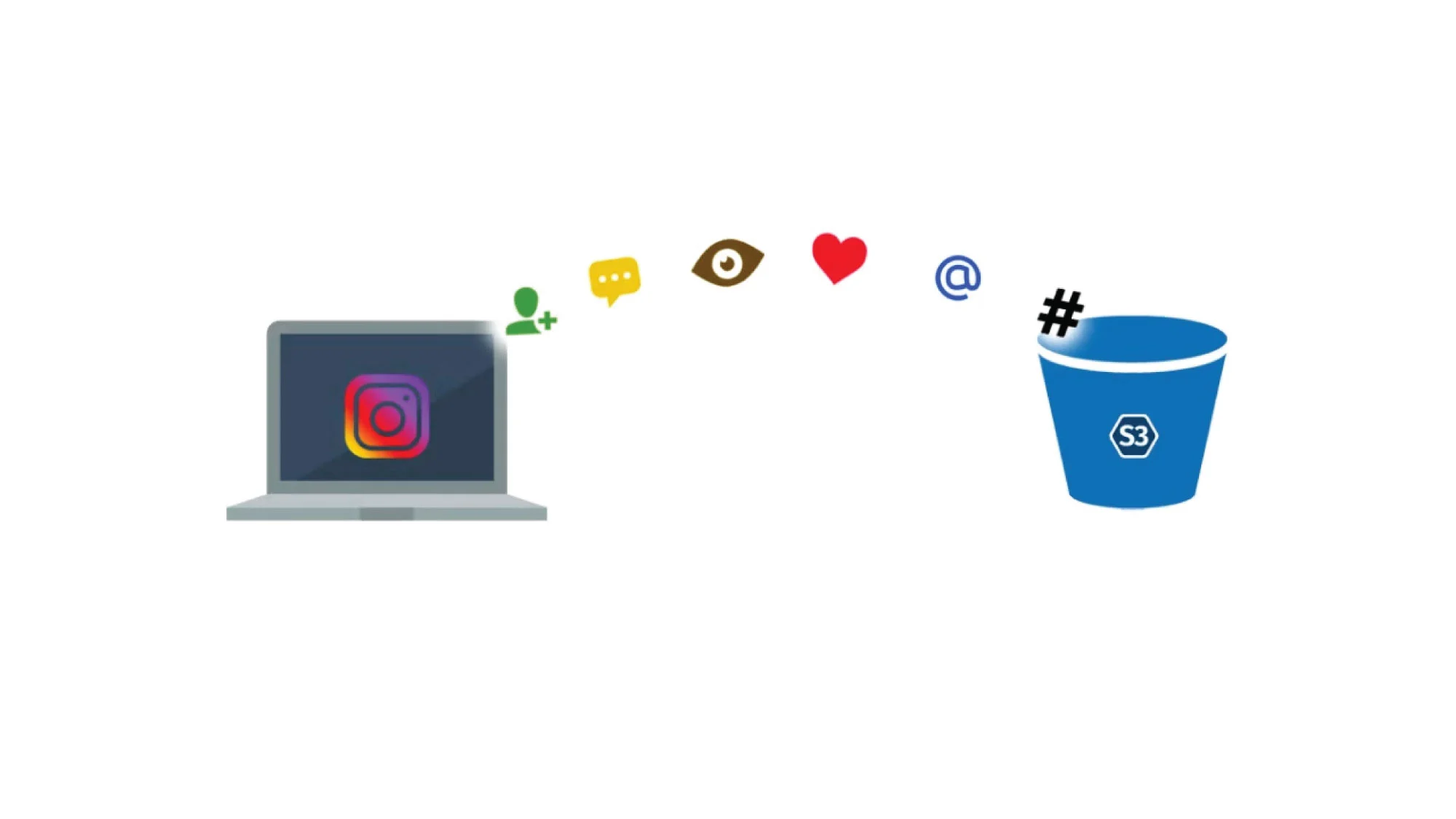

Instagram-Facebook API Integration (Freestak.com)

Freestak, a marketplace for endurance influencers, wanted to connect key insights from its marketing campaigns with the influencers behind them. This required retrieving post data and engagement metrics for the posts, stories, and reels of its Instagram influencers.

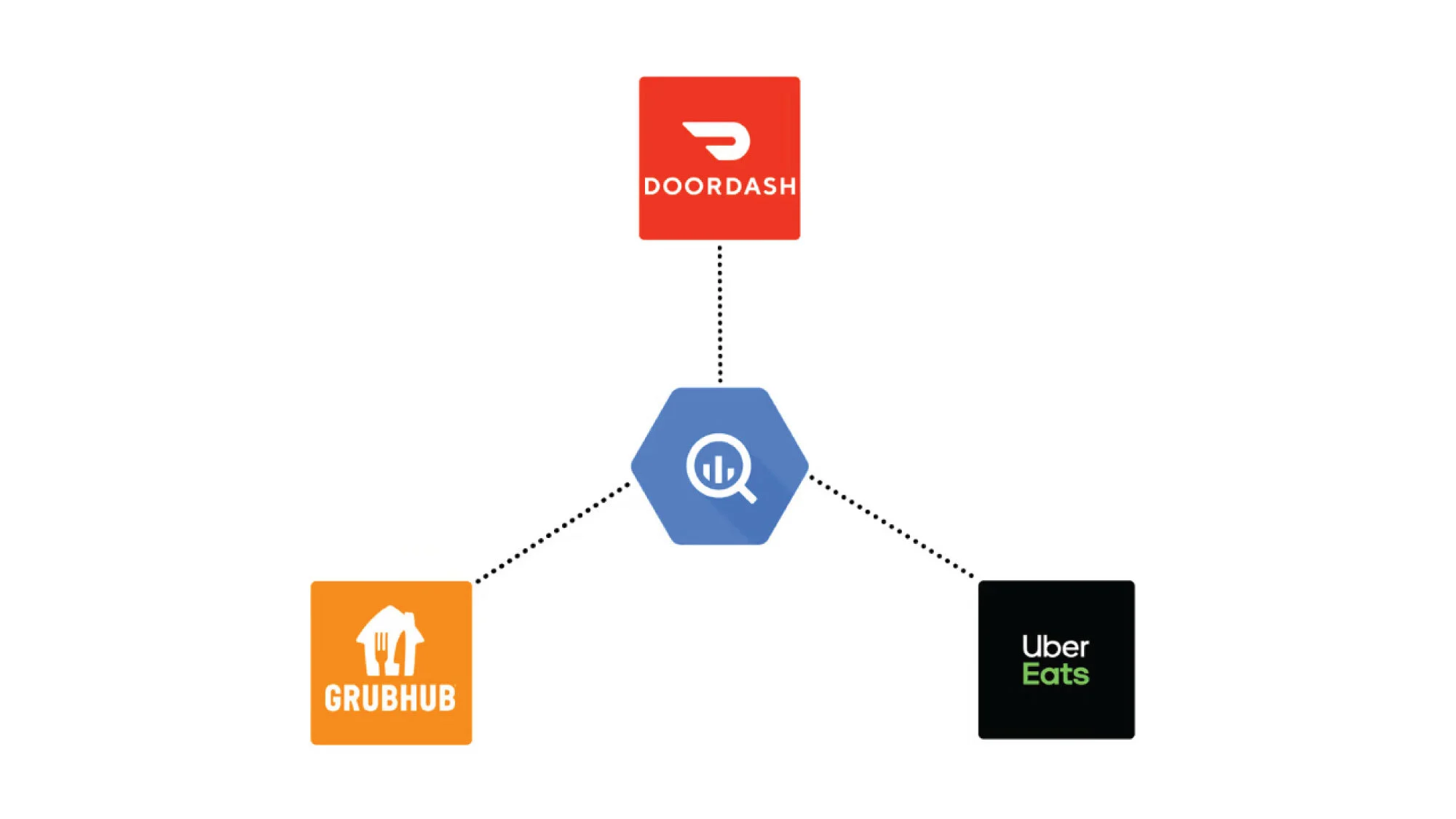

Food Ordering Scraper (Loop)

Our client needed merchant-side data on orders placed with restaurants through three major ordering platforms (DoorDash, Uber Eats, and Grubhub). We had to implement a smart algorithm that could retrieve this large volume of data while avoiding blocking, duplicate records, and other problems.
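Two techniques commonly used against these problems are exponential backoff with jitter, so that repeated requests slow down instead of triggering rate limits, and de-duplication on a stable key such as an order ID. The Python sketch below shows both; `fetch_page`, the `RateLimited` exception, the retry limits and the delays are hypothetical stand-ins, not details of the actual project or of any platform's API.

```python
import random
import time

class RateLimited(Exception):
    """Raised by the (hypothetical) client when a platform answers HTTP 429."""

def with_backoff(fetch, *, retries=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch(), waiting base_delay * 2**attempt plus random jitter after each rate limit."""
    for attempt in range(retries):
        try:
            return fetch()
        except RateLimited:
            if attempt == retries - 1:
                raise  # give up after the last allowed attempt
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))

def collect_orders(pages, seen_ids=None):
    """Yield each order once, skipping IDs already seen on earlier pages or runs."""
    seen = set() if seen_ids is None else seen_ids
    for page in pages:
        for order in page:
            if order["order_id"] in seen:
                continue
            seen.add(order["order_id"])
            yield order

# Hypothetical client that is rate-limited twice, then returns a page of orders.
responses = [RateLimited(), RateLimited(), [{"order_id": "D-1"}, {"order_id": "D-2"}]]

def fetch_page():
    result = responses.pop(0)
    if isinstance(result, Exception):
        raise result
    return result

delays = []  # record the waits instead of actually sleeping
page = with_backoff(fetch_page, base_delay=0.01, sleep=delays.append)
overlapping = [{"order_id": "D-2"}, {"order_id": "D-3"}]
orders = list(collect_orders([page, overlapping]))
```

Passing `sleep` as a parameter keeps the backoff logic testable without real waiting, and persisting `seen_ids` between runs extends the same de-duplication across scheduled scrapes.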

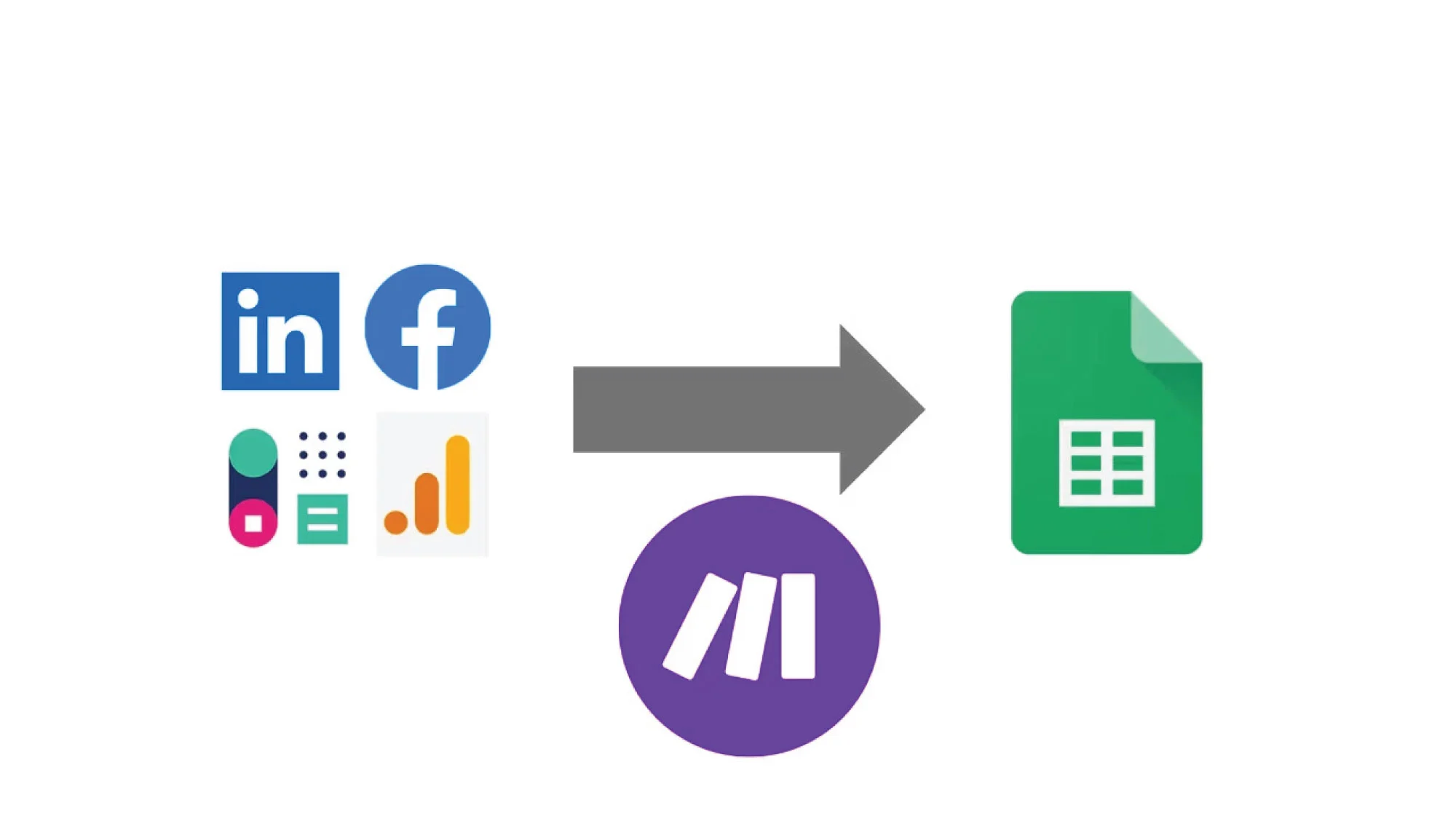

High Growth Startup Finder (Redpoint Ventures)

As a venture capital firm, our client found it time-consuming and expensive to source startup leads worth investigating for potential funding rounds. We were asked to build a smart algorithm to identify startups that could be the client's next great investment.

Automated Newsletter Emails

We fetched user data from an API and checked each user's subscriptions to build a filtered list of subscribers. We then created a templated transactional email and used it to send the relevant newsletters to every subscriber on that list.
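The filter-then-template flow can be sketched in Python with the standard library's `string.Template`. The user fields, subscription names and template text are hypothetical, and the sketch only builds the messages; actually sending them would go through whichever transactional email service the project used, which is not specified here.

```python
from string import Template

EMAIL_TEMPLATE = Template(
    "Subject: $newsletter, $issue\n\n"
    "Hi $first_name,\n\n"
    "Here is the latest issue of $newsletter.\n"
)

def subscribers_of(users, newsletter):
    """Keep only users whose subscriptions include `newsletter`."""
    return [u for u in users if newsletter in u.get("subscriptions", [])]

def build_emails(users, newsletter, issue):
    """Render one personalized message per subscriber as (recipient, body) pairs."""
    return [
        (u["email"], EMAIL_TEMPLATE.substitute(
            newsletter=newsletter, issue=issue, first_name=u["first_name"]))
        for u in subscribers_of(users, newsletter)
    ]

# Hypothetical user data as it might come back from the API.
users = [
    {"email": "ann@example.com", "first_name": "Ann", "subscriptions": ["Weekly Digest"]},
    {"email": "bo@example.com", "first_name": "Bo", "subscriptions": []},
]
emails = build_emails(users, "Weekly Digest", "Issue 12")
```

`Template.substitute` raises `KeyError` if a placeholder has no value, which surfaces incomplete user data before any email goes out rather than sending a half-filled message.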