Introduction

Many Data Warehouse Consulting engagements are declared successful.

The project finishes.

The warehouse is live.

Dashboards load quickly.

Pipelines are stable.

And yet, leadership keeps asking the same uncomfortable questions:

- “Are we actually getting value from this?”

- “Why do people still argue over numbers?”

- “Why doesn’t this feel settled?”

These questions are not signs of impatience.

They signal that something fundamental is being missed: a gap between what was built and how the organization actually uses data to make decisions.

The Core Problem

Most organizations measure Data Warehouse Consulting the way they measure engineering projects.

They look at:

- Delivery milestones

- Performance improvements

- Tickets closed

- Systems stabilized

Those metrics answer one question well:

“Did the technical work get done?”

They do not answer the question leadership actually cares about:

“Did this change how the organization makes decisions?”

When that question goes unanswered, confidence erodes even if every technical milestone was met.

Data Warehouse Consulting is not just a build exercise.

It is an organizational change effort, one that affects ownership, trust, accountability, and behavior.

When it’s measured like pure engineering work, success looks impressive on paper while nothing meaningfully changes in practice.

Why This Mismatch Persists

Engineering metrics are:

- Familiar

- Objective

- Easy to report

Organizational outcomes are:

- Messier

- Harder to quantify

- Politically uncomfortable

So teams default to what’s easiest to measure, even when it’s not what matters.

What This Article Will Do

This article is about correcting that mismatch.

It will:

- Redefine what “success” actually means in Data Warehouse Consulting

- Introduce metrics that reflect real organizational impact, not just delivery

- Help leaders evaluate outcomes honestly, without relying on vanity metrics

Because if your warehouse is technically sound but leadership still isn’t confident, the problem isn’t execution.

It’s measurement. Confidence fails when ownership, meaning, and accountability are never treated as deliverables, so no one checks whether they were delivered.

And until success is measured correctly, Data Warehouse Consulting will continue to look finished, while failing to deliver the value it promised.

Why Traditional Metrics Fail

Most Data Warehouse Consulting engagements rely on a familiar set of success metrics. They’re concrete, easy to track, and widely accepted.

They’re also deeply misleading.

Common but Misleading Measures

Teams typically point to:

- Pipelines delivered

- Dashboards built

- Queries optimized

- Tools implemented

These metrics create a sense of momentum. They show that work happened. They are useful for project management.

But they are a poor proxy for success.

Why These Metrics Are Insufficient

The core issue is simple:

They measure activity, not impact.

Activity metrics confirm that work occurred. Impact metrics confirm that behavior changed.

A team can deliver dozens of pipelines while:

- Business teams still debate numbers

- Analysts still maintain parallel logic

- Executives still hesitate to act on dashboards

Delivery volume often increases precisely because alignment problems remain unresolved: when teams cannot agree on a shared pipeline or dashboard, each one asks for its own.

From an engineering standpoint, the project is complete.

From an organizational standpoint, nothing has been resolved.

What These Metrics Completely Miss

Traditional metrics say almost nothing about the outcomes leadership actually cares about.

They cannot tell you anything about:

- Trust

Do people believe the numbers without validating them elsewhere?

- Adoption

Are teams using the warehouse by default, or only when forced?

- Decision quality

Are decisions faster, clearer, and less contested than before?

Leadership cares less about what was built and more about whether uncertainty has been reduced.

Why Organizations Keep Using Them Anyway

These metrics persist because they are:

- Easy to quantify

- Politically safe

- Familiar to delivery teams

Trust, adoption, and decision quality are harder to measure and harder to own. They force organizations to confront questions that cannot be solved through execution alone.

So organizations default to what’s easiest to count, even when it doesn’t reflect success.

The Hidden Cost

When traditional metrics define success:

- Consulting engagements are declared “done” too early

- Deeper organizational issues remain untouched

- Leadership senses the gap but can’t articulate why

The result is a warehouse that works technically but fails strategically. Strategic failure occurs when delivery success masks unresolved organizational friction.

To measure Data Warehouse Consulting honestly, teams need to move beyond delivery metrics and start measuring behavioral change.

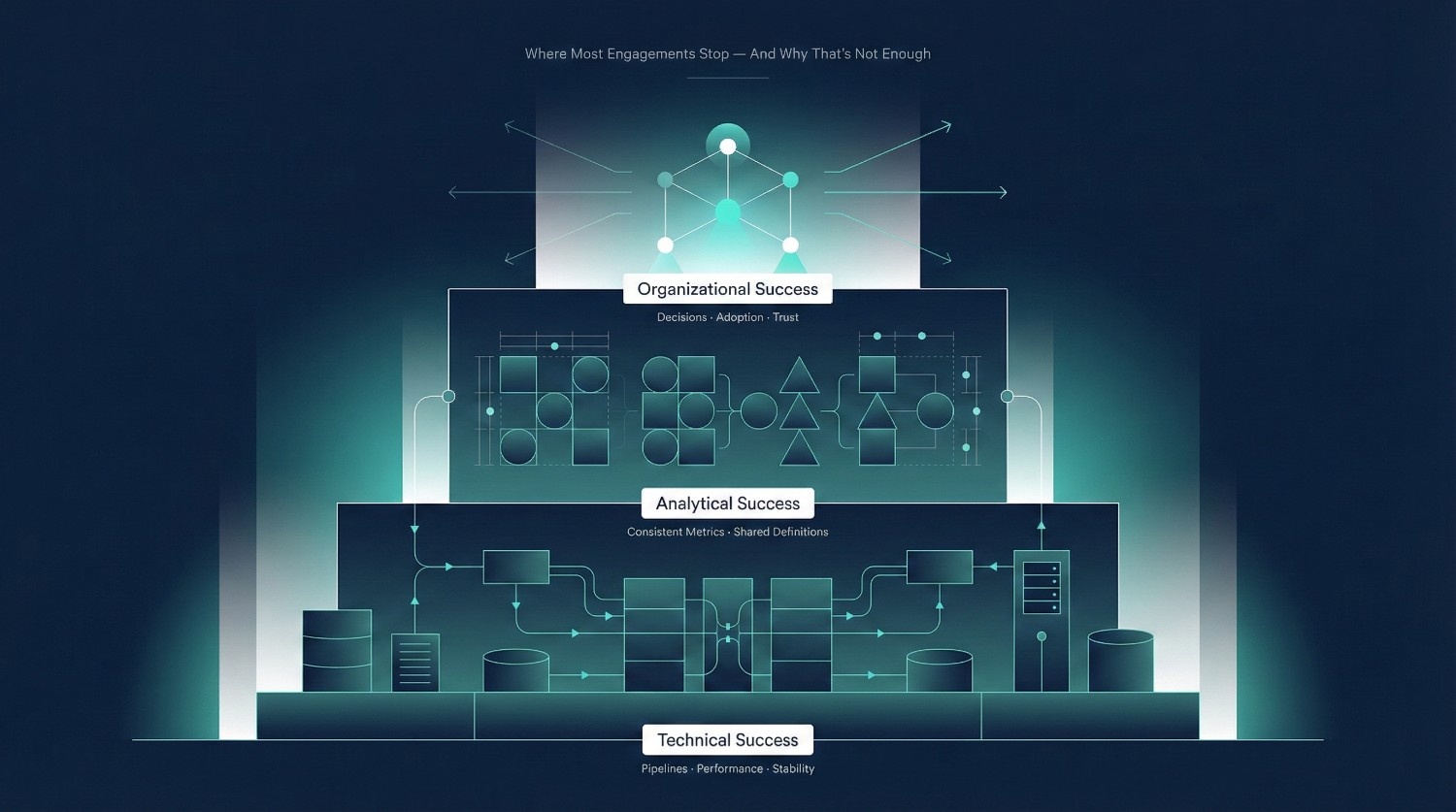

The Three Levels of Success in Data Warehouse Consulting

Not all successful data warehouse initiatives are successful in the same way.

One of the biggest reasons Data Warehouse Consulting disappoints is that organizations stop measuring success too early, often at the lowest level where progress is easiest to demonstrate.

In reality, there are three distinct levels of success. Each level represents a different kind of progress, and confusing one for another is a common source of disappointment.

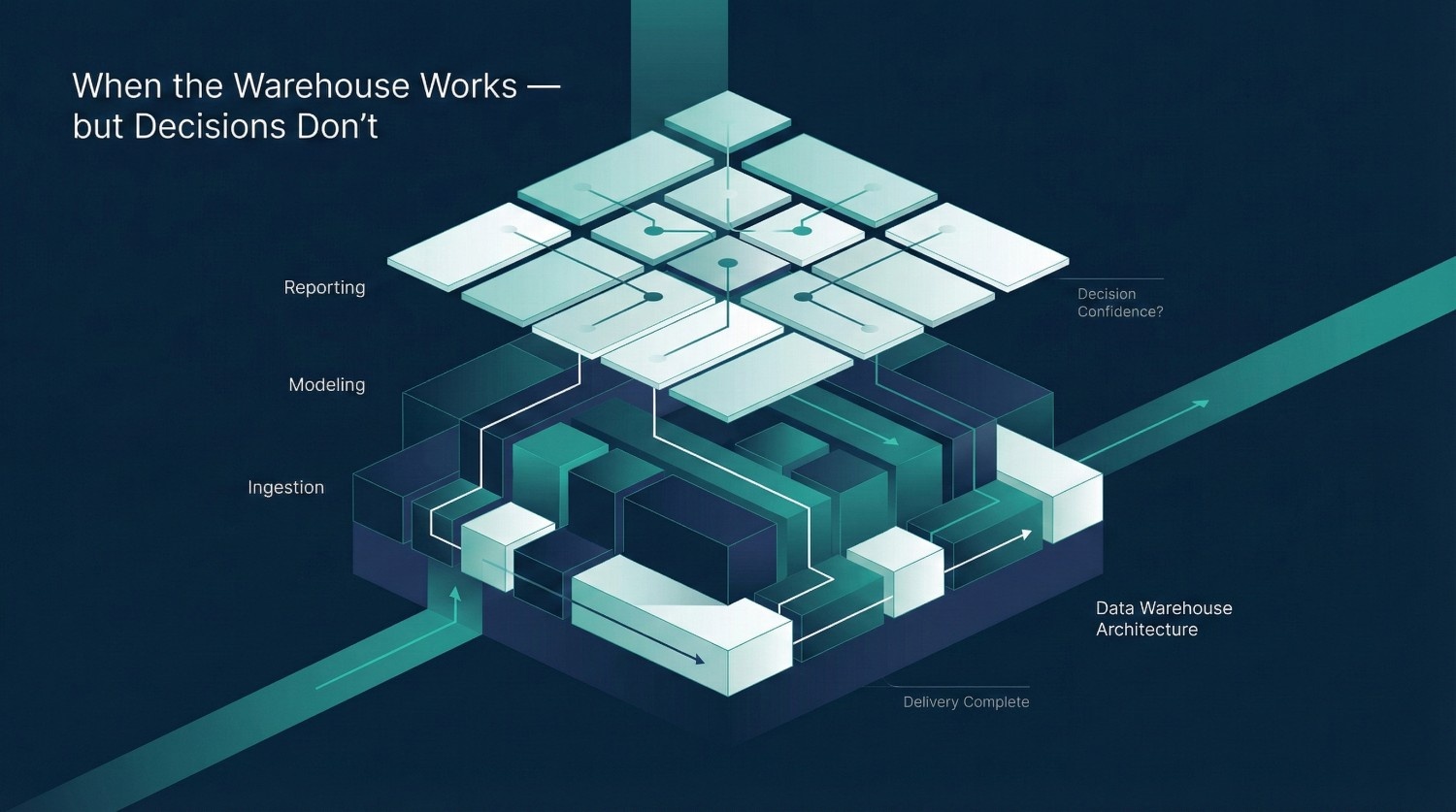

Level 1: Technical Success

This is where most engagements end. Stopping here is understandable, but it optimizes for system completion rather than organizational impact.

At this level:

- Data loads correctly and reliably

- Queries perform within acceptable limits

- Infrastructure is stable and scalable

These outcomes matter. Without them, nothing else is possible.

But technical success answers only one question:

“Does the system work?”

It does not answer:

“Does the system matter?”

Technical success is necessary, but not sufficient.

Level 2: Analytical Success

Fewer engagements reach this level.

Here, the focus shifts from systems to meaning:

- Metrics are calculated consistently

- Reports reconcile across teams

- Definitions are shared and understood

This level reduces noise. It eliminates many of the everyday frustrations analysts and stakeholders face.

But even analytical success can fall short.

You can have consistent reports that are:

- Carefully reviewed but rarely used

- Trusted in theory but avoided in practice

- Accurate but disconnected from decision-making

Consistency alone doesn’t guarantee impact. Alignment without adoption improves reporting quality but does not change how decisions are made.

Level 3: Organizational Success

This is where Data Warehouse Consulting actually delivers value.

At this level:

- Decisions rely on the warehouse by default

- Reconciliation work largely disappears

- Meetings focus on action, not validation

- Confidence replaces debate

The warehouse becomes a decision system, not just a reporting platform.

This level reflects real behavioral change:

- People stop asking “Is this right?”

- They start asking “What should we do?”

Why Most Engagements Never Reach Level 3

The gap between analytical success and organizational success is rarely technical. It is structural.

Reaching Level 3 requires:

- Explicit ownership

- Stable semantics

- Leadership involvement

- Clear success metrics beyond delivery

Many engagements avoid this work because it’s:

- Politically uncomfortable

- Harder to quantify

- Outside traditional engineering scope

So they stop at Level 1, or occasionally Level 2, and declare success.

Leadership senses the gap.

The warehouse works, but it doesn’t change anything.

Measuring success across all three levels is the only way to know whether Data Warehouse Consulting actually delivered what it promised.

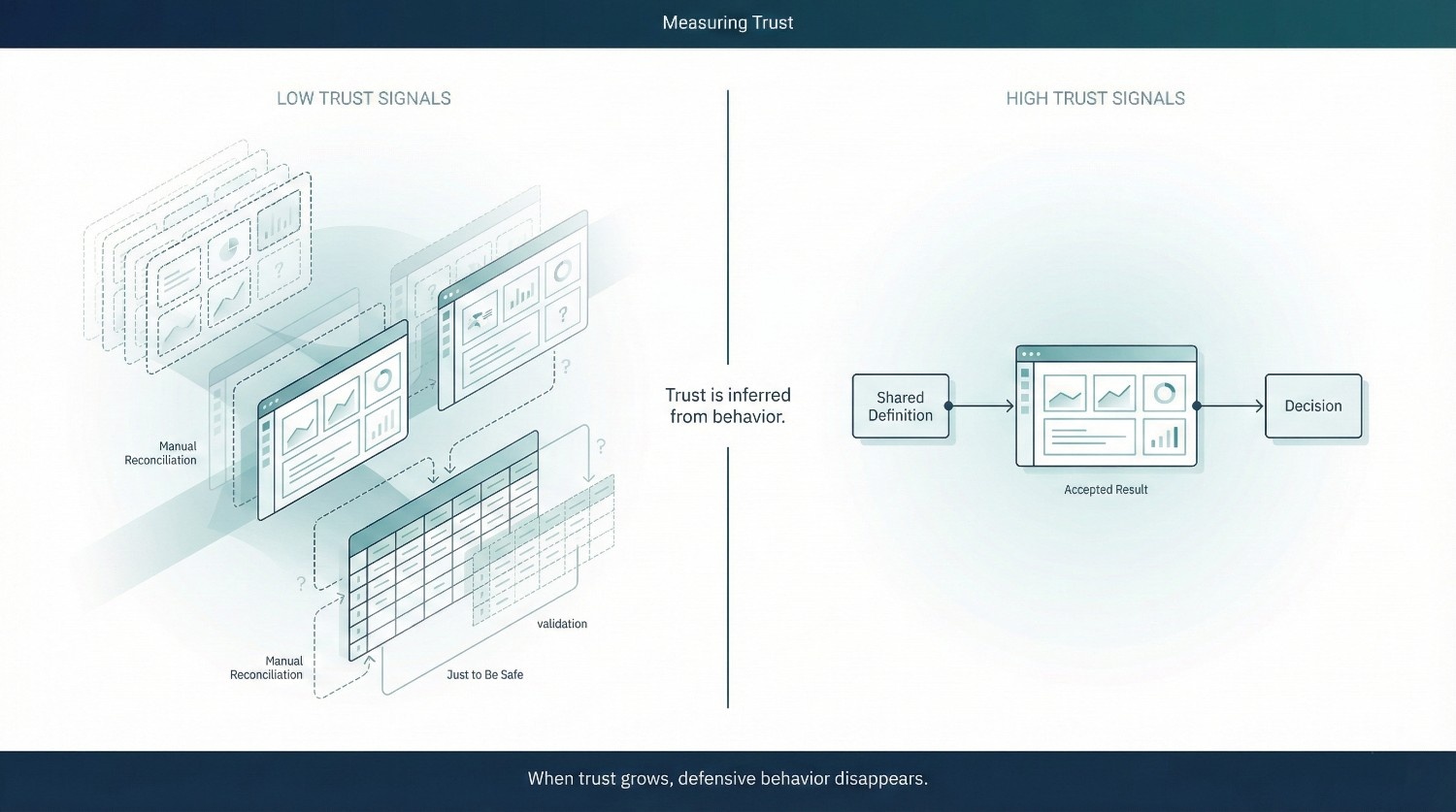

Measuring Trust

Trust is the most important outcome of Data Warehouse Consulting, and the one most teams never measure.

Not because it doesn’t matter, but because it’s uncomfortable and hard to quantify.

Yet trust leaves unmistakable traces in behavior. These traces appear before adoption metrics improve and long before leaders explicitly say they trust the system.

Behavioral Indicators of Trust

You don’t measure trust by surveying people or asking how they feel.

You measure it by watching what stops happening. The absence of defensive behavior is often the earliest indicator that trust is forming.

Strong signals include:

- Fewer “Which number is right?” conversations

Disputes over basic metrics decline. Questions shift from validation to interpretation and action.

- Executives stop asking for caveats

Presentations no longer open with disclaimers. Numbers are referenced without defensive explanations. When caveats disappear, it usually means leadership is willing to be accountable for decisions made using the data.

- Reports are reused, not rebuilt

Teams stop creating their own versions “just to be safe.” Existing reports become shared assets instead of temporary artifacts. Reuse signals confidence that shared definitions will hold across contexts and audiences.

- Teams accept numbers even when they’re inconvenient

This is the strongest signal. When people accept results that don’t support their preferred narrative, trust exists.

These behaviors are observable, repeatable, and far more meaningful than any performance benchmark.

Why Trust Can’t Be Measured Directly

Trust isn’t a single metric you can instrument.

It doesn’t live in logs or dashboards.

It lives in:

- Meetings

- Decisions

- Escalations

- Workarounds that disappear

That makes it invisible to traditional reporting, but obvious to anyone paying attention. Teams that work closely with the business often sense trust shifts weeks or months before metrics reflect them.

Why Trust Is Usually Ignored

Trust is often ignored because:

- No single team “owns” it

- It requires leadership involvement

- It exposes unresolved organizational issues

It’s easier to point to query latency than to ask why finance still runs reconciliations.

But ignoring trust doesn’t make it optional.

It just makes failure quieter.

The Core Insight

When trust increases:

- Reconciliation work declines

- Decision speed improves

- Data becomes a default input, not a debate starter

When trust doesn’t improve, no amount of technical success will compensate.

If Data Warehouse Consulting doesn’t measurably increase trust, it hasn’t succeeded, no matter how impressive the technology looks.

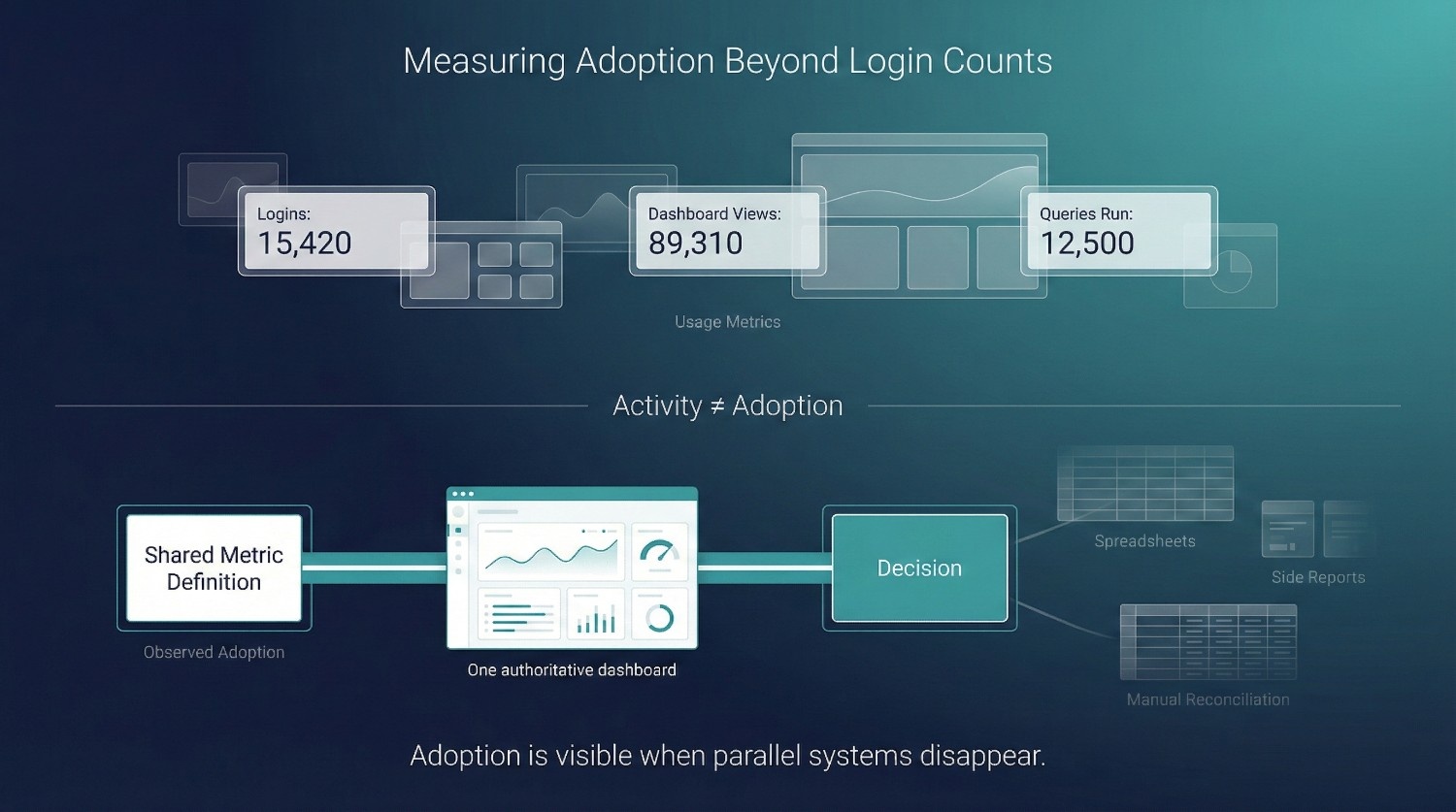

Measuring Adoption Beyond Login Counts

Adoption is often measured, but almost always measured poorly.

Most teams point to:

- Dashboard views

- User logins

- Query counts

These numbers look reassuring because they show activity.

They do not show impact. Activity can exist without belief, and belief is what changes behavior.

Why Usage Metrics Are Deceptive

A dashboard being opened does not mean it was trusted.

A query being run does not mean it informed a decision. In many teams, queries are executed as a defensive step rather than a decision input.

Common failure modes include:

- Dashboards opened to extract data, not to act on insights. This usually signals that the warehouse is treated as a data source, not a decision surface.

- Logins driven by obligation, not preference

- Queries run to validate numbers elsewhere, not to rely on them

In these cases, usage is high, but confidence is low.

This is how organizations convince themselves adoption is improving while behavior stays unchanged.

What Real Adoption Actually Looks Like

Adoption shows up outside analytics tools.

Better signals include:

- Which meetings reference warehouse data

Meetings reveal adoption more honestly than usage logs because they expose what people are willing to stand behind publicly.

- Which decisions explicitly rely on common definitions

Product bets, budget decisions, and forecasts reference the same numbers across teams.

- Which spreadsheets quietly disappear

This is the strongest indicator. When shadow spreadsheets stop being maintained, trust has replaced hedging.

- Who stops asking for “one more check”

Decisions move forward without parallel validation or follow-up reconciliation.

These behaviors are harder to count, but far more honest.

Why Adoption Must Be Observed, Not Assumed

Adoption is not a toggle you flip at go-live.

It emerges when:

- Semantics are stable

- Ownership is clear

- Changes are predictable

- Using the warehouse feels safe

Without those conditions, people may access the system while still avoiding reliance on it.

The Key Insight

If adoption is measured only by logins, every consulting engagement looks successful.

If adoption is measured through behavioral change, many engagements look unfinished. This is why adoption metrics often flatter delivery teams while misleading leadership.

Data Warehouse Consulting succeeds when:

- The warehouse becomes the default reference

- Parallel systems fade away

- Decisions move faster with less debate

Anything less is activity, not adoption.

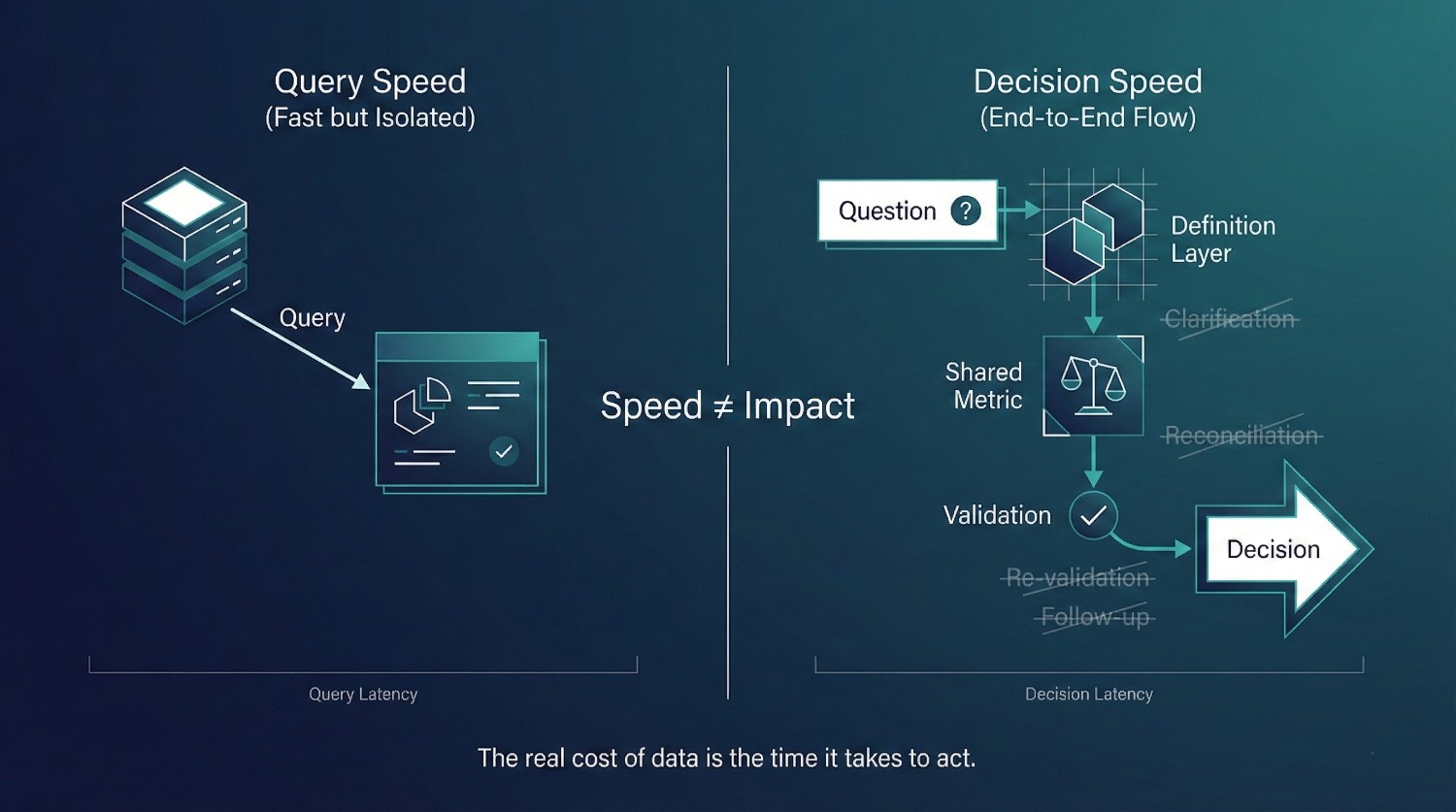

Measuring Decision Speed (Not Query Speed)

One of the most revealing metrics in Data Warehouse Consulting has little to do with how fast a query runs.

It’s how quickly the organization can reach a decision.

Query Latency vs. Decision Latency

Query latency measures:

- How long the database takes to return results

Decision latency measures:

- How long it takes to move from a question to a confident action

Between those two points sits most of the real organizational cost:

- Clarifying definitions

- Reconciling numbers

- Scheduling follow-ups

- Re-running analyses to “be sure”

A system can return results in milliseconds and still take weeks to produce a decision. This hidden time rarely appears on a bill, but it quietly compounds across teams, meetings, and missed opportunities.

What Decision Speed Actually Captures

Decision speed reflects whether the warehouse is doing its real job.

Look at:

- Time from question to answer to action

How quickly can leadership ask a question, get a number, and act without hesitation?

- Reduction in follow-up queries

Fewer “Can you break this down another way?” requests signal that answers are trusted.

- Fewer clarification meetings

When meetings stop being about validating numbers and start being about outcomes, decision speed has improved.

- Shorter reconciliation cycles

When finance, product, and analytics stop cross-checking each other, friction has been removed.

Each of these reductions represents time returned to the organization. That reclaimed time is where the economic value of data platforms actually materializes.
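Decision latency is rarely logged anywhere, but it can be approximated. The minimal Python sketch below assumes a team keeps a lightweight log of business questions, recording when each was raised, when (if ever) a decision was committed, and how many clarification meetings and follow-up queries it took. The `DecisionRecord` schema, its field names, and the sample questions are hypothetical illustrations, not features of any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List, Optional


@dataclass
class DecisionRecord:
    """One business question, tracked from first ask to committed action (hypothetical schema)."""
    question: str
    asked_at: datetime
    acted_at: Optional[datetime]  # None while the decision is still open
    clarification_meetings: int = 0
    follow_up_queries: int = 0


def decision_latency_days(records: List[DecisionRecord]) -> Optional[float]:
    """Median days from question to action, counting only decisions actually taken."""
    closed = [
        (r.acted_at - r.asked_at).total_seconds() / 86400
        for r in records
        if r.acted_at is not None
    ]
    return median(closed) if closed else None


def avg_friction(records: List[DecisionRecord]) -> float:
    """Average clarification meetings plus follow-up queries per tracked question."""
    if not records:
        return 0.0
    return sum(r.clarification_meetings + r.follow_up_queries for r in records) / len(records)


# Hypothetical samples: one quarter early in the engagement, one later.
before = [
    DecisionRecord("Pause EMEA campaign?", datetime(2024, 1, 2), datetime(2024, 1, 16), 3, 4),
    DecisionRecord("Raise Q2 forecast?", datetime(2024, 1, 10), datetime(2024, 2, 4), 4, 6),
    DecisionRecord("Sunset legacy plan?", datetime(2024, 2, 1), None, 2, 3),
]
after = [
    DecisionRecord("Expand APAC pilot?", datetime(2024, 7, 1), datetime(2024, 7, 4), 0, 1),
    DecisionRecord("Adjust Q4 forecast?", datetime(2024, 7, 8), datetime(2024, 7, 13), 1, 1),
]

print(decision_latency_days(before), decision_latency_days(after))  # 19.5 4.0
print(round(avg_friction(before), 2), avg_friction(after))  # 7.33 1.5
```

The median is used rather than the mean so that one stalled decision does not dominate the trend. Open questions are excluded from latency but still count toward friction, since they consumed effort.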

Why This Is the True ROI of Data Warehouse Consulting

The real cost of poor data systems isn’t slow queries. It’s slow decisions.

Every extra:

- Validation step

- Reconciliation meeting

- Defensive explanation

is organizational drag.

Data Warehouse Consulting delivers ROI when it:

- Collapses uncertainty

- Reduces debate

- Enables faster commitment

Those gains compound daily.

Unlike query performance, decision speed:

- Reflects trust

- Reflects clarity

- Reflects ownership

If decision latency drops, value is being created, even if query speed hasn’t changed much.

The Bottom Line

If you want to measure whether Data Warehouse Consulting worked, don’t ask:

“How fast are the queries?”

Ask:

“How long does it take us to confidently act on the data now?”

When that time shrinks, the consulting has delivered real, durable value.

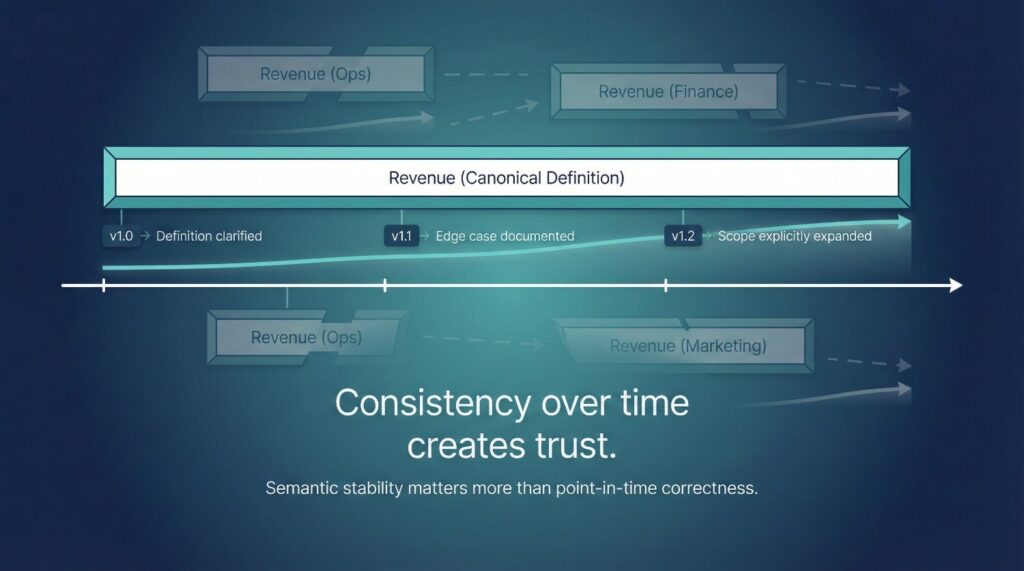

Measuring Semantic Stability

One of the clearest indicators of success in Data Warehouse Consulting is something most teams never track: semantic stability.

Not whether metrics are correct in theory, but whether their meaning stays stable long enough to be trusted. Trust depends less on correctness at a point in time and more on consistency over time.

The Right Questions to Ask

Instead of asking how many models were built, ask:

- How often do core metric definitions change?

Frequent changes signal unresolved ambiguity or shifting ownership.

- Are changes intentional or reactive?

Intentional changes are planned, communicated, and justified. Reactive changes happen after complaints or surprises.

- Is there a visible process for change?

Can anyone explain how a metric gets updated, who approves it, and how users are informed?

These questions reveal whether meaning is being actively managed or improvised. Improvised semantics force users to relearn the system repeatedly, which quietly erodes confidence.
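If definition changes are recorded somewhere, even in a spreadsheet, the first two questions can be answered from that record rather than from memory. The sketch below assumes a hypothetical change log in which each entry notes the metric, the date, whether the change was planned in advance, and whether users were warned before it took effect; none of these fields come from a specific tool.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class DefinitionChange:
    """One change to a core metric definition (hypothetical change-log entry)."""
    metric: str
    changed_on: date
    planned: bool    # approved and scheduled in advance, rather than made after a complaint
    announced: bool  # users were warned before the new definition took effect


def churn_by_metric(changes: List[DefinitionChange], start: date, end: date) -> Counter:
    """Count definition changes per metric within the inclusive window [start, end]."""
    return Counter(c.metric for c in changes if start <= c.changed_on <= end)


def reactive_share(changes: List[DefinitionChange]) -> float:
    """Fraction of changes that were reactive (not planned)."""
    if not changes:
        return 0.0
    return sum(not c.planned for c in changes) / len(changes)


def unannounced_share(changes: List[DefinitionChange]) -> float:
    """Fraction of changes that reached users without advance warning."""
    if not changes:
        return 0.0
    return sum(not c.announced for c in changes) / len(changes)


# Hypothetical entries for illustration only.
log = [
    DefinitionChange("active_customers", date(2024, 3, 4), planned=False, announced=False),
    DefinitionChange("active_customers", date(2024, 3, 18), planned=False, announced=False),
    DefinitionChange("net_revenue", date(2024, 3, 11), planned=True, announced=True),
    DefinitionChange("active_customers", date(2024, 4, 2), planned=True, announced=False),
]

print(churn_by_metric(log, date(2024, 3, 1), date(2024, 3, 31)))
print(reactive_share(log), unannounced_share(log))  # 0.5 0.75
```

A metric that keeps showing up with reactive, unannounced changes is a concrete sign of improvised semantics; the count alone says nothing about whether each change was justified.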

Why Semantic Stability Matters

When semantics are unstable:

- Historical comparisons break

- Trust erodes quietly

- Business users hesitate to rely on data

- Every decision feels provisional

People stop asking what the data says and start asking what changed this time.

That behavior destroys confidence, even when numbers are technically correct.

What Stability Looks Like in Practice

A stable semantic layer doesn’t mean metrics never change.

It means:

- Changes are deliberate, not defensive

- Definitions are versioned and documented

- Users are warned before behavior shifts

- Ownership is clear when questions arise

Stability builds trust faster than perfection. Users will tolerate known limitations far more readily than unexpected changes.

Why This Is a Major Success Signal

When Data Warehouse Consulting works:

- Semantic churn drops

- Disputes decrease

- Users stop bracing for surprises

The warehouse starts to feel predictable, and predictability is the foundation of adoption.

If metric definitions are still changing weekly with no clear process, technical success hasn’t translated into organizational success.

Semantic stability is not a passive side effect of good engineering.

It is a deliberate outcome, and one of the strongest signals that consulting actually delivered value.

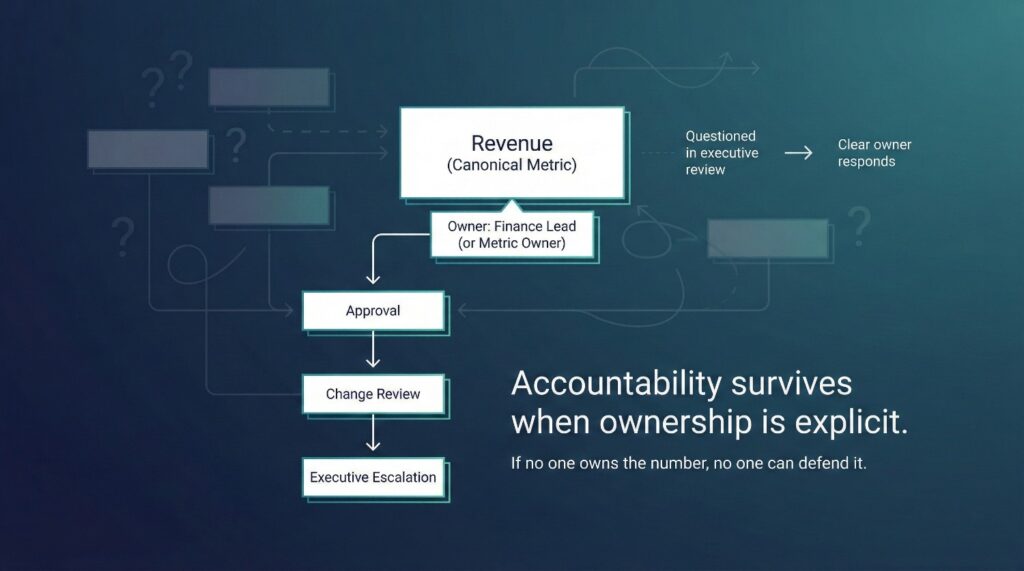

Measuring Ownership and Accountability

Ownership is one of the most durable outcomes Data Warehouse Consulting can deliver, and one of the easiest to test.

You don’t need dashboards or instrumentation to measure it.

You just need to ask the right questions. Ownership reveals itself fastest under pressure, not in documentation.

Signs That Ownership Actually Exists

Ownership is real when:

- Every core metric has a named owner

Not a team or a role, but an individual who is responsible for its definition and behavior.

- Disputes escalate cleanly

When numbers are challenged, there is a known escalation path instead of endless debate or backchannel negotiation. Clean escalation prevents political negotiation from being mistaken for analysis.

- Changes have explicit approval

Metric updates don’t “just happen.” They are reviewed, approved, and communicated by the owner.

If these conditions are present, accountability is functioning, even if the system isn’t perfect.

Why This Matters More Than Code

Code can be rewritten.

Models can be refactored.

Tools can be replaced.

Ownership, once established, tends to persist. Decision rights embedded in people outlast tools, processes, and org charts.

When ownership is clear:

- Engineers stop acting as referees

- Analysts stop defending logic they don’t own

- Business users know where to go with questions

- Trust survives personnel changes

This is why ownership clarity often outlasts consultants, and why it matters more than any specific technical artifact.

How to Measure Ownership in Practice

A simple test:

“If this metric is questioned in an executive meeting, who is responsible for answering?”

Hesitation or deflection at this moment is a reliable signal that ownership was never truly assigned.

Another test:

“Who approves changes to this metric, and how is that decision communicated?”

If there is no consistent answer, accountability is missing.
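Both tests are conversations, but their answers can be written down. Assuming the organization keeps a simple registry of core metrics, a short script can list which metrics would fail them. The `MetricEntry` fields, the `TEAM_ALIASES` set, and the sample entries are hypothetical; the point is the check, not the schema.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical owner values that name a team or role rather than a person.
TEAM_ALIASES = {"finance", "analytics", "data team", "bi"}


@dataclass
class MetricEntry:
    """Registry entry for one core metric (hypothetical fields)."""
    name: str
    owner: Optional[str]            # should be an individual, not a team
    approver: Optional[str]         # who signs off on definition changes
    escalation_path: Optional[str]  # where disputes go when the number is challenged


def ownership_gaps(registry: List[MetricEntry]) -> Dict[str, List[str]]:
    """Map each metric that fails a check to the list of checks it fails."""
    gaps: Dict[str, List[str]] = {}
    for m in registry:
        problems = []
        if not m.owner or m.owner.strip().lower() in TEAM_ALIASES:
            problems.append("no named individual owner")
        if not m.approver:
            problems.append("no change approver")
        if not m.escalation_path:
            problems.append("no escalation path")
        if problems:
            gaps[m.name] = problems
    return gaps


# Hypothetical registry entries.
registry = [
    MetricEntry("net_revenue", "A. Rivera", "A. Rivera", "Finance data council"),
    MetricEntry("active_customers", "Analytics", None, None),
    MetricEntry("churn_rate", "M. Chen", "M. Chen", None),
]

for metric, problems in ownership_gaps(registry).items():
    print(f"{metric}: {', '.join(problems)}")
```

Matching owner names against a list of team aliases is a crude heuristic. A clean registry is necessary but not sufficient; the real test is still whether the named person answers when the metric is questioned.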

The Long-Term Signal

Successful Data Warehouse Consulting leaves behind:

- Decision rights

- Escalation paths

- Accountability structures

These persist long after consultants leave.

Durable ownership is the clearest indicator that the organization absorbed the engagement rather than outsourcing responsibility.

Ownership is not a governance detail.

It’s the backbone of a warehouse that people trust, use, and defend.

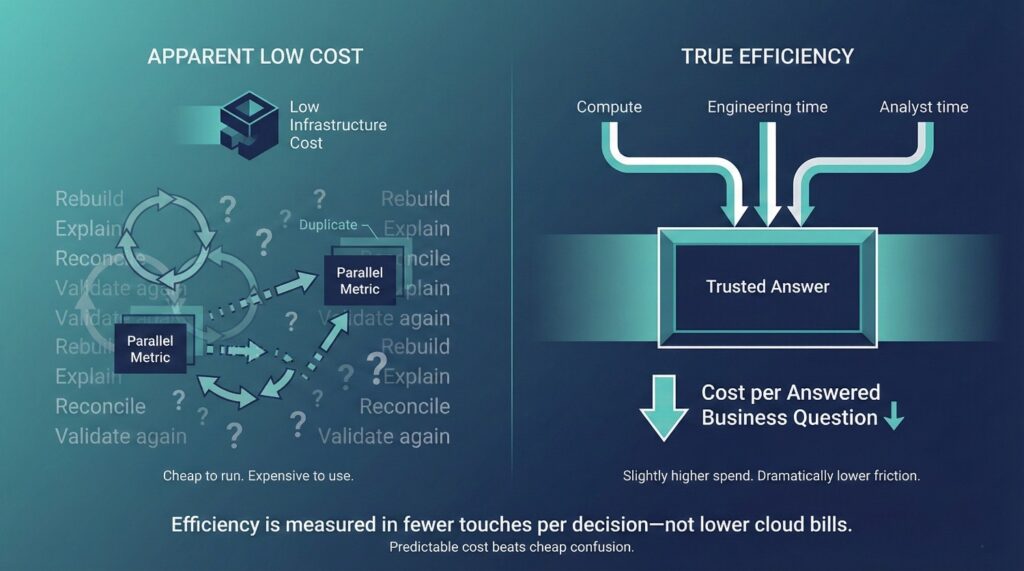

Measuring Cost and Efficiency (The Right Way)

Cost is one of the most misunderstood dimensions of Data Warehouse Consulting.

Most teams track it narrowly, looking at cloud spend, warehouse credits, or infrastructure bills. Those numbers matter, but on their own they tell an incomplete and often misleading story.

The real question is not:

“How much does the warehouse cost?”

It’s:

“What does it cost us to get a reliable answer that the business is willing to act on?”

Reliability includes confidence, repeatability, and the absence of downstream correction work.

What to Measure Instead

If you want to evaluate efficiency honestly, focus on these signals.

Cost per answered business question

How much total effort, including compute, engineering time, and analyst time, does it take to answer a meaningful business question that leadership actually acts on?

When consulting works, this cost drops sharply because:

- Definitions are stable

- Reconciliation is reduced

- Answers don’t need repeated validation
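The arithmetic behind cost per answered business question is simple once effort is tracked per question. The sketch below assumes hypothetical fields for attributed compute spend and hours, plus blended hourly rates supplied by the reader. One deliberate choice: effort spent on questions that never led to action stays in the numerator, because that wasted work is part of what it costs to get an answer the business will act on.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QuestionCost:
    """Effort spent on one business question (hypothetical tracking fields)."""
    compute_cost: float       # warehouse spend attributed to the question
    engineering_hours: float
    analyst_hours: float
    acted_on: bool            # did leadership act on the answer?


def cost_per_answered_question(
    items: List[QuestionCost], engineer_rate: float, analyst_rate: float
) -> float:
    """Total effort across all questions divided by the number that led to action."""
    total = sum(
        q.compute_cost + q.engineering_hours * engineer_rate + q.analyst_hours * analyst_rate
        for q in items
    )
    acted = sum(q.acted_on for q in items)
    # If nothing was acted on, all effort was spent without producing an actionable answer.
    return total / acted if acted else float("inf")


# Hypothetical quarter of tracked questions.
quarter = [
    QuestionCost(compute_cost=40.0, engineering_hours=6, analyst_hours=10, acted_on=True),
    QuestionCost(compute_cost=25.0, engineering_hours=2, analyst_hours=8, acted_on=False),
    QuestionCost(compute_cost=15.0, engineering_hours=0, analyst_hours=3, acted_on=True),
]

print(cost_per_answered_question(quarter, engineer_rate=100, analyst_rate=80))  # 1280.0
```

In this made-up quarter, compute accounts for 80 of the 2,560 total, a small share of the real cost of getting answers the business acts on.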

Engineering hours spent on reactive fixes

Track how much time engineers and analysts spend:

- Explaining numbers

- Rebuilding models due to disputes

- Supporting one-off reconciliations

- Responding to “why doesn’t this match?” requests

A successful engagement shifts effort from firefighting to forward progress. When this shift happens, cost reduction follows naturally rather than through enforcement.
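Tracking this shift does not require new tooling if time is already logged against tasks. Assuming each entry carries a month, a category tag, and hours, the reactive share per month can be computed as below. The tag names in `REACTIVE_TAGS` are hypothetical stand-ins for the four kinds of reactive work listed above.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical tags for explaining numbers, dispute-driven rebuilds,
# one-off reconciliations, and "why doesn't this match?" requests.
REACTIVE_TAGS = {"explain_numbers", "dispute_rebuild", "reconciliation", "mismatch_request"}


def reactive_share_by_month(entries: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """Given (month 'YYYY-MM', tag, hours) entries, return each month's reactive fraction of hours."""
    totals: Dict[str, float] = defaultdict(float)
    reactive: Dict[str, float] = defaultdict(float)
    for month, tag, hours in entries:
        totals[month] += hours
        if tag in REACTIVE_TAGS:
            reactive[month] += hours
    # Months are sorted so the trend reads chronologically; months with zero hours are skipped.
    return {m: reactive[m] / totals[m] for m in sorted(totals) if totals[m] > 0}


# Hypothetical time-log entries.
time_log = [
    ("2024-01", "reconciliation", 30),
    ("2024-01", "explain_numbers", 10),
    ("2024-01", "new_model", 40),
    ("2024-06", "mismatch_request", 6),
    ("2024-06", "new_model", 54),
]

print(reactive_share_by_month(time_log))  # {'2024-01': 0.5, '2024-06': 0.1}
```

The trend matters more than any single month: a falling reactive share is the shift from firefighting to forward progress, expressed in hours.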

Reduction in duplicated work

Look for fewer:

- Parallel dashboards

- Duplicate metric logic

- Team-specific extracts

- Shadow pipelines

Duplication is a hidden cost. It compounds quietly because each parallel system appears locally rational but globally wasteful.

What Not to Over-Index On

Raw infrastructure cost alone is a trap.

A warehouse with lower infrastructure spend that:

- Requires constant reconciliation

- Consumes hours of manual effort

- Slows decisions

is more expensive than a slightly higher cloud bill attached to a trusted, stable system.

Infrastructure spend is visible.

Organizational inefficiency is not, but it’s usually far larger. Most cost overruns blamed on platforms are actually symptoms of unresolved coordination problems.

The Real Efficiency Signal

Efficiency improves when:

- Fewer people touch the same problem repeatedly

- Answers are reused instead of rebuilt

- Engineering time moves from reactive to strategic

When Data Warehouse Consulting works, cost becomes more predictable, not just lower.

That predictability is what allows organizations to scale data usage without scaling chaos.

Short-Term vs Long-Term Success Signals

One of the biggest mistakes leaders make when evaluating Data Warehouse Consulting is expecting all value to appear immediately.

Some outcomes appear quickly.

Others become visible only after behavior has had time to change.

Knowing the difference prevents premature disappointment as well as false confidence.

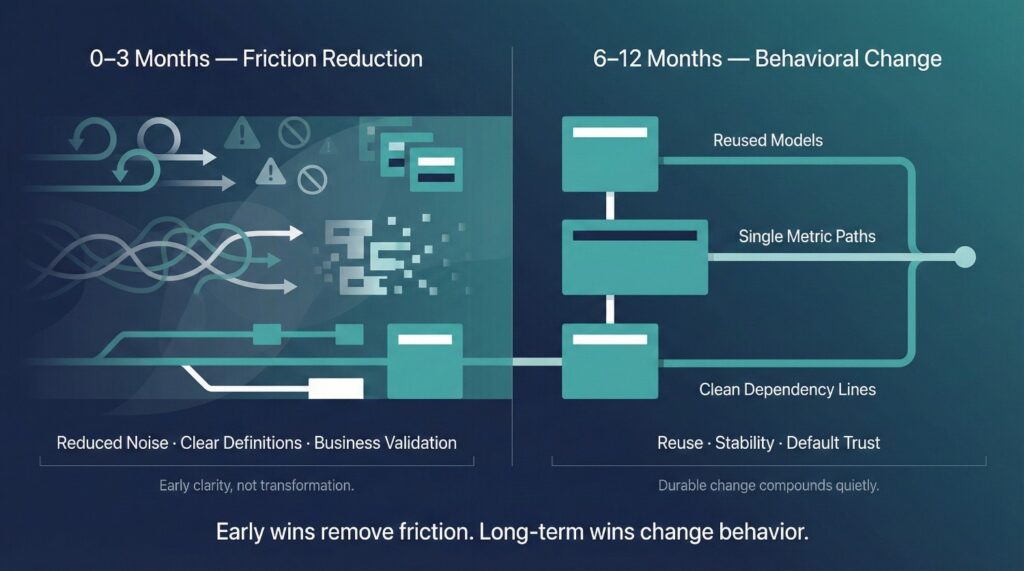

Short-Term Signals (First 0–3 Months)

In the early phase, success shows up as reduced friction rather than dramatic transformation. Reduced friction signals that decision-making barriers are being addressed, even if outcomes have not fully stabilized.

Look for:

- Reduced reconciliation noise

Fewer “why doesn’t this match?” messages, fewer emergency checks before meetings, and fewer parallel validations running in the background.

- Clear definitions published

The presence of documented definitions matters less than whether people actually reference them in conversations and decisions.

- Business participation in validation

Business stakeholders are involved in reviewing definitions and outputs, not just signing off at the end.

These signals indicate that the engagement is changing how conversations happen, not just what gets delivered.

If none of these appear within the first few months, the work is likely stalled at technical or analytical success rather than organizational success.

Long-Term Signals (6–12 Months)

Long-term success is quieter, but far more meaningful.

Look for:

- Fewer shadow systems

Spreadsheets, team-specific dashboards, and one-off extracts gradually disappear because they’re no longer needed.

- A stable reporting layer

Core metrics stop changing frequently. When changes do occur, they are intentional, approved, and communicated.

- New initiatives build on existing models

Teams reuse established definitions and structures instead of starting from scratch for every new question.

This is the strongest signal of success: the organization trusts the warehouse enough to extend it rather than work around it.

Why This Distinction Matters

Short-term signals show whether the engagement has started on the right path.

Long-term signals show whether the organization has truly absorbed the change into daily behavior.

Data Warehouse Consulting succeeds when early clarity compounds into durable behavior. Without compounding, early wins decay into temporary alignment rather than lasting change.

If you only measure early delivery, or only wait for long-term transformation, you miss the full picture.

Real success is visible across both horizons.

Red Flags That Indicate Failure (Even If the Project “Finished”)

Some Data Warehouse Consulting engagements technically conclude on time, on scope, and on budget, and still fail.

The failure is rarely loud.

It often looks like success because delivery milestones were met and visible artifacts were produced.

If you hear the following signals after “go-live”, the engagement is likely already failing, regardless of how complete it looks on paper.

“We’ll Fix Definitions Later”

This is the most common red flag.

It suggests:

- Semantic disagreements were deferred, not resolved

- Ownership was never fully established

- Temporary compromises became permanent

In practice, “later” rarely arrives. Definitions continue to shift reactively, and trust never stabilizes.

If meaning wasn’t settled during the engagement, it won’t settle itself afterward. Ambiguity that survives delivery almost always hardens into long-term disagreement rather than resolving organically.

“The Business Will Adapt Over Time”

This phrase assumes adoption is inevitable.

It isn’t.

When it appears, it usually means:

- Business teams were not meaningfully involved in validation

- Changes were explained after the fact

- Trust-building was treated as optional

What actually happens is quiet resistance: spreadsheets persist, dashboards are ignored, and parallel logic continues.

Adaptation doesn’t happen without safety, clarity, and ownership. Without those conditions, business users rationally protect themselves by maintaining parallel systems and informal workarounds.

Parallel Dashboards Persist

If multiple versions of the same metric continue to exist months after delivery, the system has not converged.

This indicates:

- No authoritative source of truth

- No enforcement of shared definitions

- No consequence for divergence

Parallel dashboards are not a temporary phase. They are a structural failure mode.

Consultants Leave With Critical Knowledge

This is one of the most damaging signs.

If:

- Rationale for decisions lives in people’s heads

- Only consultants can explain why logic exists

- Internal teams hesitate to change anything

Then the engagement transferred code, but not capability.

The warehouse becomes fragile the moment the consultants leave.

The Hard Truth

When these red flags are present, the problem is not execution quality.

It’s that:

- Decisions were avoided

- Ownership was incomplete

- Trust was never operationalized

The project may be finished, but the work is not. What remains unresolved is not technical debt, but decision debt.

True success in Data Warehouse Consulting is measured by what continues to work after the consultants are gone.

If clarity, ownership, and confidence disappear with them, the engagement didn’t fail loudly. It failed quietly.

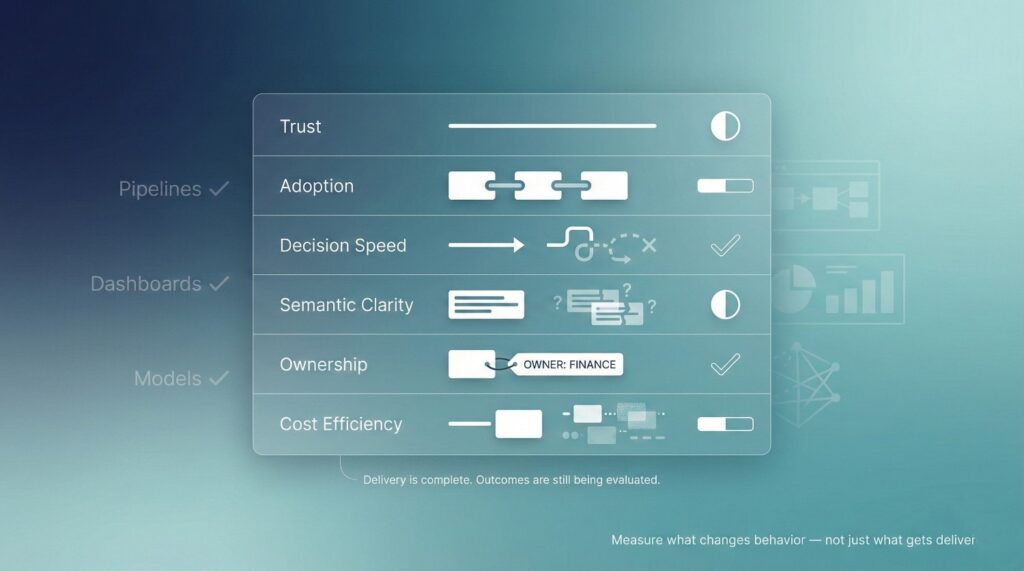

A Practical Scorecard for Leaders

One of the most effective ways to evaluate Data Warehouse Consulting is to step back from delivery artifacts and assess outcomes across a small set of dimensions that reflect how the organization actually operates.

This scorecard isn’t meant to be mathematically precise or mechanically scored.

It’s meant to force honest reflection rather than optimistic reporting.

Core Dimensions to Evaluate

Trust

- Are numbers used without caveats?

- Do disagreements resolve quickly, or linger?

- Are teams willing to accept results even when inconvenient?

Trust is often the first dimension to erode and the last to recover, which makes early detection especially valuable.

Adoption

- Is the warehouse the default reference in meetings?

- Are shared reports reused instead of rebuilt?

- Are shadow spreadsheets disappearing?

Adoption failures are rarely loud. They show up as quiet workarounds rather than explicit rejection.

Decision Speed

- How long does it take to go from question to action?

- Has reconciliation work declined?

- Are follow-up meetings decreasing?

Semantic Clarity

- Are metric definitions stable over time?

- Is there a clear process for changing them?

- Do teams share the same meaning for core metrics?

Ownership

- Does every critical metric have a named owner?

- Is there a clean escalation path for disputes?

- Are changes explicitly approved and communicated?

Cost Efficiency

- Is less time spent on ad-hoc fixes and explanations?

- Has duplicated work been reduced?

- Is cost becoming more predictable, not just lower?

How to Use This Scorecard

As a mid-engagement check

Use it to identify blind spots early. If technical delivery is progressing but trust, ownership, or adoption scores are stagnant, the engagement needs to be redirected—before it’s too late.

As a post-engagement review

Revisit the scorecard three to six months after consultants exit. The most important question is not what was delivered, but what behavior changed. Durable value only exists if those behaviors persist without consultant involvement.
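Because the scorecard is deliberately a judgment tool, any numbers attached to it are agreed ratings, not measurements. Still, recording a rating per dimension at each checkpoint makes stagnation hard to ignore. The sketch below assumes a hypothetical 1 to 5 rating per dimension, a minimum expected gain between checkpoints, and a floor below which a dimension needs attention; all three are assumptions to adjust, not recommended values.

```python
from typing import Dict, List

DIMENSIONS = ["trust", "adoption", "decision_speed", "semantic_clarity", "ownership", "cost_efficiency"]


def review(
    earlier: Dict[str, int], later: Dict[str, int], min_gain: int = 1, floor: int = 3
) -> Dict[str, List[str]]:
    """Compare two scorecard snapshots (hypothetical 1-5 ratings agreed in a review session)."""
    return {
        # Dimensions that did not improve by at least min_gain between checkpoints.
        "stagnant": [d for d in DIMENSIONS if later[d] - earlier[d] < min_gain],
        # Dimensions still rated below the floor at the later checkpoint.
        "below_floor": [d for d in DIMENSIONS if later[d] < floor],
    }


# Hypothetical ratings at kickoff and at the mid-engagement check.
kickoff = {"trust": 2, "adoption": 2, "decision_speed": 2,
           "semantic_clarity": 1, "ownership": 1, "cost_efficiency": 3}
midpoint = {"trust": 2, "adoption": 3, "decision_speed": 3,
            "semantic_clarity": 3, "ownership": 1, "cost_efficiency": 3}

print(review(kickoff, midpoint))
```

In this invented example, trust and ownership are both stagnant and below the floor, which is the pattern a mid-engagement check is meant to catch: delivery can be progressing while the organizational dimensions stand still.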

The Key Insight

You don’t need perfection across every dimension for the engagement to be valuable.

But if most categories score poorly, the consulting engagement hasn’t delivered durable value—no matter how impressive the technology looks.

A warehouse that works technically but scores low on trust, adoption, and decision speed has not succeeded in changing how decisions are made.

This scorecard gives leaders a way to measure what actually matters—without hiding behind vanity metrics or delivery checklists.

Used consistently, it turns Data Warehouse Consulting from a leap of faith into a measurable organizational investment with observable outcomes.

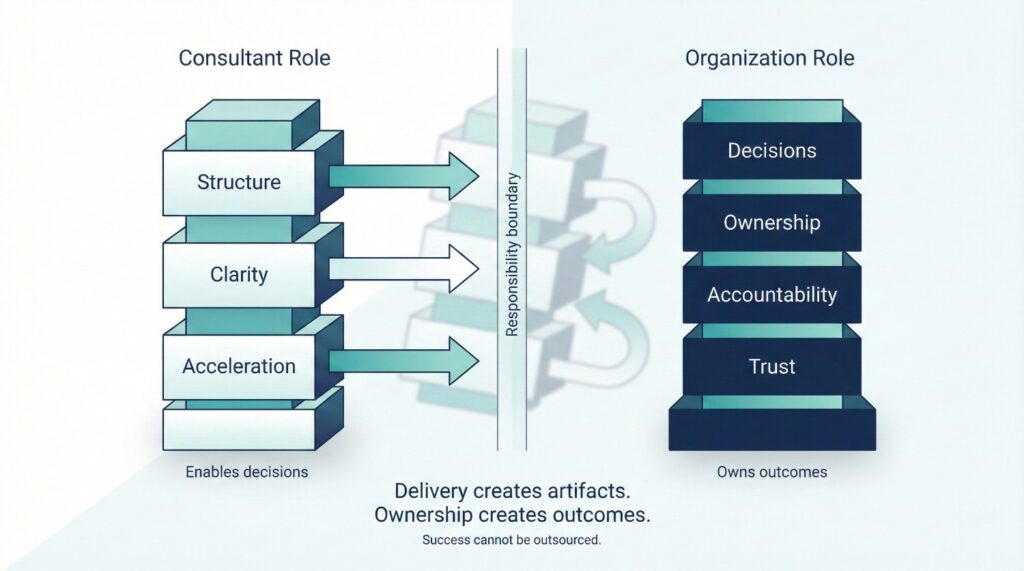

The Consultant’s Role vs the Organization’s Role

One of the most common reasons Data Warehouse Consulting fails is a quiet misunderstanding about who is responsible for what.

Consultants are often expected to “deliver success.”

Organizations assume success will follow delivery.

That assumption is wrong. Delivery creates artifacts. Ownership creates outcomes. Confusing the two is how organizations end up with functioning systems that no one is willing to defend.

What Consultants Can Enable

Good consultants create leverage by enabling things that are hard to do internally once complexity grows. The value of consulting is not additional capacity. It is compression of ambiguity into decisions the organization can no longer postpone.

They can provide:

- Structure: clear frameworks for semantics, ownership, governance, and operating models, especially where none existed before.

- Clarity: making assumptions explicit, surfacing hidden disagreements, and forcing decisions that organizations tend to postpone.

- Acceleration: moving work forward by reducing ambiguity, highlighting trade-offs, and preventing teams from rebuilding the same logic repeatedly.

Consultants are effective when they shape how decisions are made, not when they try to make decisions themselves. Acceleration without decision authority simply moves unresolved conflict downstream, where it becomes harder and more expensive to fix.

What Only the Organization Can Own

There are responsibilities that cannot be outsourced, no matter how experienced the consultants are. These responsibilities persist long after the engagement ends, which is precisely why they cannot be delegated to temporary actors.

Only the organization can own:

- Decisions: metric definitions, trade-offs, risk acceptance, and prioritization are inherently business decisions.

- Trust: it comes from consistency, accountability, and leadership backing, not from external validation.

- Accountability: when numbers are questioned, the organization, not the consultant, must stand behind them.

When consultants are asked to own these things, failure is almost guaranteed. They lack authority, context, and long-term accountability.

Why Success Must Be Co-Owned

Successful Data Warehouse Consulting is not a handoff. It’s a partnership.

If either side treats the engagement as a transfer of responsibility instead of a transfer of capability, the outcome will degrade once external pressure is removed.

Consultants bring:

- External perspective

- Pattern recognition

- Decision frameworks

The organization brings:

- Authority

- Context

- Ownership

- Commitment

When either side tries to carry the full load alone, outcomes suffer.

The Core Insight

Consultants don’t “deliver” success.

They create the conditions for success.

Whether those conditions turn into lasting value depends entirely on whether the organization is willing to:

- Make decisions explicit

- Own outcomes publicly

- Carry accountability after the engagement ends

When those roles are clearly understood and respected, Data Warehouse Consulting works.

When they’re blurred, even excellent consulting quietly fails, leaving behind systems that function but don’t matter.

Final Thoughts

It’s easy to measure what ships.

Pipelines delivered.

Dashboards built.

Infrastructure deployed.

Those metrics are neat, familiar, and comforting.

They’re also incomplete. Delivery metrics measure effort. They do not measure whether the organization is now able to move with less hesitation and less internal friction.

What Real Success Looks Like

Data Warehouse Consulting succeeds when work disappears, not when more artifacts appear.

You know it’s working when:

- Reconciliation work quietly fades away

- “Which number is right?” arguments stop happening

- Meetings focus on decisions, not validation

- People trust the data enough to act on it

These changes are subtle, but they are unmistakable.

They signal that the organization has absorbed the system, not just installed it.

Why Delivery Metrics Fall Short

Delivery metrics tell you:

- What was built

- How fast it was built

- Whether it met technical requirements

They do not tell you:

- Whether behavior changed

- Whether trust increased

- Whether decision-making improved

A warehouse can be technically flawless and still fail to matter. When behavior remains unchanged, technical excellence becomes maintenance rather than progress.

The Leadership Responsibility

Leaders play a critical role in what gets measured.

If success is defined only by:

- Output

- Speed

- Completion

then consulting will optimize for those things, often at the expense of long-term value.

If success is defined by:

- Reduced friction

- Clear ownership

- Faster, more confident decisions

then consulting has a chance to deliver outcomes that last. What leaders choose to measure becomes what teams optimize for, whether or not it reflects real value.

Final Takeaway

Measure what changes, not what ships.

If measurement never moves beyond delivery, organizations confuse motion with improvement and activity with impact.

When Data Warehouse Consulting works:

- Less work is required to get answers

- Fewer arguments consume time and energy

- Confidence replaces defensiveness

If the only thing you can measure is delivery, the engagement hasn’t gone far enough.

Because real success doesn’t show up as more dashboards.

It shows up as an organization that no longer has to fight its data to move forward.

Frequently Asked Questions (FAQ)

Why isn’t delivery enough to measure success?

Delivery shows that work was completed, not that behavior changed. Pipelines and dashboards can be shipped while trust, ownership, and decision-making remain broken.

What does it mean for work to “disappear”?

It means reconciliation, manual validation, duplicate reporting, and defensive explanations are no longer needed. When work disappears, confidence has replaced friction.

How can leaders tell whether trust in the data has improved?

Look for behavioral signals:

- Fewer “which number is right?” debates

- Executives using numbers without caveats

- Reports reused instead of rebuilt

- Acceptance of results even when inconvenient

Trust is observed through behavior, not surveys.

Isn’t it risky to rely on outcomes that are hard to quantify?

It’s riskier not to. If leaders only measure what’s easy, they miss the factors that determine whether the warehouse is used or ignored. These signals are harder to quantify but far more predictive of value.

How long does it take to see results?

Early signals (within 1–3 months) include reduced reconciliation noise and clearer definitions. Durable success (6–12 months) shows up as fewer shadow systems, stable semantics, and faster decisions.

Can consultants guarantee organizational success?

No. Consultants can enable structure and clarity, but leadership must own decisions, accountability, and trust. Without visible leadership backing, organizational success stalls.

When should leaders worry that an engagement is failing?

When consultants leave with critical knowledge, or when teams say “we’ll fix definitions later.” Both indicate that clarity and ownership were never fully established.

How should the scorecard be used?

Use it mid-engagement to catch blind spots early, and again post-engagement to evaluate whether behavior actually changed. If delivery looks strong but trust and adoption don’t improve, the engagement needs correction.

Why do organizations default to delivery metrics?

Because delivery metrics are familiar, objective, and politically safe. Organizational metrics force harder conversations about ownership, trust, and decision-making, so they’re often avoided.

What is the single most important takeaway?

If the only thing you can measure is what shipped, the engagement hasn’t gone far enough. Data Warehouse Consulting succeeds when arguments fade, confidence increases, and the organization stops fighting its data.