Introduction

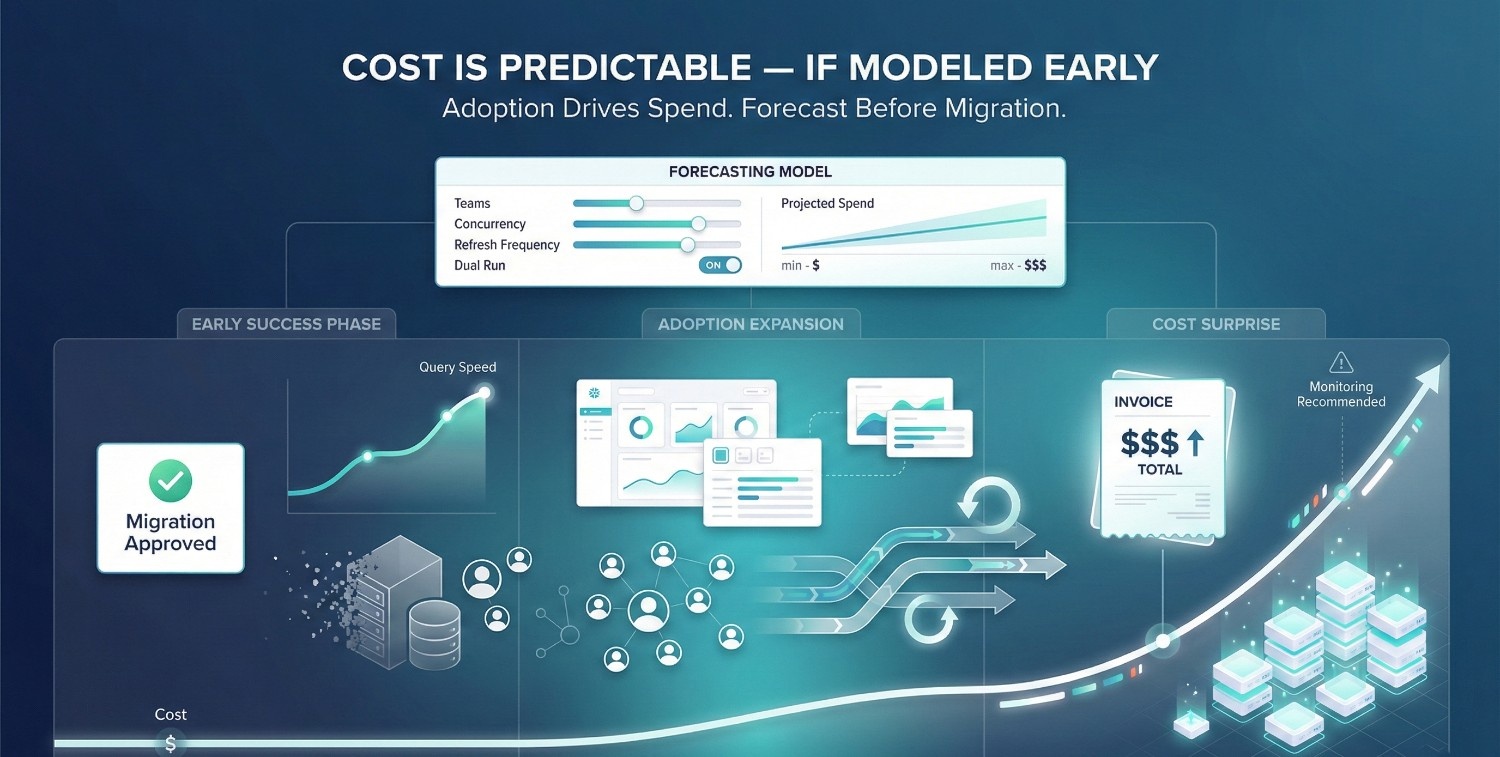

The pattern is remarkably consistent across many Snowflake migrations. First, the migration is approved. Snowflake is selected. The technical plan looks solid.

Early results are positive:

- Queries are faster

- Infrastructure headaches disappear

- Teams move quicker than before

Then, months later, the questions start:

- “Why is our Snowflake bill higher than expected?”

- “Who’s running all this compute?”

- “Did we size this wrong?”

By the time these questions surface, Snowflake is already embedded in executive dashboards and operational workflows, and cost discussions become reactive instead of deliberate. This timing matters: at that point, cost decisions are no longer isolated technical choices. They affect trust, cadence, and decision velocity.

The Wrong Thing Gets Blamed

When Snowflake cost surprises appear, teams often blame:

- Inefficient queries

- Analyst behavior

- Poor tuning decisions

Those issues exist, but they’re rarely the primary root cause. The deeper problem is simpler and more uncomfortable:

Most teams migrate without an explicit cost model. They migrate with a technical plan and hope the bill works itself out.

Snowflake makes this easy to do: its pricing feels flexible, usage-based, and forgiving. But flexibility without forecasting creates blind spots, especially as adoption grows.

Why Cost Overruns Are Predictable (Not Accidental)

Snowflake cost doesn’t spike randomly. It increases when:

- More teams onboard than expected

- Workloads multiply quietly

- Legacy usage patterns carry forward

- New use cases emerge post-migration

None of this is unusual. What’s unusual is entering migration without modeling how those behaviors translate into spend. When cost forecasting is missing:

- Leadership loses confidence after the fact

- Optimization turns into damage control

- Migration success is questioned retroactively

Not because Snowflake failed, but because expectations were never grounded.

What This Guide Will Help Leaders Do

This guide is about changing when cost conversations happen. Instead of asking “Why is Snowflake expensive?” after migration, it will help you:

- Forecast Snowflake cost before migration using realistic usage scenarios

- Replace guesswork with explicit assumptions about workloads and growth

- Enter migration with financial confidence, not just technical readiness

Snowflake cost doesn’t need to be a surprise. But avoiding surprises requires treating cost as a design input, not a post-migration metric.

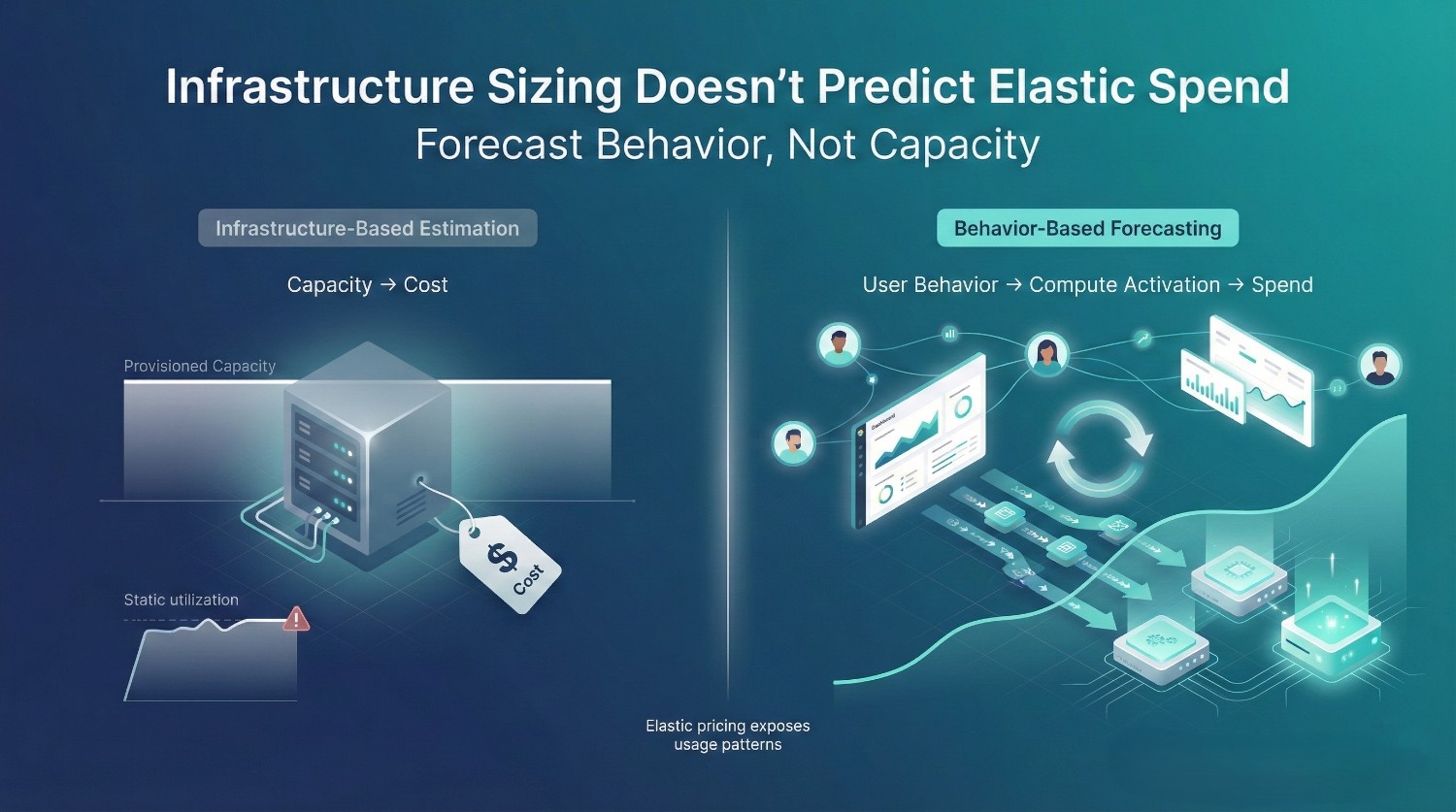

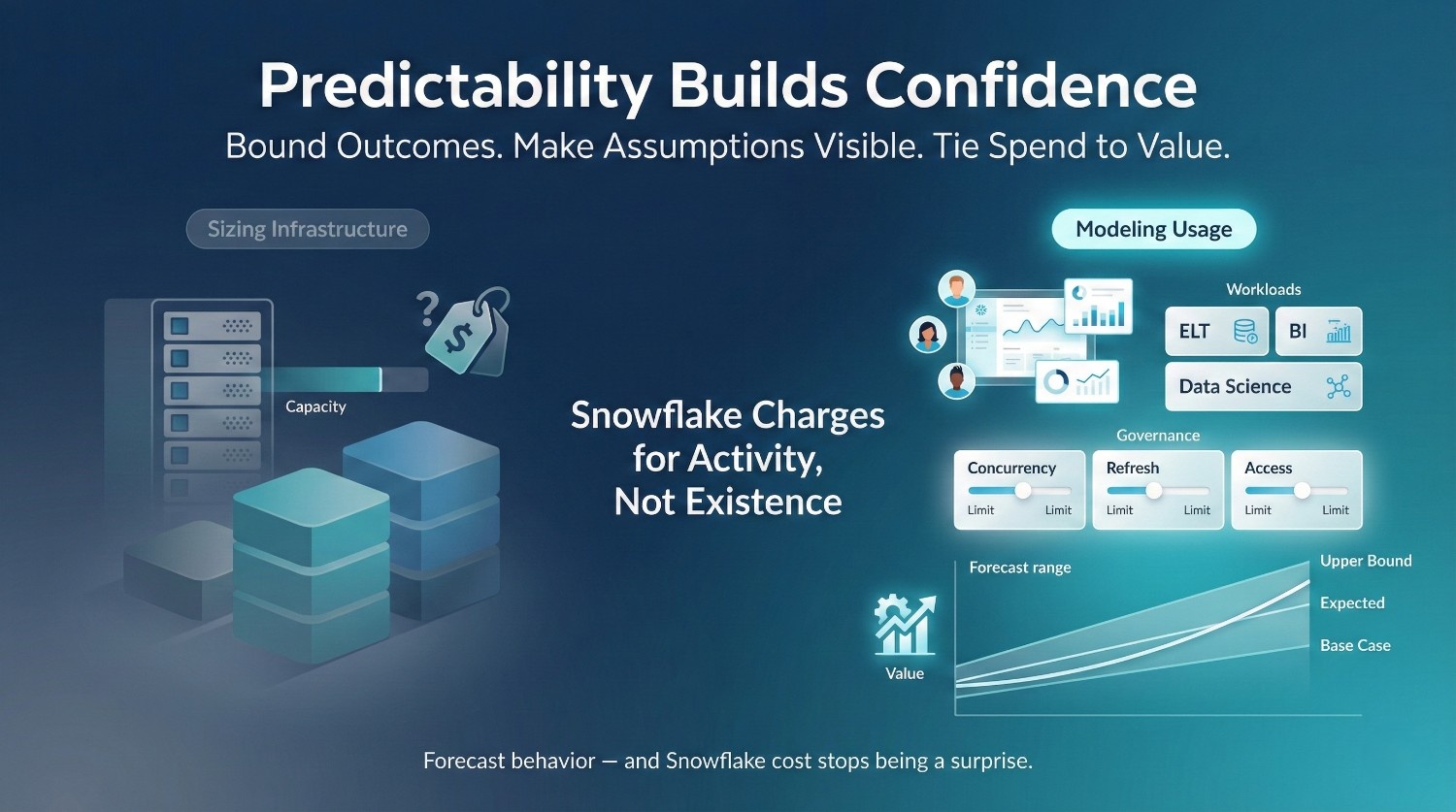

Why Traditional Cost Estimation Fails for Snowflake

Most Snowflake cost forecasting starts with an incomplete mental model. Teams try to estimate Snowflake the same way they estimated on-prem or legacy data warehouses, by mapping infrastructure size to cost. That approach breaks down quickly in Snowflake, because cost is no longer tied to capacity, but to behavior.

Why Legacy Cost Models Don’t Translate

Traditional environments were costed around:

- Fixed hardware or reserved capacity

- Predictable utilization ceilings

- Centralized access patterns

Once capacity was provisioned, marginal usage often felt “free.” Costs were sunk, not elastic.

Snowflake inverts this model. There is no fixed ceiling. Every workload, every rerun, every new use case has a direct and visible cost impact. What used to be hidden is now explicit.

The Misleading Assumptions

“We only pay for what we use.”

This sounds safe, but it hides a risk. Snowflake will happily execute anything you ask it to. If usage patterns aren’t forecasted, cost becomes a reflection of unbounded behavior, not efficiency.

“Cloud is cheaper by default.”

Cloud can be cheaper, but only when usage is intentional. Without guardrails, Snowflake replaces fixed waste with variable sprawl.

Both assumptions are technically true, but practically dangerous without discipline. The danger is not in the pricing model itself, but in assuming that flexibility automatically produces efficiency.

Why Snowflake Cost Is Harder to Estimate

Snowflake cost is hard to estimate because it is:

Usage-driven

Cost scales with how often compute runs, not how much data exists.

Behavior-dependent

Analyst habits, dashboard refresh rates, and trust levels all influence spend.

Organizationally influenced

Who gets access, how decisions are made, and how conflicts are resolved all shape usage patterns.

This is why two teams with identical data volumes can have radically different Snowflake bills. In practice, variance is driven far more by query frequency, trust in shared metrics, and workload isolation than by schema or storage size.

The Core Insight

Snowflake cost forecasting fails when teams try to predict infrastructure needs instead of predicting behavior.

Without modeling:

- Who will query

- How often

- On which workloads

- With what confidence

Any cost estimate becomes a guess.

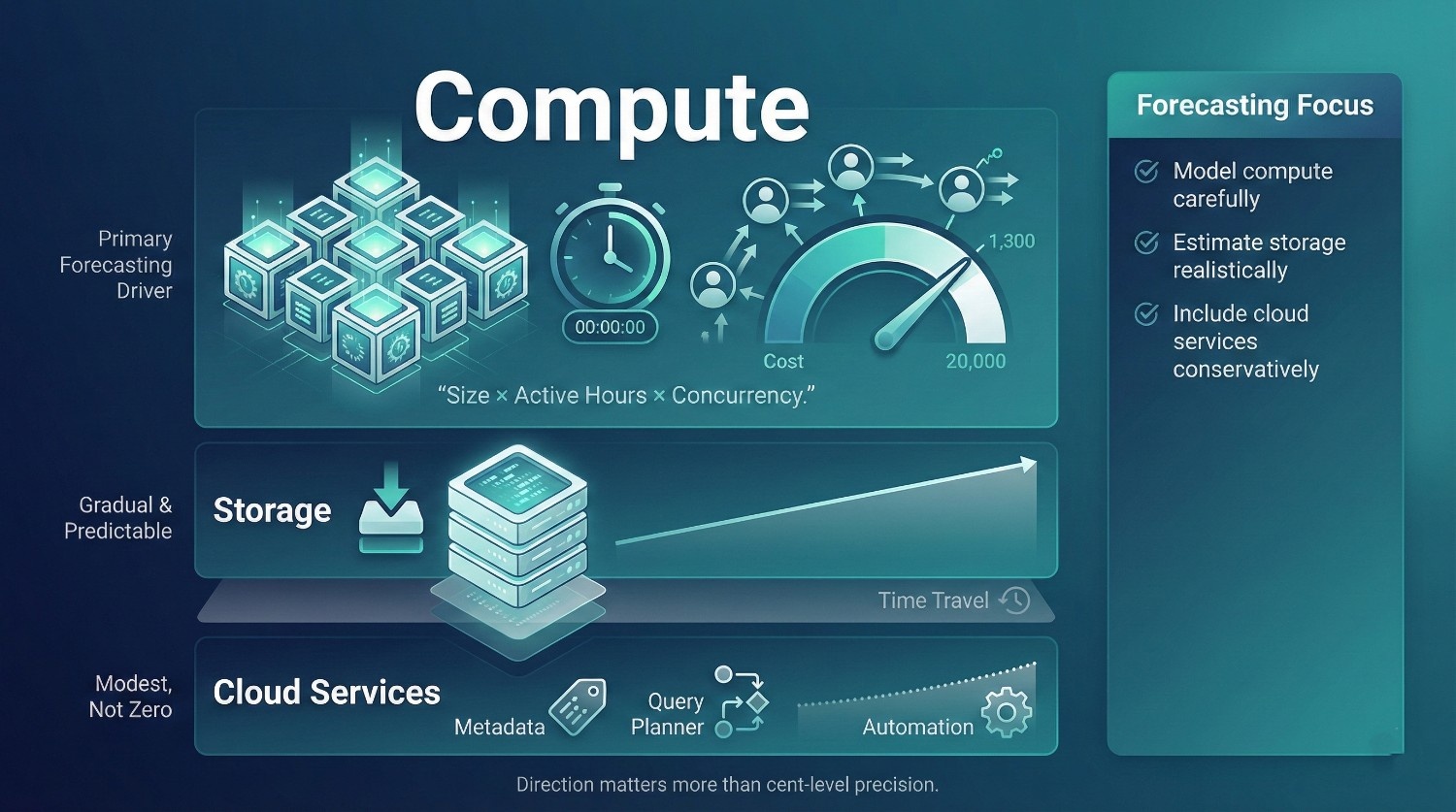

Understanding Snowflake Cost Components (Only What You Need for Forecasting)

Accurate Snowflake cost forecasting does not require modeling every pricing detail.

It requires understanding which components actually move the bill, and which ones rarely do. For forecasting purposes, you only need to focus on three cost buckets, in the right order of importance.

1. Compute (Primary Forecasting Driver)

Compute is where Snowflake cost lives, and where forecasting effort should be concentrated.

Snowflake compute cost is driven by virtual warehouses, and more specifically by the interaction of three factors:

Warehouse size

- Each size (XS → 6XL) has a fixed credit burn rate

- Each step up in size roughly doubles cost per second

- Bigger warehouses don’t just cost more, they cost more continuously while active

Runtime

- Credits are consumed for every second a warehouse is running, with a 60-second minimum each time it resumes

- Idle time matters just as much as query time

- Auto-suspend behavior directly affects spend

Concurrency

- Multiple users, dashboards, and jobs hitting the same warehouse increase runtime

- Concurrency scaling (additional clusters in a multi-cluster warehouse) can multiply compute consumption if not modeled explicitly in forecasts

For forecasting, compute should be modeled as:

(Warehouse size × expected active hours × expected concurrency patterns)

Most forecasting errors come from underestimating active hours, not from misjudging warehouse size. Teams model peak usage but ignore how often compute will actually be running.
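As a rough illustration of the formula above, the sketch below multiplies a per-size credit rate by active hours and an average cluster count. The credit rates follow the doubling-per-size pattern for standard warehouses; the function name, the `avg_clusters` parameter, and the example workload numbers are assumptions made for illustration, not measured values.

```python
# Credits per hour for standard warehouses; each size step doubles the rate.
CREDITS_PER_HOUR = {
    "XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16,
    "2XL": 32, "3XL": 64, "4XL": 128, "5XL": 256, "6XL": 512,
}


def monthly_compute_credits(size, active_hours_per_day, avg_clusters=1.0, days=30):
    """Warehouse size x expected active hours x average running clusters."""
    return CREDITS_PER_HOUR[size] * active_hours_per_day * avg_clusters * days


# Hypothetical BI warehouse as first planned: Small, 10 active hours, one cluster.
planned = monthly_compute_credits("S", active_hours_per_day=10)

# Same warehouse if active hours turn out to be 14 and a second cluster
# runs about a quarter of the time (avg_clusters=1.25).
observed = monthly_compute_credits("S", active_hours_per_day=14, avg_clusters=1.25)

print(planned, observed)
```

Note that the warehouse size never changed between the two cases; the gap comes entirely from active hours and concurrency.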

2. Storage (Predictable, Usually Secondary)

Storage is the most misunderstood, and least dangerous, component of Snowflake cost.

Snowflake storage is:

- Compressed

- Columnar

- Linearly priced

Key components:

- Active data – what queries read today

- Time Travel – historical versions retained for recovery

- Fail-safe – short-term recovery layer

Why storage rarely causes budget shock:

- Growth is gradual and visible

- Cost scales with data volume, not usage behavior

- Compression ratios are typically high

For forecasting:

- Estimate data volume growth

- Apply expected retention policies

- Validate assumptions around Time Travel

If storage dominates your forecast, you’re likely overestimating risk, or underestimating compute.
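A minimal sketch of the storage steps above, assuming a constant monthly growth rate. The price per TB and the overhead fraction for Time Travel and Fail-safe copies are placeholders, not quoted Snowflake prices; substitute the values from your own contract and retention policy.

```python
def storage_forecast(start_tb, monthly_growth, months, overhead=0.2, usd_per_tb=23.0):
    """Projected storage cost in USD for each month.

    overhead   -- assumed extra fraction for Time Travel and Fail-safe data
    usd_per_tb -- placeholder monthly price per compressed TB
    """
    costs = []
    tb = start_tb
    for _ in range(months):
        costs.append(round(tb * (1 + overhead) * usd_per_tb, 2))
        tb *= 1 + monthly_growth  # volume grows before the next month is priced
    return costs


# Hypothetical: 10 TB today, growing 5% per month.
costs = storage_forecast(start_tb=10, monthly_growth=0.05, months=3)
print(costs)
```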

3. Cloud Services Cost (Often Ignored)

Cloud services cost is the wildcard most forecasts forget.

It covers:

- Metadata management

- Query optimization and planning

- Authentication and access control

- Query compilation

Why it matters for forecasting:

- It’s not tied to warehouse runtime

- It increases with high query volumes, complex schemas, and heavy automation and orchestration

For most environments, cloud services cost is modest, but not zero. Ignoring it entirely can create small but persistent forecast gaps that undermine confidence later.
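One reason this component is usually modest: Snowflake's documentation describes a daily adjustment under which cloud services usage is billed only to the extent it exceeds 10% of that day's warehouse compute credits. The sketch below applies that rule to one day; the function name and the sample figures are illustrative assumptions, and the threshold should be checked against your own agreement.

```python
def billed_cloud_services(compute_credits, cloud_services_credits, threshold=0.10):
    """Cloud services credits billed for one day after the daily adjustment."""
    allowance = threshold * compute_credits
    return max(0.0, cloud_services_credits - allowance)


# Typical day: cloud services stay under the allowance, so nothing extra is billed.
print(billed_cloud_services(100, 8))

# Automation-heavy day: many small queries push usage above the allowance.
print(billed_cloud_services(100, 15))
```

A conservative forecast can carry a small buffer for the second kind of day rather than assuming the allowance always covers it.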

The Forecasting Priority Order

When modeling Snowflake cost before migration:

- Model compute carefully

- Estimate storage realistically

- Include cloud services conservatively

You don’t need precision at the cent level.

You need:

- Directional accuracy

- Explicit assumptions

- Scenarios leadership can reason about

The Core Principle of Snowflake Cost Forecasting

Here is the principle most Snowflake cost forecasts miss:

Snowflake cost is not driven by data volume alone; it is driven by how people and systems use data.

Two organizations can migrate the same datasets, at the same scale, onto Snowflake, and end up with radically different bills. The difference is not schema design or SQL cleverness. It’s behavior.

Why Data Volume Alone Is a Weak Predictor

Data volume tells you:

- How much storage you’ll pay for

- Roughly how large scans could be

It does not tell you:

- How often queries will run

- How many people will run them

- Whether results will be trusted or re-run

- Whether workloads will compete or stay isolated

Snowflake charges for activity, not existence. Unused data is cheap. Repeated computation is not. This is the fundamental shift teams must internalize when moving from capacity-based to consumption-based analytics platforms.

What Forecasting Actually Requires

To forecast Snowflake cost realistically, you must model usage patterns, not just infrastructure.

Usage Patterns

You need assumptions about:

- How many users will query daily

- Which dashboards refresh automatically

- How often analysts re-run similar queries

- Whether data will be explored once or repeatedly

Trust matters here. Low trust leads to:

- Duplicate queries

- Manual validation

- Higher compute usage

Workload Separation

Forecasts must assume how workloads are separated:

- ELT jobs

- BI dashboards

- Ad-hoc analysis

- Data science experiments

If these share warehouses, cost behaves very differently than if they are isolated. Most cost overruns come from mixed workloads, not underpowered hardware.

Governance Assumptions

Every forecast embeds a governance model, whether stated or not:

- Who can run what workloads

- On which warehouses

- At what frequency

- With what limits

If governance is assumed but not enforced, the forecast will be wrong.

Why Technical Sizing Alone Fails

Sizing warehouses without modeling behavior leads to false confidence:

- Warehouses look reasonably sized

- Query performance seems fine

- Costs appear manageable, on paper

Then adoption grows. Then usage patterns diverge. Then Snowflake cost “unexpectedly” spikes. The forecast didn’t fail.

The assumptions were never made explicit.

The Key Takeaway

Snowflake cost forecasting is not a math problem; it’s a design exercise. You are not predicting:

- How big Snowflake will be

You are predicting:

- How it will be used

Until usage, workload separation, and governance assumptions are explicit, any Snowflake cost forecast is an optimistic guess.

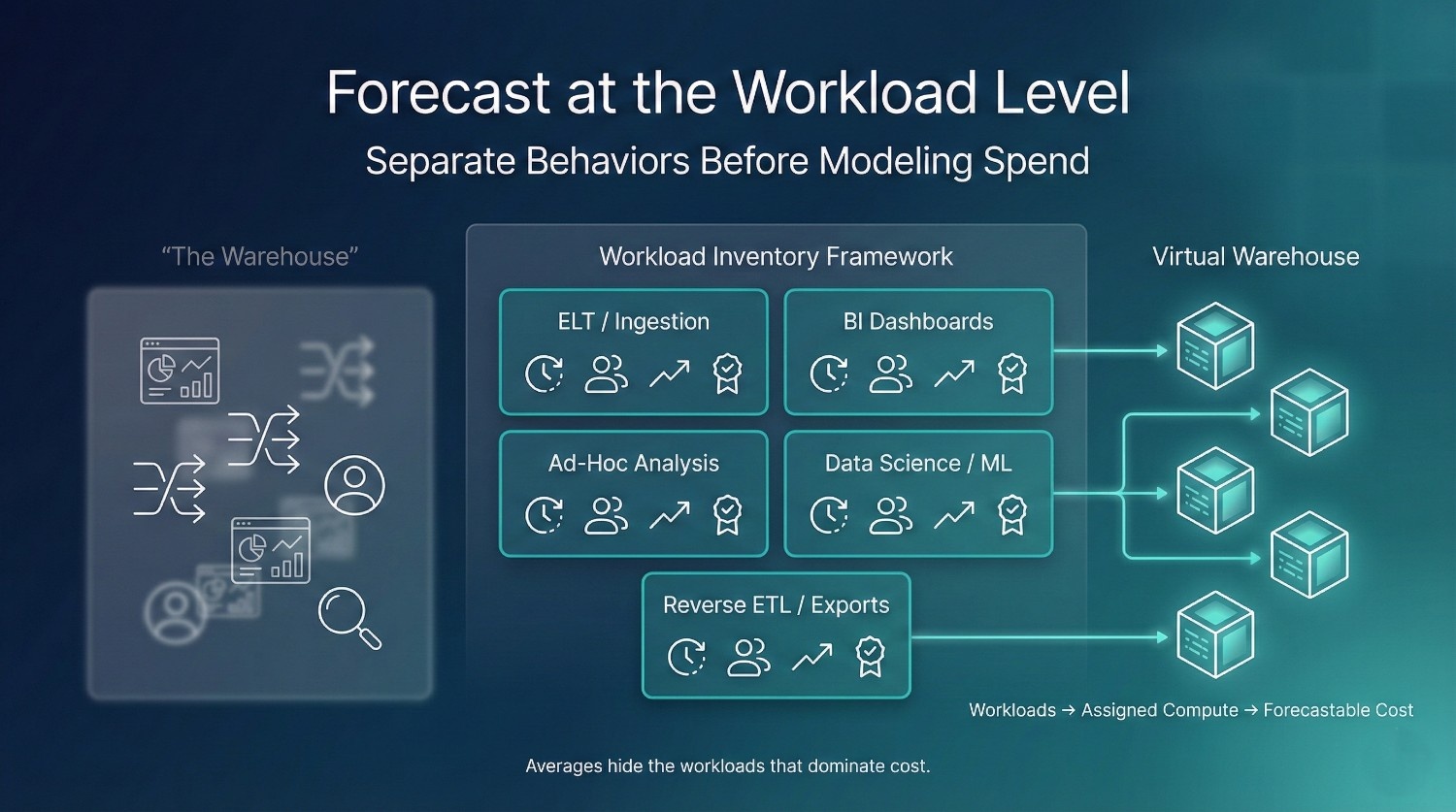

Step 1: Inventory Workloads Before Migration

Snowflake cost forecasting starts long before spreadsheets and formulas. It starts with a workload inventory.

Many migrations skip this step. Teams migrate “the warehouse” as if it’s a single thing, when in reality, Snowflake cost is driven by many distinct workloads behaving very differently. If you don’t separate them up front, your forecast will collapse into averages that don’t hold in practice. Averaged forecasts hide the very workloads that later dominate cost.

Identify and Classify All Workloads

Before migration, explicitly list and categorize workloads into clear groups. At a minimum, include:

ELT / ingestion jobs

- Batch or streaming loads

- Transformation schedules

- Backfills and reprocessing patterns

BI dashboards

- Scheduled refreshes

- Executive vs team-level dashboards

- Latency sensitivity

Ad-hoc analysis

- Analyst exploration

- One-off investigations

- Repeated “validation” queries

Data science / ML

- Feature generation

- Model training

- Experimentation workloads

Reverse ETL / exports

- Syncs to SaaS tools

- Downstream system feeds

- External reporting extracts

Each category behaves differently, and carries different Snowflake cost implications. Treating all workloads as interchangeable is one of the most common reasons early Snowflake cost forecasts collapse under real usage.

Capture the Right Attributes for Each Workload

For forecasting, you don’t need perfect accuracy. You need explicit assumptions. For every workload, document:

Frequency

- How often does it run?

- Hourly, daily, weekly, ad-hoc?

Concurrency

- How many instances run at the same time?

- Single-threaded jobs vs parallel usage?

Criticality

- Business-critical, important, or optional?

- What breaks if it slows down?

Expected growth

- More users?

- More data?

- More downstream dependencies?

These attributes matter more than raw query complexity.

A moderately expensive workload that runs once a day is usually manageable.

A slightly expensive workload that runs every five minutes is not.
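One way to keep these attributes explicit is a small record per workload. The sketch below is an assumption about how such an inventory might be structured; the class, field names, and the two sample workloads are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    category: str           # e.g. "ELT", "BI", "ad-hoc", "ML", "reverse ETL"
    runs_per_day: float     # frequency
    concurrency: int        # instances running at the same time
    criticality: str        # "critical", "important", or "optional"
    annual_growth: float    # expected growth in runs, 0.5 means +50%
    minutes_per_run: float  # typical runtime of one execution

    def monthly_runtime_hours(self, days=30):
        """Total execution hours per month, before idle time is added."""
        return self.runs_per_day * self.minutes_per_run * days / 60


# A moderately expensive job that runs once a day...
nightly = Workload("nightly_rollup", "ELT", 1, 1, "critical", 0.1, 30)
# ...versus a slightly expensive refresh that runs every five minutes.
refresh = Workload("ops_dashboard", "BI", 288, 1, "important", 0.5, 2)

print(nightly.monthly_runtime_hours(), refresh.monthly_runtime_hours())
```

With these sample numbers, the two-minute refresh accumulates far more runtime than the thirty-minute nightly job, which is the point the two sentences above make.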

Why This Step Is Non-Negotiable

This inventory becomes the backbone of your Snowflake cost model because it allows you to:

- Assign workloads to appropriate warehouses

- Model runtime realistically instead of optimistically

- Separate predictable cost from exploratory cost

- Identify where governance and limits will matter

Without this step:

- All workloads blur together

- Concurrency is underestimated

- Idle time is ignored

- Forecasts rely on averages that don’t survive real usage

The Key Insight

Snowflake cost is not forecasted at the platform level. It is forecasted at the workload level.

Once workloads are visible, cost modeling becomes concrete instead of abstract.

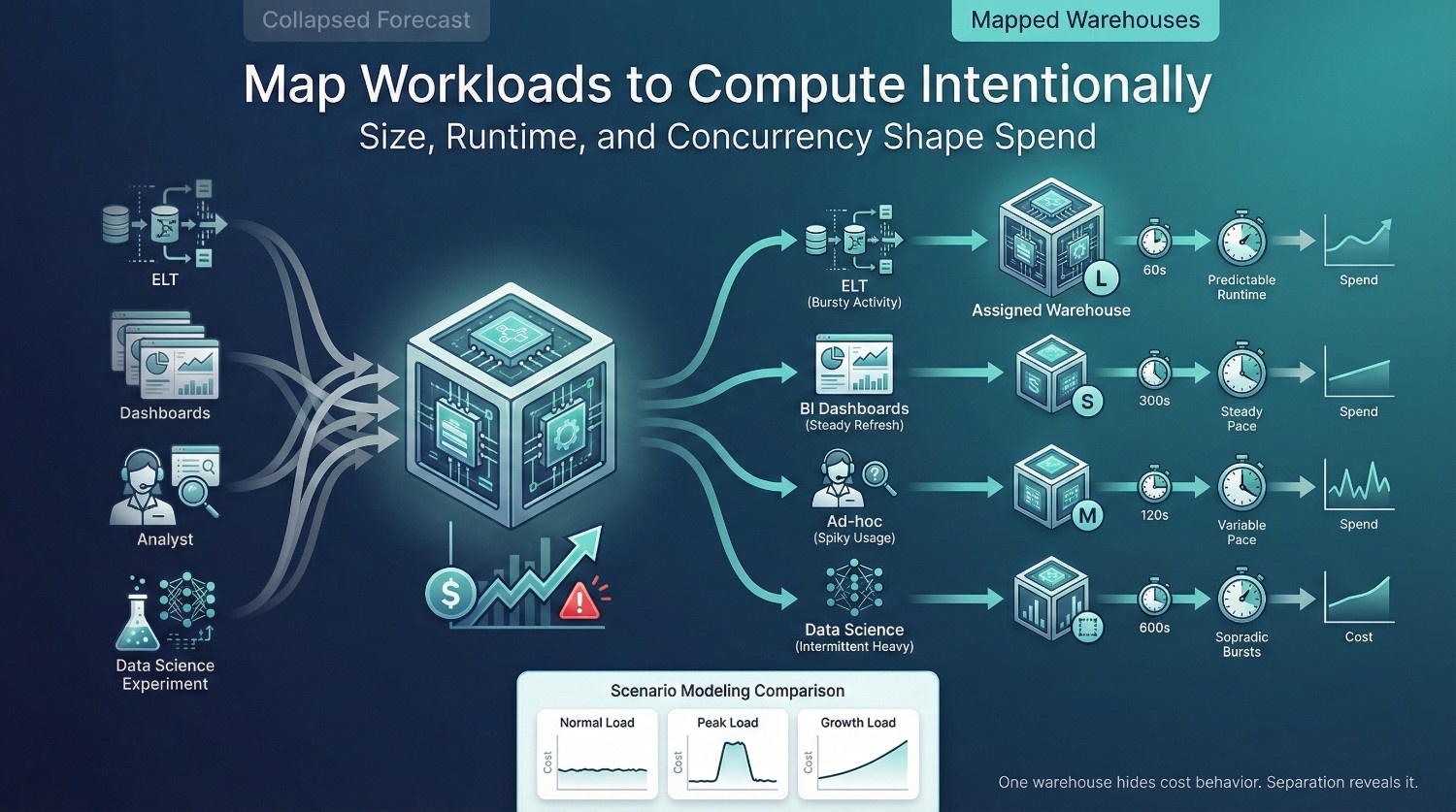

Step 2: Map Workloads to Virtual Warehouses

Once workloads are inventoried, the next mistake teams make is collapsing everything onto “a Snowflake warehouse” in the forecast. That shortcut almost guarantees an inaccurate model.

Why One Warehouse ≠ One Cost Model

Different workloads behave differently:

- ELT jobs run in bursts

- Dashboards run continuously

- Ad-hoc analysis is spiky and unpredictable

- Data science workloads are heavy but intermittent

Putting all of these into a single warehouse, even just on paper, hides the real cost drivers. Snowflake cost forecasting only works when each workload class is mapped intentionally to a compute profile.

Assign the Right Attributes Per Workload

For each workload category, explicitly define:

Warehouse size

- Small warehouses for light, frequent workloads (BI, ad-hoc)

- Larger warehouses only where parallelism actually reduces runtime (heavy ELT, backfills)

Forecasting mistake: assuming larger warehouses are safer. In reality, they burn credits faster even when underutilized.

Auto-suspend settings

- Aggressive suspend (30–90s) for interactive workloads

- Longer suspend only where cold starts would meaningfully disrupt operations

Forecasting mistake: ignoring suspend time altogether. Idle minutes are often a larger cost driver than execution time.

Expected runtime

- Average runtime per execution

- Not best-case, not worst-case, but typical behavior

When the typical range is wide, lean toward its upper end. If a job “usually runs 5–10 minutes,” model it as 10.
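To see why suspend time belongs in the model, the sketch below estimates the billed time for a single isolated run: execution time plus the idle tail before auto-suspend, subject to the 60-second minimum per resume. It assumes runs do not overlap and the warehouse suspends between them; the function names and sample values are illustrative.

```python
def billed_seconds_per_run(runtime_s, auto_suspend_s, min_billed_s=60):
    """Seconds billed for one isolated run on an otherwise idle warehouse."""
    return max(runtime_s + auto_suspend_s, min_billed_s)


def non_execution_share(runtime_s, auto_suspend_s):
    """Fraction of billed time not spent executing the query."""
    billed = billed_seconds_per_run(runtime_s, auto_suspend_s)
    return (billed - runtime_s) / billed


# A 20-second query with a 10-minute auto-suspend bills 620 seconds.
print(billed_seconds_per_run(20, 600), round(non_execution_share(20, 600), 3))

# The same query with a 60-second auto-suspend bills 80 seconds.
print(billed_seconds_per_run(20, 60), non_execution_share(20, 60))
```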

Account for Concurrency Explicitly

Concurrency is where most forecasts quietly fail.

You must assume:

- How many dashboards refresh at the same time

- How many analysts query during peak hours

- Whether concurrency scaling will activate

Ignoring concurrency leads to:

- Underestimated runtime

- Missed multi-cluster costs

- Surprise spikes post-migration

If concurrency is uncertain, model multiple scenarios:

- Normal load

- Peak load

- Growth load

Forecasts don’t need certainty. They need ranges leadership can reason about.
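The three load scenarios can be turned into a rough cluster count. This is a planning sketch only: `per_cluster_capacity` is an assumed number of queries one cluster handles comfortably, `max_clusters` is a hypothetical cap, and the concurrent-query figures are made up.

```python
import math


def clusters_needed(concurrent_queries, per_cluster_capacity=8, max_clusters=4):
    """Estimated running clusters for a given number of concurrent queries."""
    wanted = math.ceil(concurrent_queries / per_cluster_capacity)
    return min(max_clusters, max(1, wanted))


# Hypothetical concurrent queries under each scenario.
scenarios = {"normal": 6, "peak": 20, "growth": 40}
clusters = {name: clusters_needed(q) for name, q in scenarios.items()}
print(clusters)
```

In the growth case the cap is reached, which means queries would queue instead of adding cost. That tradeoff is worth stating in the forecast.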

Common Forecasting Mistakes to Avoid

Oversizing “just in case”

This feels conservative but inflates baseline cost immediately, and discourages right-sizing later.

Ignoring concurrency spikes

Average usage hides peaks. Snowflake bills peaks just as eagerly as steady state.

Assuming warehouses are always busy

Some are. Many aren’t. Idle time must be modeled, or your forecast will be wrong.

The Key Takeaway

Snowflake cost forecasting becomes accurate when:

- Warehouses are modeled per workload

- Runtime and suspend behavior are explicit

- Concurrency is assumed, not hoped away

You are not forecasting Snowflake. You are forecasting how your organization will consume compute.

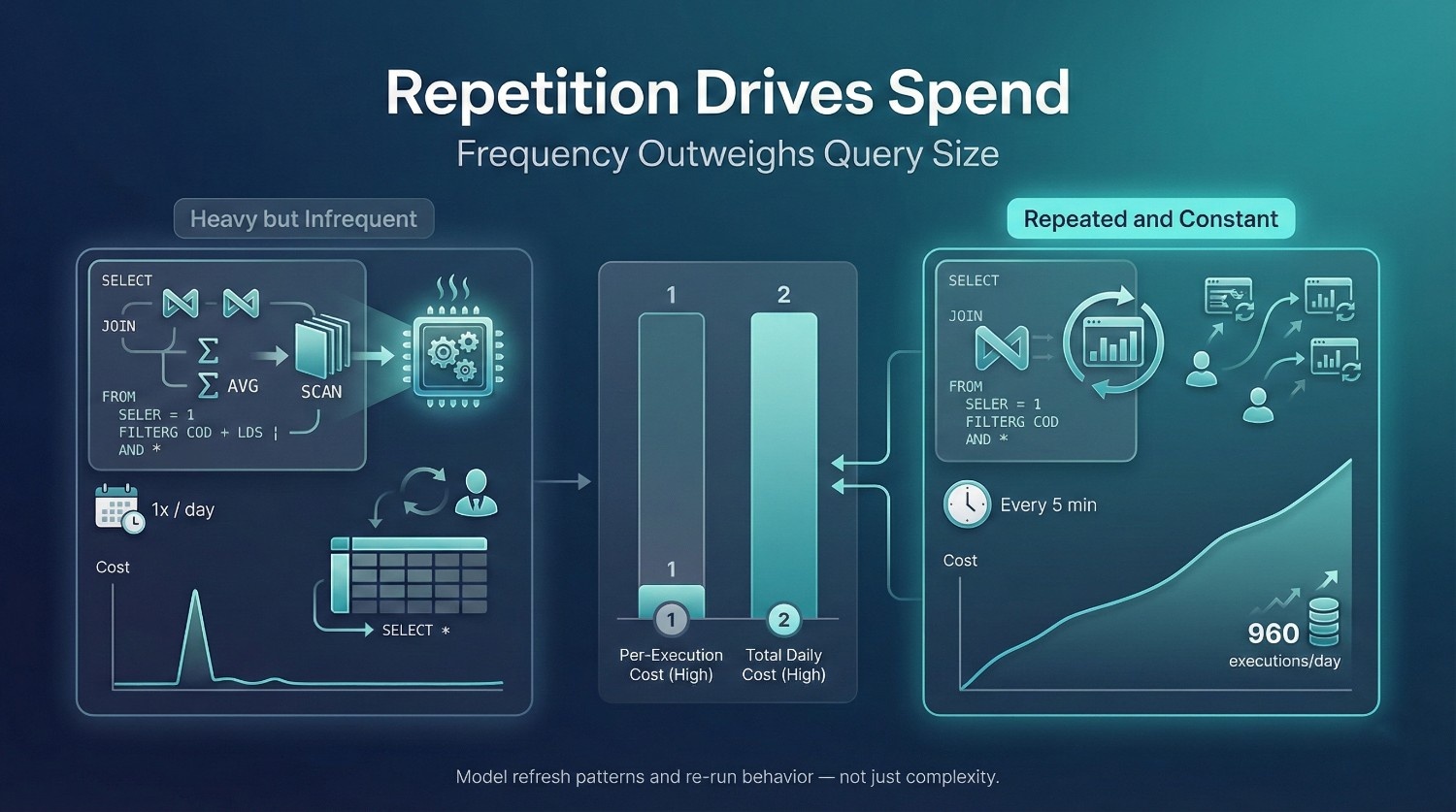

Step 3, modeling query behavior, is where most Snowflake cost forecasts quietly fall apart. Teams model how much data exists and how many queries will run, but they don’t model how queries are actually used. Snowflake cost doesn’t grow because queries are complex. It grows because they are repeated.

High-Cost vs High-Frequency Queries

These are not the same, and confusing them leads to bad forecasts.

High-cost queries

- Large scans

- Complex joins

- Heavy aggregations

- Backfills or historical analysis

These matter, but they’re often infrequent and predictable.

High-frequency queries

- Dashboard refreshes

- Repeated validation queries

- Slight query variations run by multiple users

These are far more dangerous. A moderately expensive query run every 5 minutes (288 executions per day) will often cost more over a month than a very expensive query run once a day. Forecasts that focus only on “worst queries” miss this entirely. In practice, cost is dominated by medium-weight queries that run constantly, not by rare analytical outliers.
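A quick arithmetic check of the frequency effect, using hypothetical per-run credit figures. The "rare" query here is assumed to be 80 times more expensive per execution than the "frequent" one.

```python
def monthly_credits(credits_per_run, runs_per_day, days=30):
    """Credits consumed per month by one repeated query."""
    return credits_per_run * runs_per_day * days


runs_every_5_min = 24 * 60 // 5  # 288 executions per day

frequent = monthly_credits(0.05, runs_every_5_min)  # moderately expensive, constant
rare = monthly_credits(4.0, 1)                      # very expensive, once a day

print(round(frequent, 2), rare, frequent > rare)
```

Under these assumptions the frequent query consumes several times the credits of the rare one, despite its much lower per-run cost.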

Dashboard Refresh Patterns

Dashboards are one of the most reliable Snowflake cost drivers, and one of the most underestimated.

You must model:

- How often dashboards refresh (5 min, 15 min, hourly, daily)

- Whether refreshes are synchronized across teams

- Whether dashboards recompute heavy logic or reuse shared models

Common forecasting mistake:

Assuming dashboards are “lightweight.” They aren’t when they recompute joins and aggregations repeatedly. Ten dashboards refreshing every 15 minutes is 960 executions per day. That behavior, not query size, determines cost.

Re-Run Behavior During Investigations

Forecasts often assume clean, linear usage:

- Question → query → answer

Real usage is messier.

When something looks “off,” users:

- Re-run queries with small changes

- Add joins to cross-check numbers

- Validate results against multiple slices

Low trust multiplies queries. If the organization historically struggles with reconciliation, assume higher re-run behavior post-migration, not lower. Snowflake makes re-running easy, which increases compute usage when trust is fragile.

Why SELECT * Behavior Matters Financially

SELECT * isn’t just a style issue, it’s a cost amplifier.

It:

- Scans unnecessary columns

- Increases data scanned per query

- Breaks result caching when schemas evolve

- Encourages wide-table overuse

In a forecast, SELECT * behavior increases:

- Execution time

- Warehouse runtime

- Credit burn per query

If your current environment allows casual SELECT *, model that cost honestly, or plan to change the behavior explicitly.

The Key Insight

Snowflake cost grows with repetition, not just complexity.

Forecasting must account for:

- How often queries run

- How often they’re re-run

- How much logic is recomputed each time

If you only model query volume and ignore behavior, your Snowflake cost forecast will be directionally wrong, even if your math is perfect.

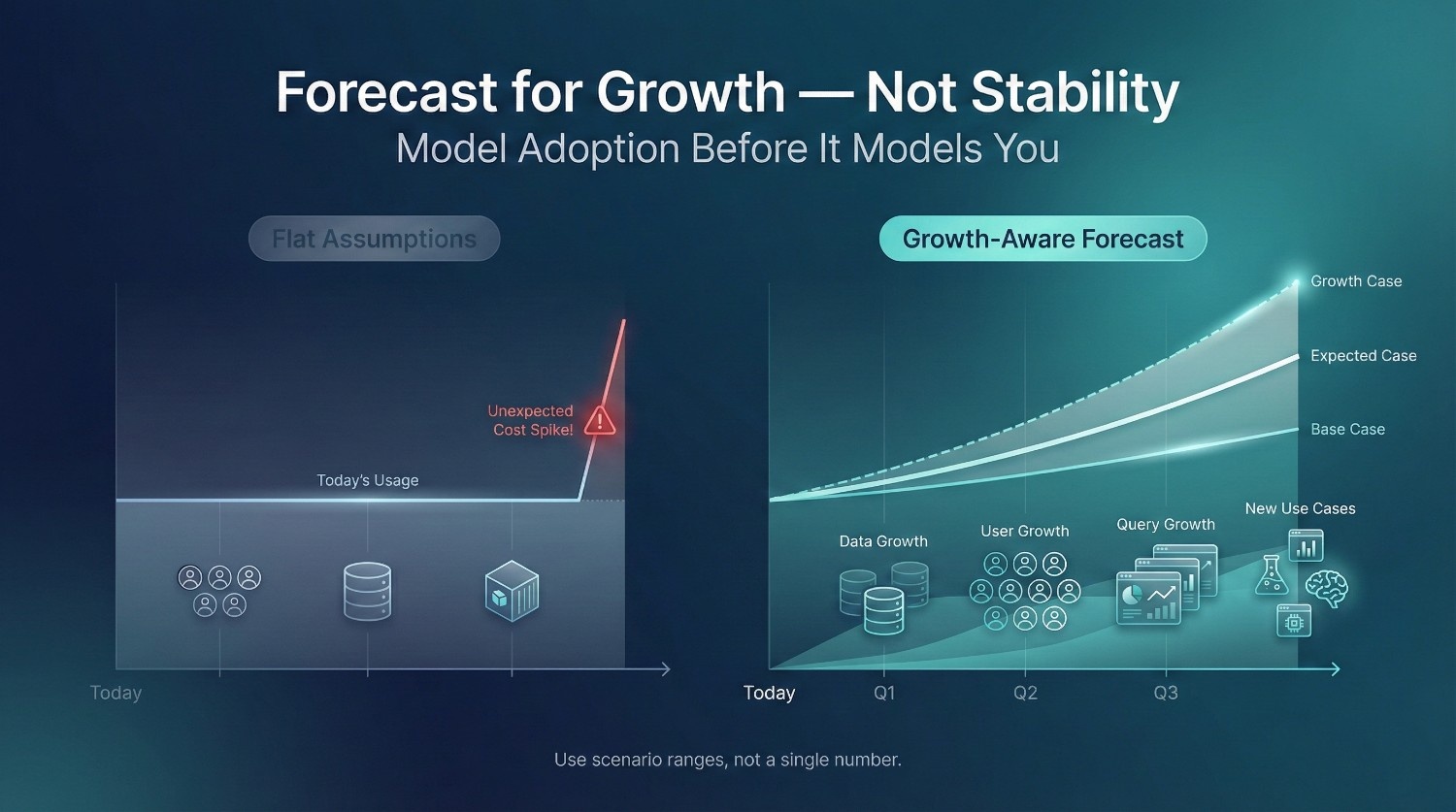

Step 4 is forecasting growth. The fastest way to lose confidence in a Snowflake cost forecast is to assume today’s usage will remain stable after migration.

It won’t. Migration doesn’t just move data; it unlocks new behavior. If growth isn’t modeled explicitly, Snowflake cost surprises are guaranteed. Forecasts that assume flat usage after migration consistently underestimate cost, because they ignore the removal of friction that previously limited exploration.

What Growth Must Be Forecasted

You don’t need perfect predictions. You need transparent assumptions. At a minimum, forecast growth across four dimensions.

Data growth

- Increase in raw data volume

- New source systems onboarded

- Higher retention expectations post-migration

Data growth affects storage, but more importantly, it increases scan sizes and execution time.

User growth

- More analysts getting access

- Business users self-serving data

- Executives exploring dashboards directly

Each new user increases concurrency and query frequency, even if individual queries are small.

Query growth

- More dashboards

- Higher refresh frequency

- Repeated validation and investigation queries

Query growth compounds cost faster than data growth.

New use cases post-migration

- Experiments that weren’t possible before

- More ad-hoc analysis

- New downstream integrations

Snowflake’s success often creates demand, and demand drives cost.

Why Conservative Forecasting Matters

Optimistic forecasts feel safe politically, but fail operationally.

When growth exceeds assumptions:

- Cost overruns feel like mistakes

- Leadership confidence erodes

- Optimization becomes reactive

Conservative forecasting does the opposite:

- It normalizes growth-driven cost increases

- It gives leadership decision context

- It prevents surprise

Use Scenarios, Not a Single Number

Snowflake cost forecasting should always produce ranges, not a single figure. At minimum, model:

Base case

- Current workloads

- Minimal growth

- Best-case discipline

Expected case

- Realistic adoption

- Planned dashboards and users

- Known new use cases

Worst reasonable case

- Faster-than-expected adoption

- High re-run behavior

- Poor workload isolation

The goal is not fear, it’s preparedness.
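A minimal sketch of how the three cases diverge over a year, assuming a constant monthly growth rate in compute credits. The starting figure and the growth rates are hypothetical inputs chosen to illustrate compounding, not benchmarks.

```python
def project_monthly_credits(current, monthly_growth, months=12):
    """Compound a monthly credit figure forward at a constant growth rate."""
    return current * (1 + monthly_growth) ** months


# Hypothetical monthly growth in compute usage for each case.
growth_assumptions = {"base": 0.01, "expected": 0.05, "worst_reasonable": 0.10}

projection = {
    name: round(project_monthly_credits(1000, rate))
    for name, rate in growth_assumptions.items()
}
print(projection)
```

Starting from the same 1,000 credits per month, the worst reasonable case ends the year at roughly three times the starting level, which is why a single number hides so much.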

The Key Takeaway

Snowflake cost forecasting fails when it assumes the following:

“If everything goes according to plan…”

Snowflake cost forecasting succeeds when it asks:

“What happens when this platform actually gets used?”

Explicit growth assumptions turn Snowflake cost from a surprise into a managed outcome.

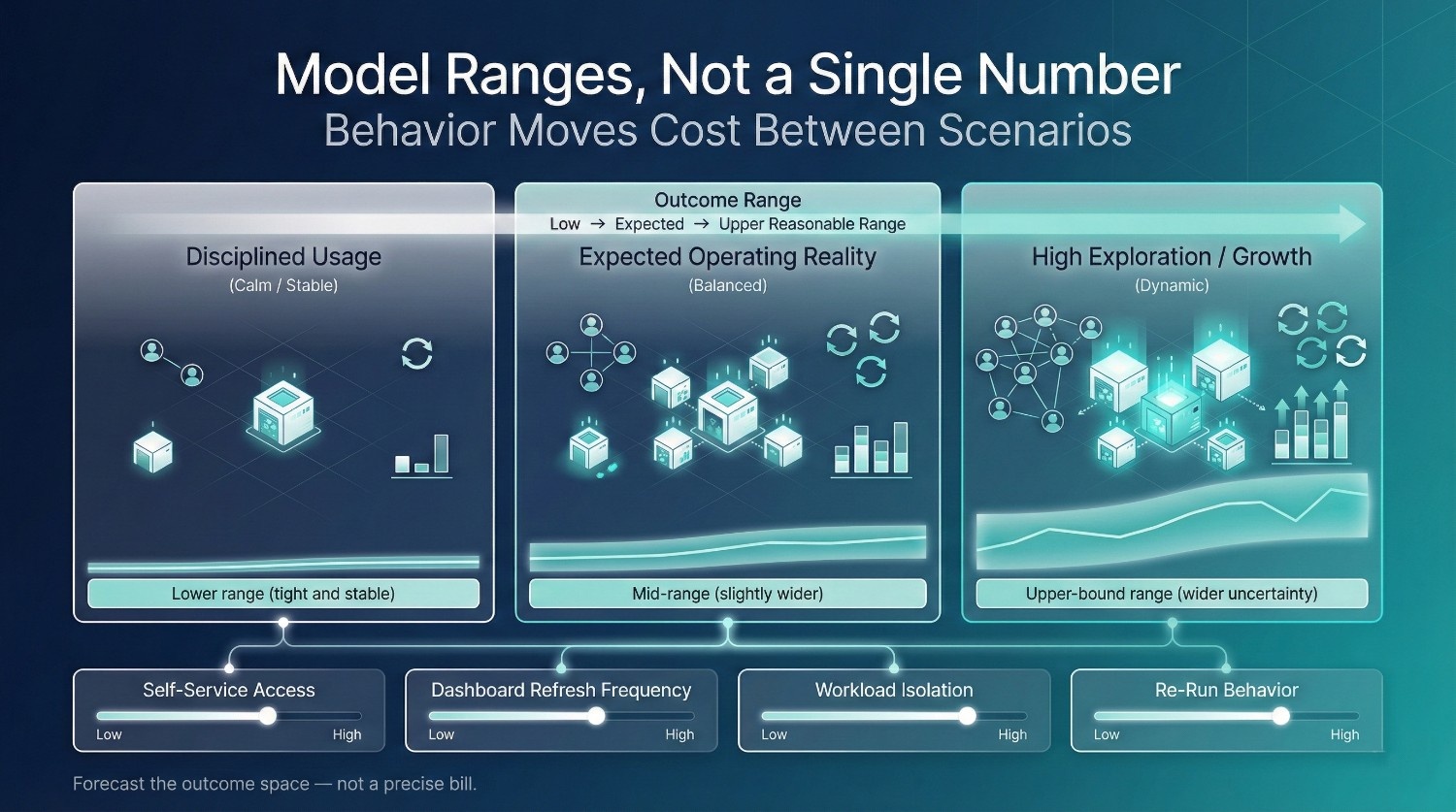

Step 5: Build Scenario-Based Snowflake Cost Models

Once workloads, warehouses, query behavior, and growth assumptions are explicit, the final step is turning them into scenarios leadership can reason about.

This is where Snowflake cost forecasting becomes credible. A single projected number creates false confidence. Scenarios allow leadership to see which behaviors, not which line items, actually change cost outcomes.

Why Scenario-Based Models Matter

Snowflake cost is not deterministic. It varies based on:

- How aggressively teams self-serve

- How much trust exists in shared models

- How quickly new use cases emerge

These dynamics appear in nearly every large-scale cloud migration: adoption accelerates precisely because friction is removed, and cost follows that acceleration unless explicitly modeled. A scenario-based model acknowledges this reality instead of pretending it doesn’t exist.

The goal is not to predict the exact bill, it’s to bound the outcome space.

Example Scenario 1: Conservative Adoption

Assumptions

- Limited self-service access

- Dashboards refresh infrequently

- Ad-hoc analysis is controlled

- Strong workload isolation

Model inputs

- Small-to-medium warehouses

- Short daily runtime

- Minimal concurrency scaling

Forecast output

- Monthly compute credits: Low

- Storage cost: Predictable and modest

- Cloud services buffer: Minimal

- Total Snowflake cost: Stable, lowest expected range

This scenario represents disciplined usage with slower adoption.

Example Scenario 2: Moderate Self-Service Analytics

Assumptions

- Analysts and business users actively query data

- Dashboards refresh regularly

- Some re-run behavior during investigations

- Workloads mostly isolated, but not perfectly

Model inputs

- Mix of small and medium warehouses

- Longer daily active hours

- Moderate concurrency during business hours

Forecast output

- Monthly compute credits: Medium

- Storage cost: Gradual growth

- Cloud services buffer: Noticeable but manageable

- Total Snowflake cost: Expected operating range

This is where most organizations actually land.

Example Scenario 3: High Ad-Hoc / Exploratory Usage

This scenario is common shortly after migration, when friction is removed faster than discipline is established.

Assumptions

- Heavy self-service adoption

- Frequent exploratory queries

- Low initial trust driving re-runs

- Mixed workloads sharing warehouses

Model inputs

- Larger warehouses running longer

- High concurrency spikes

- Concurrency scaling frequently engaged

Forecast output

- Monthly compute credits: High

- Storage cost: Secondary contributor

- Cloud services buffer: Material

- Total Snowflake cost: Upper-bound “worst reasonable” range

This scenario is not failure. It reflects genuine Snowflake usage without strong guardrails.

What to Include in Every Scenario

For each scenario, your model should clearly show:

- Monthly compute credits (by workload class)

- Estimated storage cost (with retention assumptions)

- Cloud services buffer (conservative, not zero)

- Total estimated Snowflake cost

More importantly, it should document:

- The assumptions behind each number

- What behaviors would move you from one scenario to another
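The sketch below shows one possible shape for such a model: credits by workload class, a storage estimate, and a cloud services buffer, rolled into a total per scenario. Every number, including the price per credit, is a made-up placeholder; only the structure is the point.

```python
PRICE_PER_CREDIT_USD = 3.0  # placeholder, use your contracted rate

scenarios = {
    "conservative": {"credits": {"ELT": 300, "BI": 200, "ad-hoc": 100},
                     "storage_usd": 300, "cloud_services_pct": 2},
    "moderate":     {"credits": {"ELT": 400, "BI": 600, "ad-hoc": 500},
                     "storage_usd": 400, "cloud_services_pct": 5},
    "exploratory":  {"credits": {"ELT": 600, "BI": 1200, "ad-hoc": 2200},
                     "storage_usd": 500, "cloud_services_pct": 10},
}


def total_monthly_cost(scenario):
    """Compute cost plus cloud services buffer plus storage, in USD."""
    compute = sum(scenario["credits"].values()) * PRICE_PER_CREDIT_USD
    with_buffer = compute * (100 + scenario["cloud_services_pct"]) / 100
    return with_buffer + scenario["storage_usd"]


totals = {name: total_monthly_cost(s) for name, s in scenarios.items()}
print(totals)
```

Keeping credits split by workload class makes it visible which behavior, here ad-hoc usage, drives the move from one scenario to the next.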

The Key Insight

Forecast ranges build trust. Single numbers create false certainty.

When leadership sees:

- The downside

- The upside

- The levers that move between them

Snowflake cost stops feeling unpredictable. It becomes something the organization has consciously chosen, and knows how to manage.

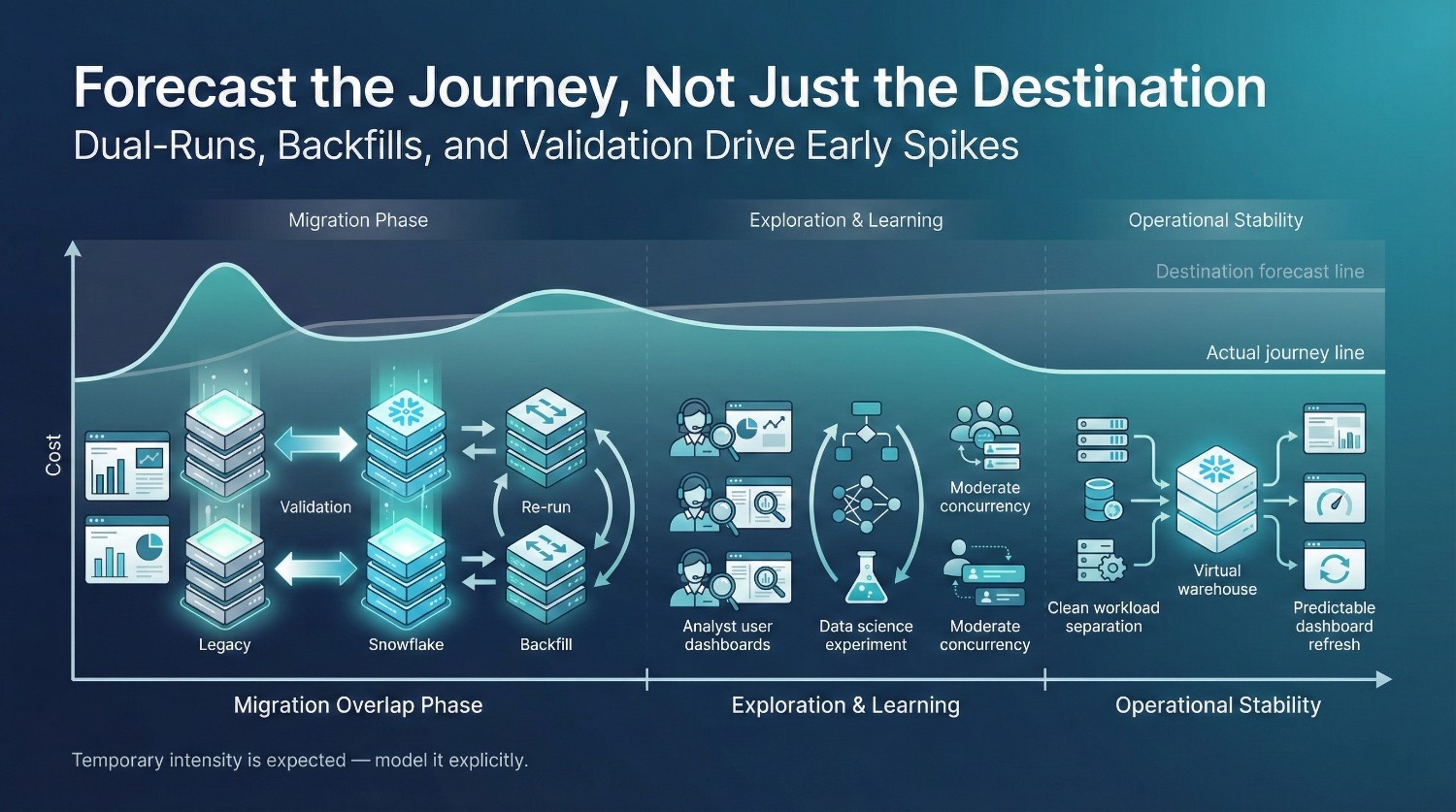

What Most Teams Forget to Include in Snowflake Cost Forecasts

Even teams that build thoughtful Snowflake cost models often underestimate one category of spend:

temporary behavior that is intense, short-lived, and expensive.

These costs don’t last forever, but they arrive early, when leadership attention is highest. If they’re missing from the forecast, confidence erodes quickly.

Dual-Run Periods

During migration, teams almost always run the legacy warehouse and Snowflake in parallel.

This doubles compute usage for:

- Validation queries

- Reporting comparisons

- Confidence building

Most forecasts assume a clean cutover. Reality includes weeks, or months, of overlap. If dual-runs aren’t modeled explicitly, early Snowflake bills look “wrong,” even when they’re expected.

Validation and Reconciliation Queries

Validation is not light usage. It includes:

- Side-by-side comparisons

- Historical reconciliation

- Re-running queries with slightly different logic

These queries are often:

- Heavy

- Repetitive

- Time-bound but intense

Forecasts that only include steady-state usage miss this entirely. Early cost spikes are often a sign of validation and learning, not inefficiency. Forecasts that account for them preserve trust during migration.

Backfills and Reprocessing

Migration almost always triggers:

- Historical backfills

- Schema corrections

- Logic replays

These are some of the most expensive queries in the entire lifecycle. They:

- Scan large volumes

- Run on large warehouses

- Execute repeatedly as logic evolves

Backfills are temporary, but they can dominate early Snowflake cost if not anticipated.

Cost of Experimentation

Snowflake enables experimentation:

- Analysts explore freely

- Data scientists iterate quickly

- Teams test new metrics and models

This is value-creating, but not free. Forecasts that assume:

“Usage will stabilize immediately”

ignore the reality that exploration spikes before discipline settles in.
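These temporary costs can be layered onto a steady-state forecast as per-month uplifts that taper off. The sketch below assumes a four-month transition; the source labels and credit amounts are hypothetical.

```python
def front_loaded_forecast(steady_state_credits, monthly_uplifts):
    """Add temporary migration-phase credits to a steady-state monthly figure.

    monthly_uplifts -- one dict per month mapping a temporary source to credits
    """
    return [steady_state_credits + sum(month.values()) for month in monthly_uplifts]


# Hypothetical uplifts that shrink as validation and backfills wind down.
uplifts = [
    {"dual_run_validation": 400, "backfills": 600, "experimentation": 200},
    {"dual_run_validation": 300, "backfills": 200, "experimentation": 200},
    {"dual_run_validation": 100, "backfills": 0, "experimentation": 100},
    {},  # steady state reached
]

forecast = front_loaded_forecast(1000, uplifts)
print(forecast)
```

Showing the first month at more than double the steady state, with the reasons itemized, is what keeps an early bill from looking "wrong."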

Why These Blind Spots Matter

These costs:

- Are front-loaded

- Occur when trust is fragile

- Attract leadership scrutiny

If they’re missing from the forecast:

- Migration appears “more expensive than promised”

- Optimization feels reactive

- Confidence in the platform erodes early

The Key Takeaway

Snowflake cost forecasting fails when it models only the destination, not the journey. Dual-runs, validation, backfills, and experimentation are not edge cases.

They are expected phases of migration. Including them doesn’t make Snowflake look worse.

It makes the forecast realistic and credible.

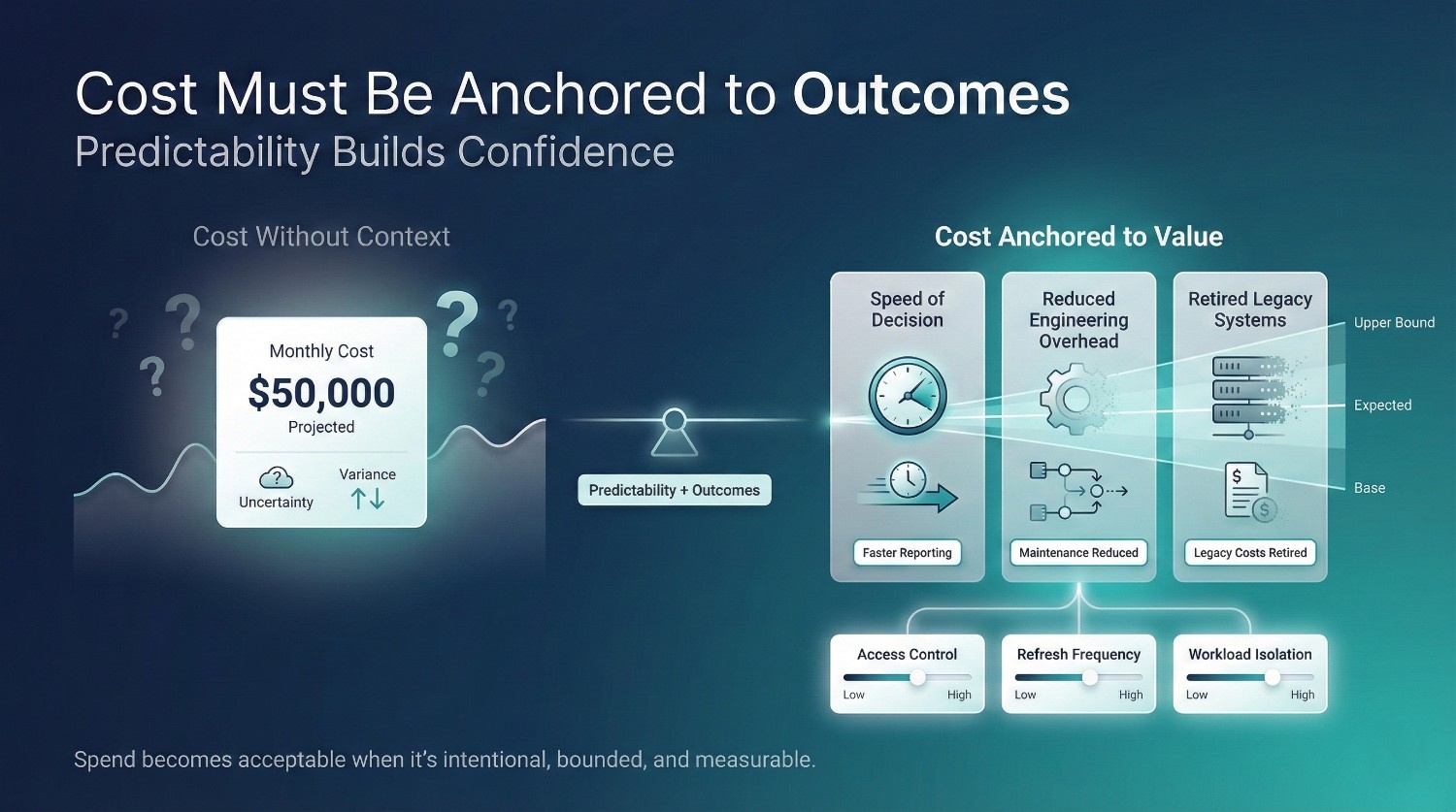

Aligning Snowflake Cost Forecasts With Business Value

A Snowflake cost forecast on its own is incomplete. Numbers without context don’t create confidence, they create fear. When leadership sees projected spend without a clear value narrative, the instinct is to delay, constrain, or second-guess the migration.

Cost only becomes acceptable when it is anchored to outcomes. Leadership approval tends to follow when cost forecasts clearly articulate what decisions become faster, cheaper, or more reliable as a result.

Why Cost Without Value Context Feels Risky

A forecast that says:

- “Snowflake will cost $X per month”

immediately triggers defensive questions:

- “Is that too high?”

- “Can we reduce it?”

- “What if it grows?”

Without value framing, Snowflake cost is interpreted as open-ended exposure, not investment.

What Snowflake Cost Should Be Tied To

Effective forecasts connect spending directly to specific, credible benefits.

Faster reporting

- Shorter time from data arrival to usable insight

- Reduced wait time for business questions

- More frequent decision cycles

This reframes cost as buying speed of decision, not compute.

Reduced engineering effort

- Fewer custom pipelines to maintain

- Less time spent on manual reconciliations

- Fewer emergency fixes and ad-hoc requests

Engineering time saved is real money, even if it doesn’t appear on the Snowflake invoice.

Retired legacy systems

In many migrations, savings from decommissioned systems and reduced maintenance offset a meaningful portion of Snowflake spend within the first year.

- Decommissioned on-prem infrastructure

- Reduced licensing and maintenance costs

- Simplified operational overhead across infrastructure and support teams

Snowflake cost should be presented net of what it replaces, not in isolation.

Why CFOs Care More About Predictability Than Minimization

CFOs rarely ask for the cheapest possible system. They ask for:

- Predictable monthly spend

- Clear drivers of variance

- Confidence that growth won’t produce shocks

A forecast that clearly shows:

- Base, expected, and upper-bound scenarios

- The behaviors that move spend between them

- The controls in place to manage that movement

is far more valuable than presenting a single optimistic number. This mirrors how CFOs evaluate other variable operating expenses. Predictability and explainability matter more than absolute minimization. In many cases, leadership will accept higher Snowflake cost if:

- It’s intentional

- It’s bounded

- It’s tied to measurable business outcomes

The Core Principle

Snowflake cost forecasting is not only a budgeting exercise. It’s a story about tradeoffs:

- What we spend

- What we gain

- What we avoid

- What we can control

When cost is aligned with value and predictability, Snowflake stops looking risky. It starts looking deliberate.

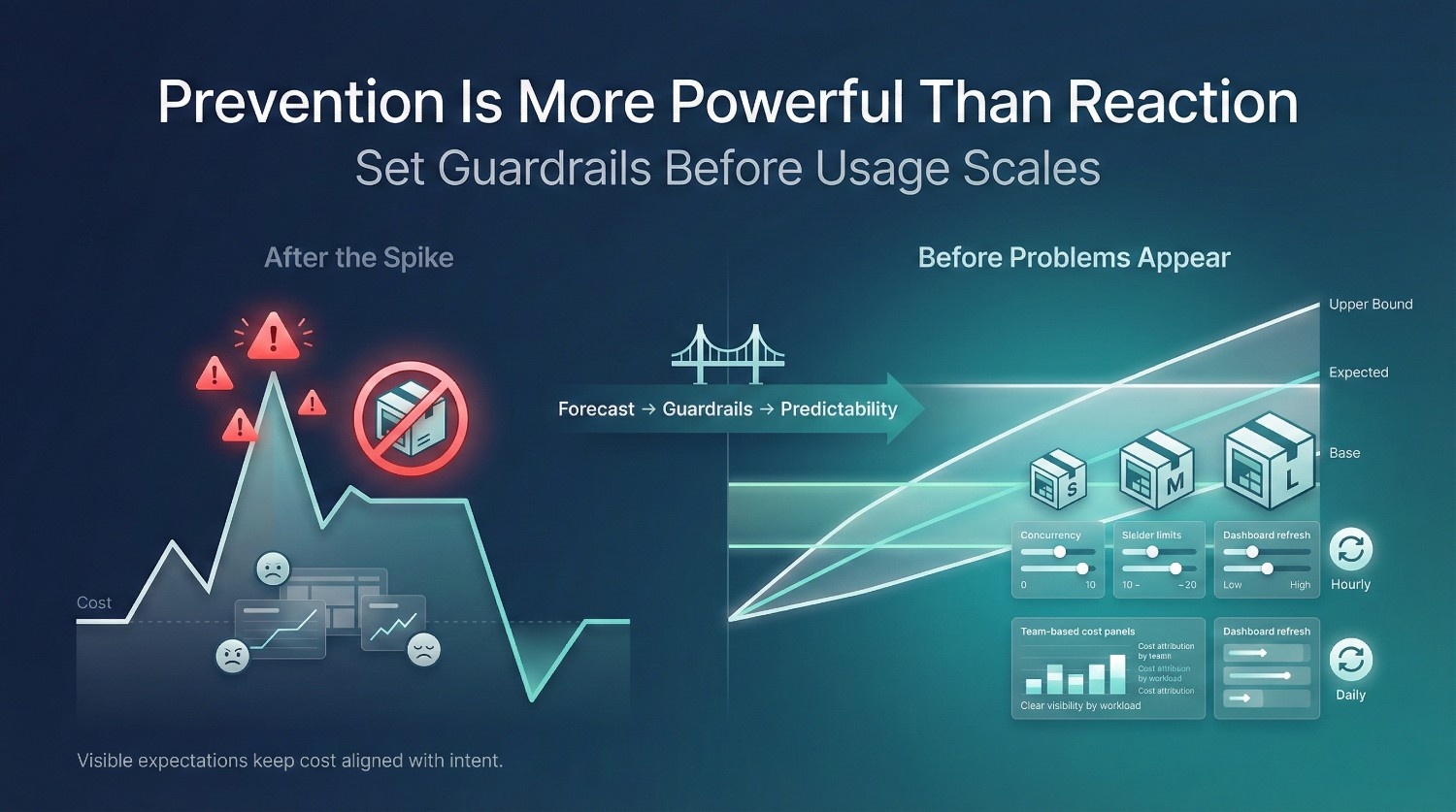

Using Forecasts to Set Guardrails (Before Problems Appear)

The real value of Snowflake cost forecasting is not prediction. It’s prevention. When forecasts are built before migration, they allow teams to set guardrails before bad habits form, rather than cutting usage after trust has already been damaged.

Budget Thresholds

Forecasts should define expected ranges, not just totals. Use them to:

- Set monthly spend bands (base, expected, upper-bound)

- Agree in advance on what triggers review vs action

- Avoid panic when spend increases within an expected scenario

This reframes cost growth as a signal, not a failure.
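A minimal sketch of agreeing on triggers in advance: actual monthly spend is mapped onto the forecast bands, and each band has a pre-agreed response. The band values and the response labels are assumptions for illustration.

```python
def spend_status(actual_usd, expected_usd, upper_usd):
    """Map a month's actual spend onto pre-agreed forecast bands."""
    if actual_usd <= expected_usd:
        return "ok"       # inside the expected scenario, no response needed
    if actual_usd <= upper_usd:
        return "review"   # above expected but still inside the forecast range
    return "action"       # beyond the upper bound that leadership signed off on


# Hypothetical bands: expected case 5,000 USD, upper bound 13,000 USD.
for actual in (4200, 7800, 15000):
    print(actual, spend_status(actual, expected_usd=5000, upper_usd=13000))
```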

Warehouse Limits

Forecasts make warehouse decisions explicit. Guardrails include:

- Approved warehouse sizes per workload

- Clear rules for when larger warehouses are justified

- Limits on concurrency scaling based on forecast assumptions

When these limits exist early, warehouse sprawl never becomes normalized.
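One way to make such limits checkable is to write them down as data. The sketch below assumes a hypothetical policy table (`APPROVED`) with a maximum warehouse size and a maximum cluster count per workload class; the workload names and limits are invented for illustration, and only a subset of Snowflake's warehouse sizes is listed.

```python
# Snowflake warehouse sizes in ascending order (a subset, for brevity).
SIZE_ORDER = ["XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"]

# Hypothetical policy: largest approved size and cluster count per workload.
APPROVED = {
    "etl": {"max_size": "LARGE", "max_clusters": 1},
    "bi_dashboards": {"max_size": "MEDIUM", "max_clusters": 3},
    "ad_hoc": {"max_size": "SMALL", "max_clusters": 1},
}


def check_warehouse(workload: str, size: str, clusters: int) -> list[str]:
    """Return a list of policy violations for a requested warehouse (empty if none)."""
    policy = APPROVED.get(workload)
    if policy is None:
        return [f"no approved policy for workload '{workload}'"]
    if size not in SIZE_ORDER:
        return [f"unknown warehouse size '{size}'"]

    violations = []
    if SIZE_ORDER.index(size) > SIZE_ORDER.index(policy["max_size"]):
        violations.append(f"size {size} exceeds approved {policy['max_size']}")
    if clusters > policy["max_clusters"]:
        violations.append(
            f"{clusters} clusters exceeds approved {policy['max_clusters']}"
        )
    return violations


print(check_warehouse("ad_hoc", "SMALL", 1))   # no violations
print(check_warehouse("ad_hoc", "LARGE", 2))   # size and cluster violations
```

A check like this could run as part of a request or review step; the rules for when a larger warehouse is justified still need to be agreed by people, not encoded by default.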

Query Governance

Forecasts expose which queries matter financially. Use that insight to:

- Require review for high-frequency or scheduled queries

- Encourage reuse through shared models and views

- Flag patterns that will push spend beyond forecasted ranges

This isn’t about blocking exploration; it’s about making repetition intentional.
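To see why repetition matters financially, a back-of-the-envelope projection is often enough. The sketch below assumes the standard credits-per-hour rates for the smaller warehouse sizes and attributes a query's full runtime to its warehouse. That is a deliberate simplification: it ignores per-start minimum billing, idle time before auto-suspend, and queries sharing a running warehouse. The query names and the review threshold are hypothetical.

```python
# Credits per hour for standard warehouses of each size.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8}


def projected_monthly_credits(
    runs_per_day: int, avg_runtime_s: float, size: str, days: int = 30
) -> float:
    """Rough monthly credits if every run occupies the warehouse for its runtime."""
    total_seconds = runs_per_day * days * avg_runtime_s
    return total_seconds / 3600 * CREDITS_PER_HOUR[size]


def needs_review(query: dict, threshold_credits: float) -> bool:
    """Flag a scheduled query whose projected monthly credits reach the threshold."""
    credits = projected_monthly_credits(
        query["runs_per_day"], query["avg_runtime_s"], query["size"]
    )
    return credits >= threshold_credits


# Hypothetical scheduled queries.
queries = [
    {"name": "dashboard_refresh", "runs_per_day": 96, "avg_runtime_s": 120, "size": "MEDIUM"},
    {"name": "nightly_rollup", "runs_per_day": 1, "avg_runtime_s": 600, "size": "LARGE"},
]
for q in queries:
    print(q["name"], needs_review(q, threshold_credits=100))
```

In this example the short query that runs every 15 minutes projects to far more credits than the heavier nightly job, which is the pattern a review step is meant to surface.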

Cost Visibility by Team

Forecasting enables cost attribution before billing surprises. Best practices:

- Map forecasted spend to teams or workload classes

- Share actuals against forecast regularly

- Let teams see how their usage compares to expectations

Visibility changes behavior faster than enforcement ever will. Teams adapt naturally when expectations are visible. Surprise controls tend to trigger avoidance, not efficiency.
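A simple actuals-versus-forecast report per team is usually enough to start. The following sketch assumes spend has already been attributed to teams by some other means (for example, by warehouse or tagging conventions). The team names and figures are made up, and `variance_report` is a hypothetical helper.

```python
def variance_report(
    forecast: dict[str, float], actual: dict[str, float]
) -> list[dict]:
    """Compare actual spend to forecast for every team seen in either input."""
    rows = []
    for team in sorted(set(forecast) | set(actual)):
        f = forecast.get(team, 0.0)
        a = actual.get(team, 0.0)
        # Teams with no forecast get None so unplanned usage stands out.
        pct = None if f == 0 else round((a - f) * 100 / f, 1)
        rows.append(
            {"team": team, "forecast": f, "actual": a, "variance_pct": pct}
        )
    return rows


# Hypothetical monthly figures.
forecast = {"finance": 4000, "marketing": 6000}
actual = {"finance": 3800, "marketing": 7500, "data_science": 1200}

for row in variance_report(forecast, actual):
    print(row)
```

Sharing a table like this regularly lets teams see where they stand against expectations, and unforecast usage shows up explicitly instead of hiding in the total.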

Why This Matters

Reactive cost cuts:

- Slow teams down

- Create resentment

- Encourage workarounds

Proactive guardrails:

- Align usage with value

- Preserve trust

- Keep Snowflake cost predictable as adoption grows

The Key Takeaway

Forecasting isn’t about control; it’s about consent. When guardrails are set before migration:

- Teams understand the rules

- Leadership understands the tradeoffs

- Snowflake cost stays aligned with intent

Predictable systems fade into the background. Unpredictable ones consume leadership attention.

That’s how Snowflake cost optimization becomes boring, and boring is exactly what you want.

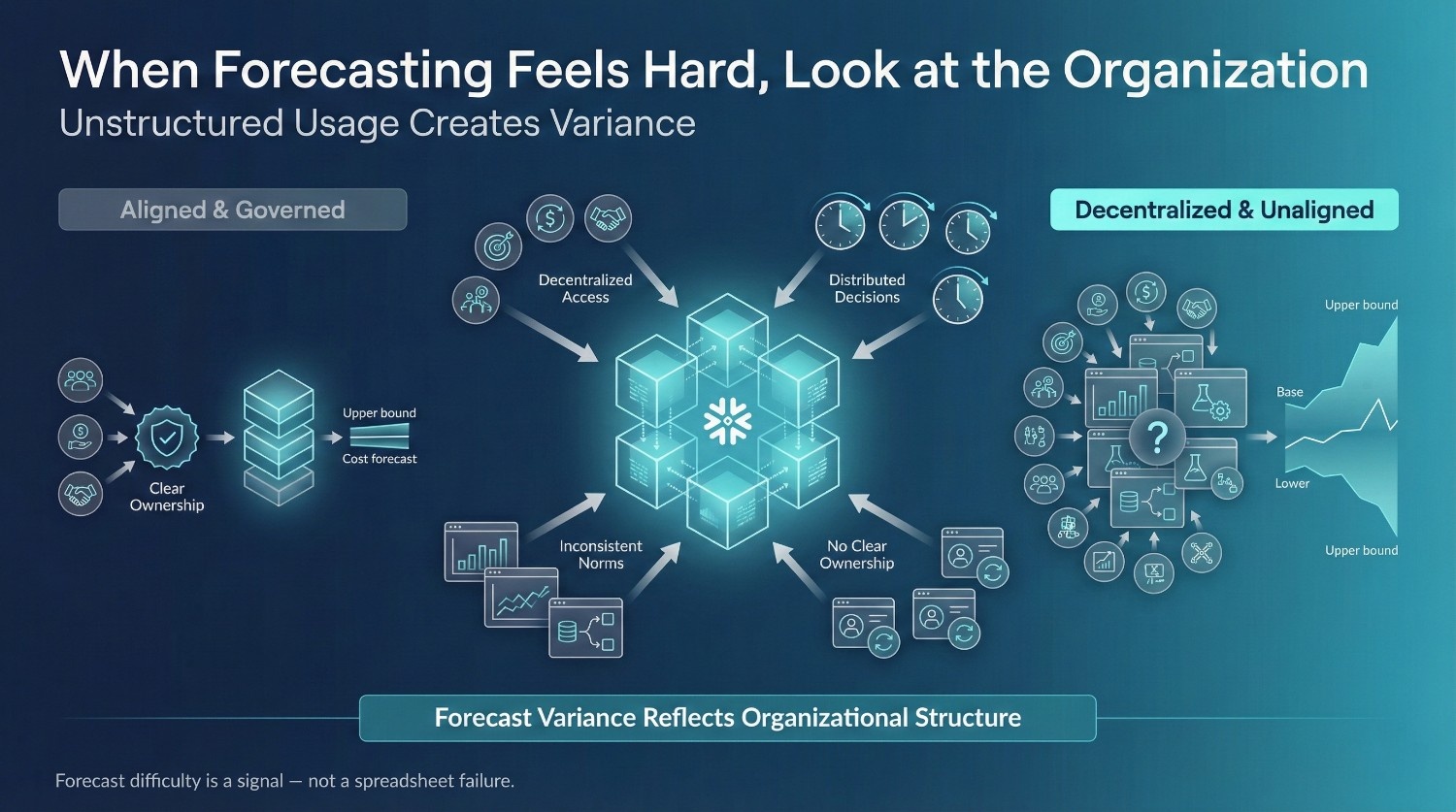

When Snowflake Cost Forecasting Gets Hard (and Why)

Snowflake cost forecasting is straightforward in theory, and frustratingly difficult in practice for certain organizations. When forecasting feels impossible, the issue is rarely spreadsheets or formulas. It’s almost always how the organization operates.

Large Enterprises

In large organizations:

- Many teams query the same data

- Workloads span time zones and business units

- Usage patterns vary widely and change often

Forecasting becomes difficult not because Snowflake is complex, but because:

- No single view of usage exists

- Decisions are distributed

- Assumptions differ by team

Without coordination, even a strong model collapses under variance. Forecast variance here reflects organizational independence, not analytical error.

Highly Decentralized Analytics

Decentralization increases value, but also unpredictability. Common challenges:

- Self-service analytics without shared norms

- Teams spinning up workloads independently

- Inconsistent modeling and query practices

In these environments, Snowflake cost reflects:

- Cultural differences between teams

- Uneven trust in shared data

- Divergent incentives

Forecasting fails when autonomy exists without alignment or shared norms.

Unclear Ownership

This is the most consistent blocker. When:

- No one owns Snowflake cost end-to-end

- Platform teams lack authority

- Finance sees numbers but not drivers

Forecasts become theoretical exercises instead of decision tools. Without ownership, assumptions can’t be enforced, and unenforced assumptions don’t forecast reality.

Ongoing Migrations

Forecasting during migration is hard because:

- Workloads are in flux

- Usage spikes temporarily

- Dual-runs distort steady-state behavior

If migration timelines are unclear or constantly shifting, forecasts must be scenario-heavy and revisited frequently. That difficulty is not a failure; it’s a signal.

The Real Reason Forecasting Feels Hard

Snowflake cost forecasting gets hard when:

- Usage is unstructured

- Decisions are implicit

- Accountability is diffuse

Those are organizational traits, not technical ones.

The Key Insight

If Snowflake cost forecasting feels impossible, the problem is not your model. It’s that:

- The organization hasn’t decided how Snowflake will be used

- Or who gets to decide

Forecasting forces those decisions into the open. Resistance to forecasting usually signals unresolved authority or misaligned incentives, not analytical difficulty.

That discomfort is not a reason to avoid forecasting; it’s the reason to do it. The next section will show how to use this difficulty as a diagnostic tool, and when external help can accelerate clarity without taking ownership away.

When to Involve External Help

Needing external help with Snowflake cost forecasting is not a failure of capability. It’s a sign that the decisions required to produce a credible forecast exceed any single team’s authority. Here are the situations where outside perspective becomes genuinely useful.

Migration Sponsors Need Defensible Numbers

When migration approval depends on:

- CFO or board-level review

- Multi-year budget commitments

- Explicit ROI justification

Rough estimates aren’t enough. At this stage, leadership is not buying numbers. They are buying confidence in how trade-offs are understood and managed. External support can help:

- Stress-test assumptions

- Build scenario-based ranges leadership can trust

- Translate technical usage into financial narratives

The value isn’t the spreadsheet; it’s confidence.

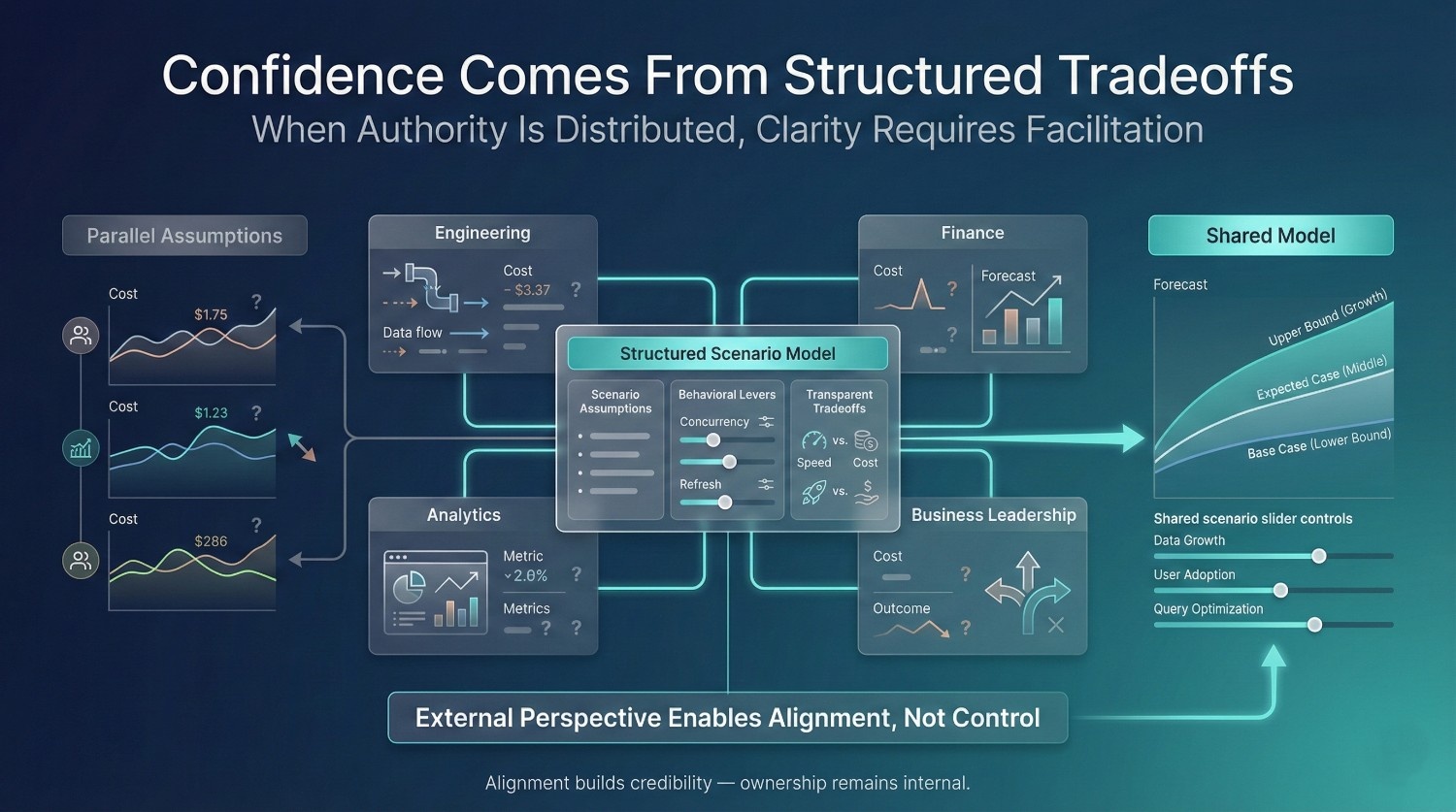

Internal Teams Lack Cross-Functional Visibility

Snowflake cost touches:

- Engineering

- Analytics

- Finance

- Security

- Business units

No single internal team typically sees all of this clearly. This mirrors findings in large platform migrations, where cost signals are distributed across technical, financial, and operational domains rather than centralized in one role.

External facilitation helps by:

- Forcing assumptions into the open

- Aligning stakeholders on tradeoffs

- Creating a shared model instead of parallel ones

Without that alignment, forecasts tend to fragment and lose credibility.

Cost Anxiety Threatens Migration Approval

When cost uncertainty becomes a blocker:

- Migrations stall

- Decisions get deferred

- Teams over-optimize prematurely

This is often less about actual cost, and more about lack of predictability. In CFO reviews, uncertainty carries a higher penalty than higher but explainable operating expense.

External help can:

- Bound worst-case scenarios

- Separate temporary migration cost from steady state

- Re-frame Snowflake cost as a managed risk, not an unknown exposure

The Important Distinction

External help should not replace ownership. The most effective engagements make internal tradeoffs clearer rather than making decisions on behalf of the organization. Its role is to:

- Surface assumptions

- Structure decisions

- Make tradeoffs explicit

Not to:

- Own Snowflake cost long-term

- Shield leadership from accountability

The Key Takeaway

Bring in external support when:

- You need a forecast leadership can stand behind

- Internal coordination is the real bottleneck

- Cost uncertainty, not technology, is slowing progress

At this stage, optimization discussions shift from engineering efficiency to organizational alignment.

Snowflake cost forecasting is then an organizational decision-making problem, and outside perspective can accelerate clarity without taking control away.

Final Thoughts

Snowflake cost forecasting fails when it’s treated as an infrastructure sizing exercise. Snowflake doesn’t charge for how big your data is. It charges for how your organization and systems use it.

This distinction aligns with Snowflake’s usage-based design and explains why similar datasets can produce very different bills across organizations.

That’s why the most accurate forecasts focus on:

- Usage patterns: who queries, how often, and for what purpose

- Organizational maturity: trust in data, reuse of models, discipline in workloads

- Decision discipline: whether tradeoffs are explicit or deferred

When these factors are ignored, even the most detailed technical plan produces cost surprises.

The Final Takeaway

The best Snowflake cost forecasts do not promise an exact number. They:

- Make assumptions visible

- Bound reasonable outcomes

- Tie spend to behavior and value

- Enable guardrails before problems appear

In other words, they prevent surprises instead of explaining them after the fact. Snowflake cost doesn’t need to be feared. Predictability, not minimization, is what allows Snowflake spend to scale alongside business confidence.

Frequently Asked Questions (FAQ)

Because most teams migrate with a technical plan, not a behavioral cost model. They size warehouses and estimate data volume but don’t forecast how often people will query, re-run, explore, and build new use cases once Snowflake is available.

Not to an exact dollar, but it can be forecasted directionally and defensibly. The goal is to bound outcomes with scenarios, not to predict a single number. Ranges create confidence; false precision creates surprises.

Modeling infrastructure instead of usage. Data volume and warehouse size matter, but repetition, concurrency, and trust-driven re-runs matter far more.

Because Snowflake will execute whatever behavior the organization allows. Without modeling how often queries run and how workloads grow, “pay for what you use” becomes “pay for everything that happens.”

Enough to be realistic, but not obsessive. Storage is predictable and rarely causes budget shock. Compute and query behavior deserve the majority of forecasting effort.

Yes. Worst-reasonable scenarios normalize growth and prevent panic later. They don’t imply failure; they describe what happens when Snowflake is actually adopted.

Because they are temporary but intense. Early Snowflake bills are dominated by parallel systems, reconciliation queries, and backfills. If these aren’t modeled, early spend looks like a mistake instead of an expected phase.

As a set of scenarios tied to business value:

- What we spend

- What behaviors drive that spend

- What we gain in return

- How we control risk

Executives care more about predictability and control than minimum cost.

When ownership is unclear, analytics is decentralized, or migration decisions are politically constrained. At that point, forecasting forces alignment, not just math.

When leadership needs defensible numbers, cost uncertainty threatens migration approval, or no internal team has end-to-end visibility. External help should surface assumptions and structure decisions, not take ownership away.

Stop trying to predict an invoice. Start designing how Snowflake will be used.

The best Snowflake cost forecasts don’t eliminate uncertainty; they prevent surprises.