Introduction

Most Snowflake vs Redshift comparisons start in the wrong place.

They focus on:

- Feature checklists

- Performance benchmarks

- Architecture diagrams

- Synthetic query tests

Those comparisons are useful early on.

They become less decisive once an organization reaches real scale, because operational cost drivers start to dominate.

At scale, for many analytics workloads, both Snowflake and Redshift are fast enough, but edge cases (high concurrency, mixed ETL/BI contention, spiky demand) still matter.

Both are mature.

Both can support complex analytics.

Yet this is exactly where cost conversations start to fall apart.

Why Traditional Comparisons Break Down at Scale

Early-stage cost comparisons assume:

- A small number of users

- Predictable workloads

- Centralized analytics teams

- Clearly defined usage patterns

Most of those assumptions stop holding once:

- Multiple business units run analytics independently

- Self-service expands beyond analysts

- Dashboards, ad-hoc queries, data science, and pipelines compete for resources

- Migration, validation, and experimentation overlap

At that point, cost is less driven by:

- Raw performance

- Storage size

- Single-query efficiency

It’s driven by organizational behavior, expressed through platform mechanics (warehouses/queues, scaling policies, retries, refresh cadence, and runaway self-service).

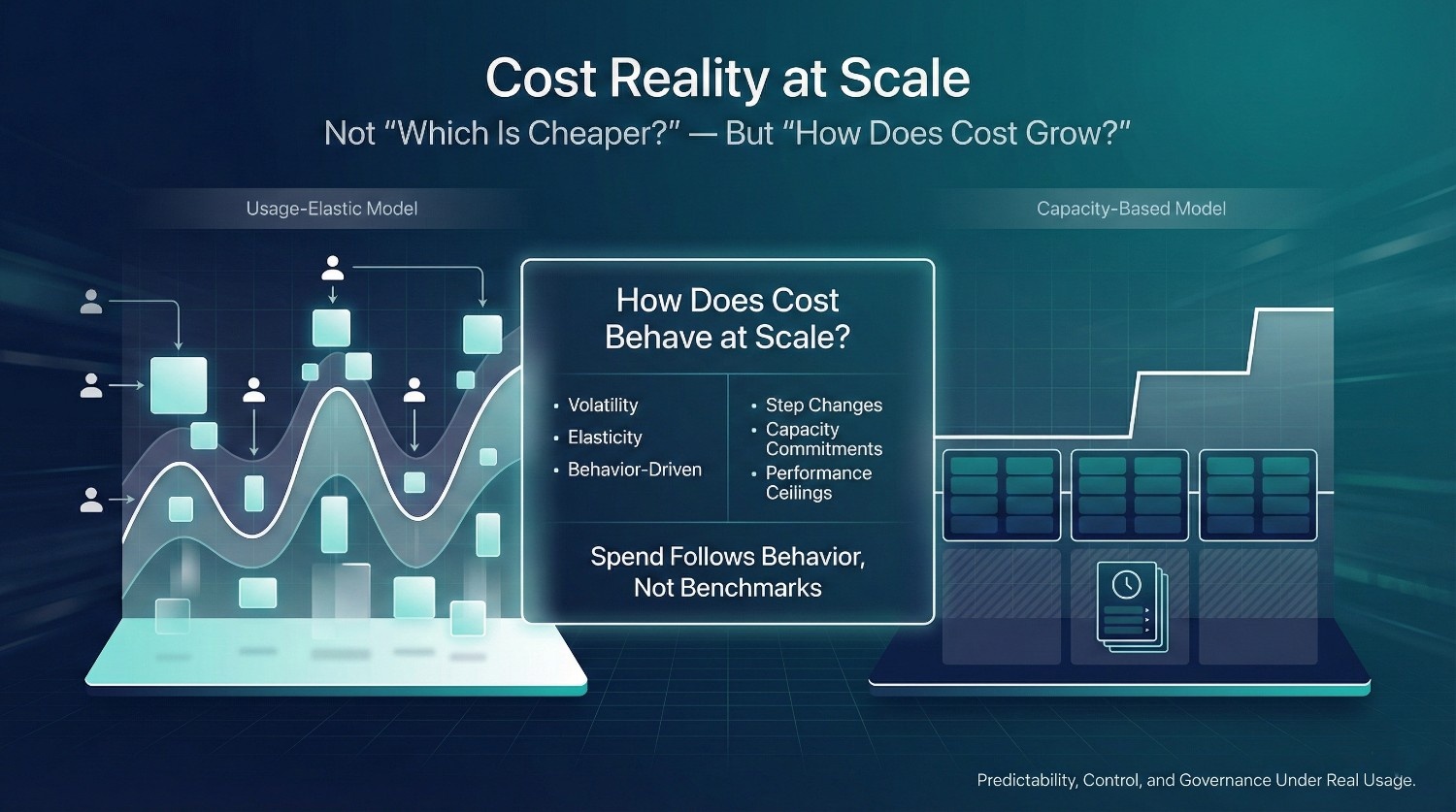

The Real Executive Question

Executives rarely ask:

“Which warehouse is cheaper per query?”

They ask:

“How does cost behave when concurrency, refresh cadence, pipelines, and self-service grow?”

That question is fundamentally different.

It’s about:

- Predictability vs volatility

- Control vs friction

- Visibility vs surprise

- Governance vs heroics

And this is where Snowflake and Redshift diverge most meaningfully.

Why This Is Not a “Cheaper Tool” Debate

At a small scale, either platform can look inexpensive, especially before concurrency and refresh automation ramp up.

At a large scale, both can become expensive.

The difference is how and why cost grows, and what knobs you get to control it.

This comparison is not about:

- Picking a universal winner

- Declaring one platform “too expensive”

- Optimizing for the lowest possible bill

It’s about understanding:

- Which cost model matches your organization’s maturity

- How each platform responds to growth in users, not just data

- Where cost surprises are likely to appear

- What kind of governance each platform requires to stay sane

How to Read This Comparison

This article is written for:

- CFOs worried about predictability

- CTOs responsible for scale and control

- Data leaders caught between adoption and spend

We’ll compare Snowflake and Redshift where it matters most:

- How cost scales with usage

- How behavior translates into spend

- How easy it is to govern cost without slowing the business

The goal is not to answer:

“Which one is cheaper?”

The goal is to help you answer:

“Which cost model aligns with how our organization actually works at scale?”

That’s the only comparison that survives real growth, because it maps spend to behavior, not benchmarks.

Why “List Price” Comparisons Are Meaningless

When Snowflake vs Redshift debates start with list price, they often end with the wrong conclusion once real-world usage is introduced.

Credits versus nodes.

Hourly rates versus reserved discounts.

Per-second billing versus per-hour instances.

None of these comparisons reliably explains how cost behaves at scale.

Credits vs Nodes: Different Abstractions, Same Trap

Snowflake pricing is expressed in credits.

Redshift pricing is expressed in nodes and clusters.

This makes Snowflake feel abstract and Redshift feel concrete, but that perception is misleading from a cost control perspective.

In practice:

- Snowflake abstracts infrastructure away from users

- Redshift exposes infrastructure directly to planners

Neither abstraction guarantees lower cost.

They simply shift where cost decisions are made.

Snowflake defers explicit capacity decisions, allowing usage patterns to implicitly determine spend.

Redshift forces capacity decisions upfront, even when usage is uncertain.

Both can be expensive. For different reasons.

On-Demand vs Reserved vs Savings Plans Don’t Tell the Full Story

Redshift pricing discussions often center on:

- Reserved instances

- Savings plans

- Commitments that lower headline rates

Snowflake discussions often emphasize:

- Pay-per-second usage

- No upfront commitment

- Elastic scaling

These comparisons miss the point.

Reserved pricing lowers unit cost, but total cost still depends on utilization discipline and workload stability. AWS FinOps guidance consistently shows that reserved capacity reduces spend only when workloads are stable and predictable; otherwise, it amplifies waste through underutilization.
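
The utilization point can be sketched with a little arithmetic. This is a minimal illustration, not a pricing tool: the rates are hypothetical and not taken from any price list, the function names are invented for this example, and it assumes the on-demand alternative is paid only for busy hours, which is an idealization.

```python
def effective_hourly_cost(reserved_rate: float, utilization: float) -> float:
    """Cost per useful hour when reserved capacity is busy only part of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return reserved_rate / utilization


def break_even_utilization(reserved_rate: float, on_demand_rate: float) -> float:
    """Utilization below which a reservation costs more per useful hour than on-demand."""
    return reserved_rate / on_demand_rate


# Hypothetical rates in dollars per node-hour (assumptions for illustration only).
ON_DEMAND_RATE = 1.00
RESERVED_RATE = 0.50

print(break_even_utilization(RESERVED_RATE, ON_DEMAND_RATE))  # 0.5
print(effective_hourly_cost(RESERVED_RATE, 0.25))             # 2.0
```

Under these assumed numbers, a node that is busy a quarter of the time costs twice the on-demand rate per useful hour, despite the 50% headline discount.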

Elastic pricing lowers idle waste, but does not automatically reduce inefficiencies caused by unmanaged usage behavior.

At scale, the dominant cost drivers are not:

- Whether compute is reserved or on-demand

- Whether billing is hourly or per second

They are:

- How often compute runs

- How many workloads overlap

- How disciplined usage patterns are

What Sticker Price Hides at Scale

List price comparisons ignore three major cost contributors that tend to dominate at scale.

Operational overhead

Redshift requires more hands-on capacity management:

- Cluster resizing

- Queue tuning

- Performance troubleshooting

That effort has real cost, even when it is not visible on a cloud invoice.

Idle capacity

Reserved or always-on clusters are paid for whether they’re busy or not.

Idle time often looks cheap on paper but becomes expensive as scale and duration increase.

Behavioral inefficiencies

Duplicate dashboards.

Parallel analytics.

Low trust leading to re-runs.

Exploration without guardrails.

These inefficiencies cost money on both platforms, and list price does nothing to predict them.

Why Unit Price Fails as a Decision Metric

At a small scale, unit price matters.

At a large scale, it becomes a weak signal compared to behavioral drivers.

Two organizations with identical pricing can see radically different costs because:

- One governs behavior intentionally

- The other lets usage sprawl

This is why:

- “Snowflake is expensive”

- “Redshift is cheaper”

are both incomplete statements.

The real determinant is how cost behaves under growth, not how it looks on a pricing page.

The Key Insight Executives Should Anchor On

At scale:

- Unit price optimization is secondary

- Cost behavior is primary

Snowflake and Redshift rarely fail financially because of pricing mechanics alone.

They fail when organizations adopt them without understanding how usage patterns turn into spend.

Industry case studies from Snowflake, AWS, and Google Cloud converge on a common finding: organizations that align governance, ownership, and usage policies early see flatter cost curves than those that rely on tooling alone.

The right comparison is not:

“Which platform is cheaper per unit?”

It’s:

“Which cost model aligns with how our teams actually work as we scale?”

That’s the only comparison that holds up once organizational and workload complexity arrives.

How Snowflake Cost Actually Scales

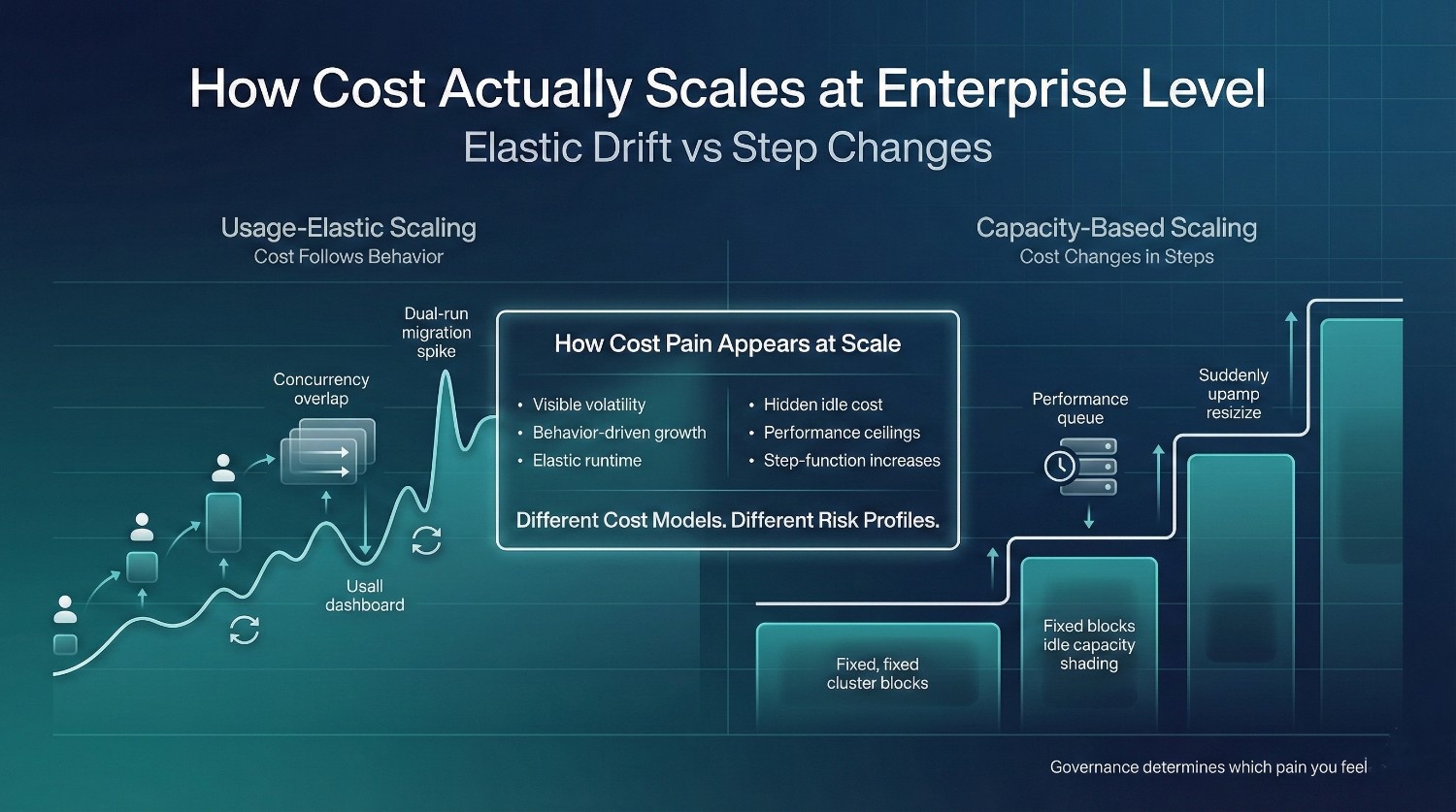

Snowflake’s appeal at small and medium scale is the same reason its cost can feel unpredictable later, because it scales primarily with usage behavior rather than capacity planning.

Understanding this distinction is critical before comparing Snowflake to Redshift at scale.

Snowflake’s Decoupled Compute Model

Snowflake separates storage from compute, and compute itself is organized into independently managed virtual warehouses.

Each warehouse:

- Runs independently

- Is billed per second while active

- Can scale up or out automatically when configured to do so
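
To make per-second billing concrete, here is a rough sketch of how active time turns into credits. The doubling of credits per size step and the 60-second minimum per resume follow Snowflake's published billing model for standard warehouses as generally documented; the credit price and the function names are hypothetical, so treat the output as illustrative only.

```python
# Credits per hour by warehouse size; each size step doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}
MIN_BILLED_SECONDS = 60  # each resume is billed for at least one minute


def warehouse_credits(size: str, run_seconds: list[int]) -> float:
    """Credits consumed, given the length in seconds of each active period."""
    billed = sum(max(seconds, MIN_BILLED_SECONDS) for seconds in run_seconds)
    return CREDITS_PER_HOUR[size] * billed / 3600


def warehouse_cost(size: str, run_seconds: list[int], credit_price: float) -> float:
    """Dollar cost at an assumed price per credit."""
    return warehouse_credits(size, run_seconds) * credit_price


# Three active periods on a Medium warehouse; the 30-second one is billed as 60.
sessions = [1800, 30, 840]
print(warehouse_credits("M", sessions))    # 3.0
print(warehouse_cost("M", sessions, 3.0))  # 9.0 at a hypothetical $3 per credit
```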

On paper, this looks ideal:

- No paying for idle infrastructure

- No upfront sizing decisions

- Easy to support many workloads

And early on, it works beautifully.

The challenge is not the model itself, it’s what happens as usage expands across teams.

Because warehouses are:

- Easy to spin up

- Easy to share

- Easy to forget about

Snowflake removes friction at exactly the point where organizations have traditionally relied on friction to control cost.

Where Snowflake Cost Grows Fastest

Snowflake cost rarely escalates due to a poorly written query.

It grows fastest through compounding usage patterns.

The biggest accelerators are:

Concurrency

Multiple users and systems running at the same time extend warehouse runtime and trigger scaling behavior. Cost increases even when individual queries are efficient and well-designed.
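
A simplified model shows why efficient queries can still raise cost when they overlap. It assumes a multi-cluster warehouse that adds one cluster per batch of concurrent queries; the slot count per cluster and the cluster cap are assumed figures, and real scale-out depends on queueing and the configured scaling policy rather than this neat formula.

```python
import math


def clusters_needed(concurrent_queries: int, slots_per_cluster: int, max_clusters: int) -> int:
    """Clusters running under a naive 'one cluster per batch of queries' model."""
    if concurrent_queries <= 0:
        return 0  # nothing running, so the warehouse can suspend
    return min(max_clusters, math.ceil(concurrent_queries / slots_per_cluster))


def hourly_credits(concurrent_queries: int, credits_per_cluster_hour: int,
                   slots_per_cluster: int = 8, max_clusters: int = 4) -> int:
    """Credits per hour; every running cluster bills at the warehouse's rate."""
    clusters = clusters_needed(concurrent_queries, slots_per_cluster, max_clusters)
    return clusters * credits_per_cluster_hour


# The same efficient query, run by more people at once (Medium = 4 credits/hour).
for concurrency in (1, 8, 9, 20, 100):
    print(concurrency, hourly_credits(concurrency, credits_per_cluster_hour=4))
```

Moving from 8 to 9 concurrent queries doubles the hourly rate in this model even though no individual query changed.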

Repeated queries

Dashboards, validations, and investigations re-run the same logic again and again. Frequency compounds cost faster than query complexity.

Uncontrolled self-service

As access expands:

- Analysts explore freely

- Business users run their own checks

- Teams duplicate similar work

This is value creation, but without coordination, it’s also cost multiplication.

Dual-run migrations

During migration:

- Legacy systems and Snowflake run in parallel

- Reconciliation queries spike

- Backfills and reprocessing dominate compute

These are temporary, but intense, and often under-forecasted. Large Snowflake environments studied by cloud FinOps teams show that concurrency and refresh frequency contribute more to long-term spend growth than query complexity alone, a pattern echoed in Snowflake customer optimization case studies.

Why Snowflake Cost Feels “Sudden” at Scale

From a finance perspective, Snowflake cost often appears to jump overnight.

In reality:

- Usage grows incrementally

- New teams onboard quietly

- Dashboards accumulate

- Validation becomes routine

But Snowflake invoices don’t directly surface usage behavior or decision context.

They only show totals.

Because cost is:

- Distributed across many small decisions

- Triggered by people, not procurement

- Elastic by default

Teams experience spend as a surprise rather than a clearly observable gradual drift.

This is why Snowflake cost issues often surface months after success:

- Adoption increases

- Trust-building activity intensifies

- Governance lags behind

The Scale Reality CFOs and CTOs Must Internalize

Snowflake cost scales:

- With how many people use data

- With how often they ask questions

- With how coordinated those questions are

It does not scale predictably with:

- Data size alone

- Headcount alone

- Query optimization alone

At scale, Snowflake rewards organizations that:

- Make usage intentional

- Design for reuse

- Govern behavior early

And it penalizes organizations that assume elasticity alone provides cost control.

This is not a flaw in Snowflake’s pricing.

It’s the economic consequence of making data frictionless at scale.

Redshift’s Capacity-Based Pricing Model

Redshift pricing is primarily anchored in fixed capacity.

You pay for:

- A cluster made up of nodes

- Chosen instance types and node counts

- Either on-demand pricing or discounted reserved commitments

Once a cluster is provisioned:

- Cost accrues continuously

- Whether the cluster is busy or idle

- Whether queries are running or not

This model forces decisions upfront:

- How big the cluster should be

- How much peak capacity to provision

- How long to commit to it

For finance, this looks clean:

- Predictable monthly invoices

- Clear contractual commitments

- Familiar depreciation-style thinking

But predictability comes from pre-paying for assumptions, not from aligning cost to actual usage.

Where Redshift Cost Hides

Redshift rarely surprises finance with sudden invoice spikes under steady-state usage. Instead, cost hides in places that don’t show up as line items.

Idle capacity

Clusters are sized for peak demand but spend most of their time underutilized. You pay for unused capacity every hour, every day.
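
The idle portion of a provisioned cluster is easy to estimate. The sketch below uses a hypothetical node rate and an assumed average utilization; neither figure comes from a price list or a real cluster, and the function names are invented for this example.

```python
HOURS_PER_MONTH = 730  # common approximation for an average month


def monthly_cluster_cost(nodes: int, node_hourly_rate: float) -> float:
    """A provisioned cluster bills for every hour, busy or not."""
    return nodes * node_hourly_rate * HOURS_PER_MONTH


def idle_spend(nodes: int, node_hourly_rate: float, avg_utilization: float) -> float:
    """Portion of the monthly bill that pays for capacity nobody used."""
    return monthly_cluster_cost(nodes, node_hourly_rate) * (1 - avg_utilization)


# Hypothetical 4-node cluster at $1.00 per node-hour, busy 25% of the time.
print(monthly_cluster_cost(4, 1.00))  # 2920.0
print(idle_spend(4, 1.00, 0.25))      # 2190.0
```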

Over-provisioning “for peak”

To avoid performance complaints, teams often size clusters for worst-case concurrency scenarios. That peak may occur briefly, or rarely, but its cost is permanent.

Manual scaling effort

Resizing clusters is not free:

- Planning downtime

- Performance testing

- Risk management

- Engineering time

As a result, clusters are resized less often than they should be. Inefficiency often becomes structural over time.

Operational overhead

Redshift requires ongoing tuning:

- Workload management queues

- Vacuuming and maintenance

- Performance troubleshooting

This effort doesn’t appear on the invoice, but it is a real cost, paid in engineering time and delayed initiatives.

AWS customer case studies consistently note that engineering time spent on cluster sizing, tuning, and operational maintenance represents a material cost that is rarely included in platform cost comparisons.

Why Redshift Cost Feels “Predictable”, Until It Isn’t

Redshift cost feels predictable because:

- The invoice barely changes month to month

- Spend is decoupled from day-to-day usage behavior

But that predictability comes with tradeoffs.

As usage grows:

- Performance degrades before cost increases

- Teams experience slowness instead of higher bills

- Finance sees stability while the business absorbs friction

Eventually, something breaks:

- A major resize is required

- A new cluster must be added

- A migration or redesign becomes unavoidable

At that point, cost jumps in large, discrete steps, not gradually.
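
The step pattern falls out of simple capacity arithmetic. In this sketch, "units" is an abstract, hypothetical measure of peak demand, and the per-node capacity and monthly node cost are invented numbers; real resizes are also constrained by the node counts and types that are actually available.

```python
import math


def nodes_required(peak_units: int, units_per_node: int) -> int:
    """Whole nodes needed to cover peak demand; capacity cannot be bought in fractions."""
    return max(1, math.ceil(peak_units / units_per_node))


def monthly_cost(peak_units: int, units_per_node: int, node_monthly_cost: int) -> int:
    """Monthly bill for a cluster sized to peak demand."""
    return nodes_required(peak_units, units_per_node) * node_monthly_cost


# Demand grows smoothly; the bill does not.
for demand in (50, 100, 101, 200, 201):
    print(demand, monthly_cost(demand, units_per_node=100, node_monthly_cost=1000))
# 50 -> 1000, 100 -> 1000, 101 -> 2000, 200 -> 2000, 201 -> 3000
```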

The predictability was real, but it masked:

- Lost productivity

- Delayed decisions

- Missed analytical opportunities

That is the hidden opportunity cost of capacity-based pricing.

The Scale Reality of Redshift Cost

Redshift scales cost by:

- Locking in capacity early

- Trading flexibility for predictability

- Absorbing inefficiency as idle spend

This can work well for:

- Stable workloads

- Centralized analytics

- Organizations comfortable with upfront commitments

It becomes risky when:

- Usage patterns change rapidly

- Self-service expands

- Teams demand flexibility faster than clusters can adapt

Redshift doesn’t surprise you with sudden invoices.

It more often surprises teams with performance ceilings and delayed adaptability.

At scale, the question is not whether Redshift is cheaper.

It’s whether your organization prefers:

- Paying steadily for unused capacity

- Or paying elastically for actual behavior

Across enterprise data platforms, cost outcomes correlate more strongly with governance maturity and workload coordination than with the underlying pricing mechanics themselves.

That choice defines how cost pain shows up, and who feels it first.
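
One way to frame the choice is a break-even in active hours. The sketch below compares a fixed monthly cluster bill with an elastic warehouse billed only while running. All rates are hypothetical, and it assumes the two options deliver comparable performance, which real workloads may not bear out.

```python
HOURS_PER_MONTH = 730


def fixed_monthly_cost(nodes: int, node_hourly_rate: float) -> float:
    """Always-on cluster: billed for every hour of the month."""
    return nodes * node_hourly_rate * HOURS_PER_MONTH


def elastic_monthly_cost(active_hours: float, credits_per_hour: int, credit_price: float) -> float:
    """Elastic warehouse: billed only for hours it is actually running."""
    return active_hours * credits_per_hour * credit_price


def break_even_active_hours(fixed_cost: float, credits_per_hour: int, credit_price: float) -> float:
    """Active hours per month at which the elastic bill equals the fixed bill."""
    return fixed_cost / (credits_per_hour * credit_price)


fixed = fixed_monthly_cost(nodes=4, node_hourly_rate=1.00)               # 2920.0
print(break_even_active_hours(fixed, credits_per_hour=8, credit_price=2.00))  # 182.5
```

With these assumed figures, the elastic option is cheaper below roughly 182 active hours a month and more expensive above it, so the answer depends on behavior rather than on either rate alone.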

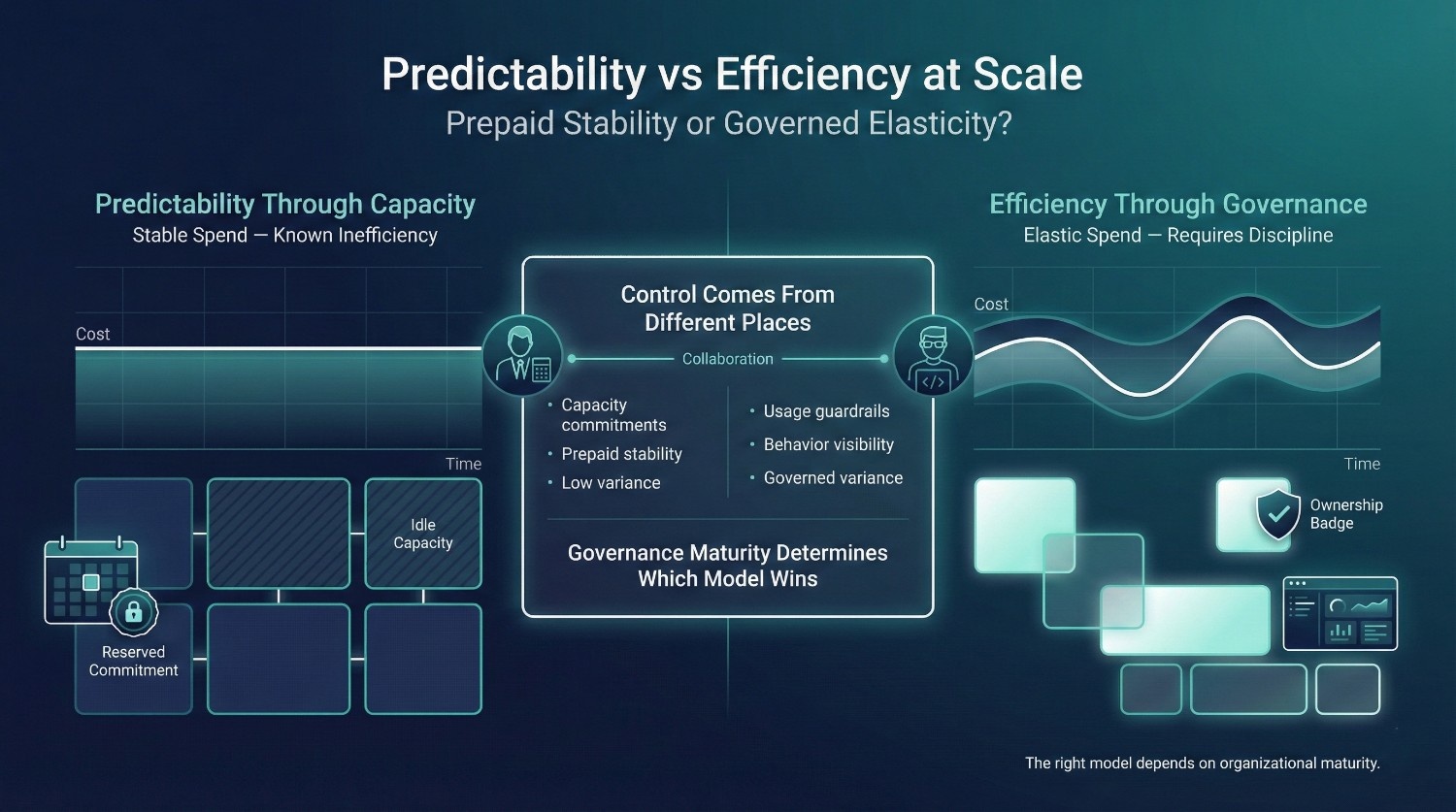

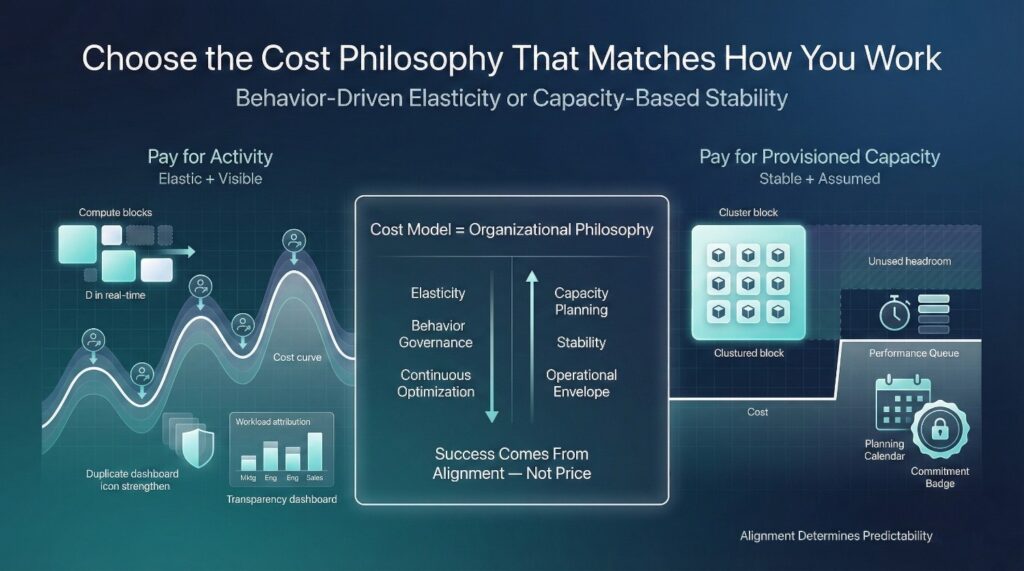

Cost Predictability vs Cost Efficiency

At scale, Snowflake vs Redshift debates often stall because finance and technology leaders optimize for different definitions of “control” and risk management.

Both platforms offer control, but in fundamentally different ways.

Redshift: Predictability First, Efficiency Second

Redshift is easier to budget because cost is closely tied to explicit capacity commitments.

From a finance perspective:

- Monthly spend is stable

- Invoices are easy to forecast

- Variance is low unless infrastructure changes

But that predictability comes at a price.

Redshift is harder to optimize dynamically because:

- Capacity decisions are coarse-grained

- Scaling requires planning and risk

- Over-provisioning becomes the default safety mechanism

Efficiency is often traded off to preserve stability and predictability.

Unused capacity becomes a known and often accepted operating cost.

For organizations with steady workloads and strong central control, this tradeoff can be reasonable.

Snowflake: Efficiency First, Predictability Requires Discipline

Snowflake is designed to optimize for elastic efficiency.

From a technology perspective:

- Compute runs only when needed

- Idle time is minimized

- Scaling is frictionless

This makes Snowflake easier to scale, but harder to budget without structure.

Predictability in Snowflake depends on:

- Clear workload separation

- Usage guardrails

- Ownership of cost decisions

- Visibility into behavior

Without these controls, efficiency can turn into cost volatility.

Snowflake customer optimization guidance consistently emphasizes that cost stability emerges only after workload isolation, ownership assignment, and usage visibility are formalized.

Why CFOs and CTOs Talk Past Each Other

CFOs often say:

“Snowflake is unpredictable.”

CTOs often respond:

“It’s only unpredictable because usage is uncontrolled.”

Both perspectives are right.

The disagreement isn’t about the platform, it’s about where predictability should come from.

- Redshift embeds predictability into the infrastructure

- Snowflake requires predictability to come from governance

One primarily relies on capacity limits.

The other relies primarily on behavioral and governance limits.

The Real Tradeoff at Scale

The choice is not:

- Predictable or efficient

It’s:

- Predictable through prepaid inefficiency

- Or efficient through governed behavior

Neither approach is objectively superior in all contexts.

Each matches different organizational realities.

Across large-scale data platforms, the strongest predictor of cost outcomes is governance maturity rather than pricing mechanics or architectural choices.

The Executive-Level Insight

Snowflake and Redshift don’t just price compute differently.

They distribute responsibility differently.

Redshift shifts responsibility to planning and commitment.

Snowflake shifts responsibility to usage governance.

Cost problems emerge when the platform’s cost model doesn’t match how the organization actually operates.

At scale, the right question isn’t:

“Which platform is cheaper?”

It’s:

“Which model fits our tolerance for change, governance maturity, and growth?”

That’s where predictability and efficiency stop competing, and start aligning.

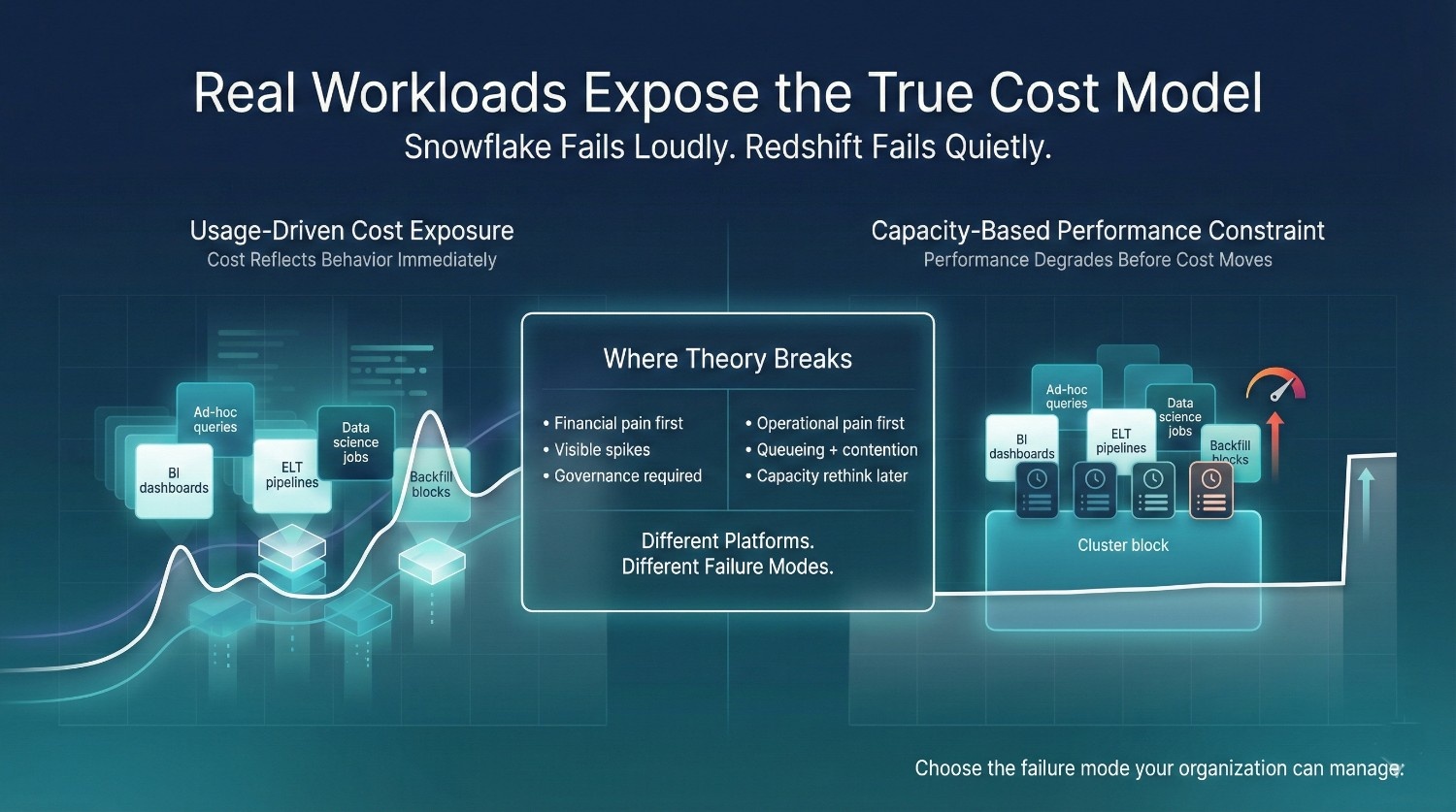

Cost Under Real Workloads (Where Theory Breaks)

Cost models often look clean in diagrams.

They break down when real workloads collide, at the same time, by different teams, with different urgency.

This is where Snowflake and Redshift tend to diverge most clearly in practice.

Real-world workload studies from large analytics platforms show that concurrency overlap and refresh frequency explain more cost variance than query efficiency alone.

BI Dashboards

Snowflake

- Charges when dashboards actually run

- Cost scales with refresh frequency and concurrency

- Duplicate dashboards multiply cost quietly

Dashboards that refresh aggressively or recompute heavy logic can become meaningful cost drivers, but only while they’re active.
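
"Active" deserves a closer look, because a warehouse stays billable during the idle tail before it auto-suspends. The sketch below is a simplified model under stated assumptions: a dashboard with its own dedicated warehouse, a fixed refresh interval, and hypothetical run time and auto-suspend settings. It ignores caching and shared usage.

```python
SECONDS_PER_DAY = 86_400


def active_seconds_per_day(interval_s: int, run_s: int, auto_suspend_s: int) -> int:
    """Billable seconds per day for a warehouse serving one scheduled refresh."""
    refreshes = SECONDS_PER_DAY // interval_s
    # After each refresh the warehouse idles until auto-suspend fires; if the next
    # refresh arrives first, it never suspends and the whole interval is billable.
    per_refresh = min(interval_s, run_s + auto_suspend_s)
    return refreshes * per_refresh


def daily_credits(credits_per_hour: int, interval_s: int, run_s: int, auto_suspend_s: int) -> float:
    """Credits per day at the warehouse's hourly credit rate."""
    return credits_per_hour * active_seconds_per_day(interval_s, run_s, auto_suspend_s) / 3600


# A 20-second refresh with a 5-minute auto-suspend, on a 1-credit/hour warehouse.
print(daily_credits(1, interval_s=900, run_s=20, auto_suspend_s=300))  # every 15 minutes
print(daily_credits(1, interval_s=300, run_s=20, auto_suspend_s=300))  # every 5 minutes: 24.0
```

In this model, a 5-minute refresh keeps the warehouse running all day even though the query itself accounts for about 96 minutes of work.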

Redshift

- Dashboards consume capacity whether they run or not

- Refresh frequency affects performance more than cost

- Cost is already “paid” once the cluster exists

Dashboards feel cheap financially, but they compete for shared capacity and can degrade performance for other workloads.

Reality check:

Snowflake tends to expose inefficient dashboards directly as visible cost.

Redshift tends to absorb them as performance debt.

Ad-Hoc Analysis

Snowflake

- Every exploratory query has a visible cost

- Repeated “just checking” behavior compounds spend

- Self-service scales fast, and expensively, without guardrails

Ad-hoc usage becomes a first-class cost driver.

Redshift

- Ad-hoc queries don’t change the invoice

- They do increase queueing, contention, and latency

- Analysts feel friction long before finance sees a cost signal

Exploration feels “free” financially, but it taxes the system.

Reality check:

Snowflake makes exploratory behavior financially visible.

Redshift translates exploratory behavior into waiting and contention rather than direct cost.

ELT Pipelines

Snowflake

- Pipelines incur cost only while running

- Backfills and retries show up immediately

- Poor orchestration becomes visible in the billing outcomes

Operational inefficiency is financially explicit.

Redshift

- Pipelines run on prepaid capacity

- Failures consume time, not incremental spend

- Inefficiency hides unless it forces a resize

Pipeline inefficiency can accumulate silently over time.

Reality check:

Snowflake tends to penalize inefficient execution patterns directly through cost.

Redshift tolerates it, until scale forces a rethink.

Data Science Workloads

Snowflake

- Iteration-heavy workloads can get expensive fast

- Model training and experimentation drive bursty spend

- Requires isolation and explicit limits to stay sane

Well suited to controlled analytics, but risky for unconstrained experimentation.

Redshift

- Data science workloads strain clusters

- Performance often degrades before cost changes become visible

- Teams self-limit due to slowness, not budget

Innovation can slow before invoices increase.

Reality check:

Snowflake reflects experimentation directly in spend.

Redshift can indirectly discourage experimentation through performance friction.

Backfills and Reprocessing

Snowflake

- Backfills are expensive, visible, and immediate

- Encourages careful planning and time-boxing

- Finance sees the spike instantly

Pain is financial, but clear.

Redshift

- Backfills stress clusters

- Often require off-hours scheduling

- May trigger future resizing

Pain is operational, and delayed.

Reality check:

Snowflake makes backfills a budget conversation.

Redshift makes them a scheduling problem.

The Key Contrast Under Real Workloads

The difference becomes pronounced under sustained real-world workloads.

- Snowflake charges for use

- Redshift charges for capacity

Snowflake externalizes cost to usage behavior.

Redshift internalizes cost into infrastructure.

Neither approach is “better.”

Each fails differently.

Snowflake fails loudly when governance lags.

Redshift tends to fail quietly until performance degradation becomes unacceptable.

Across both capacity-based and usage-based platforms, unmanaged overlap between BI, experimentation, and pipeline workloads is the most common source of scale-related inefficiency.

At scale, the right platform is the one whose failure mode your organization can manage.

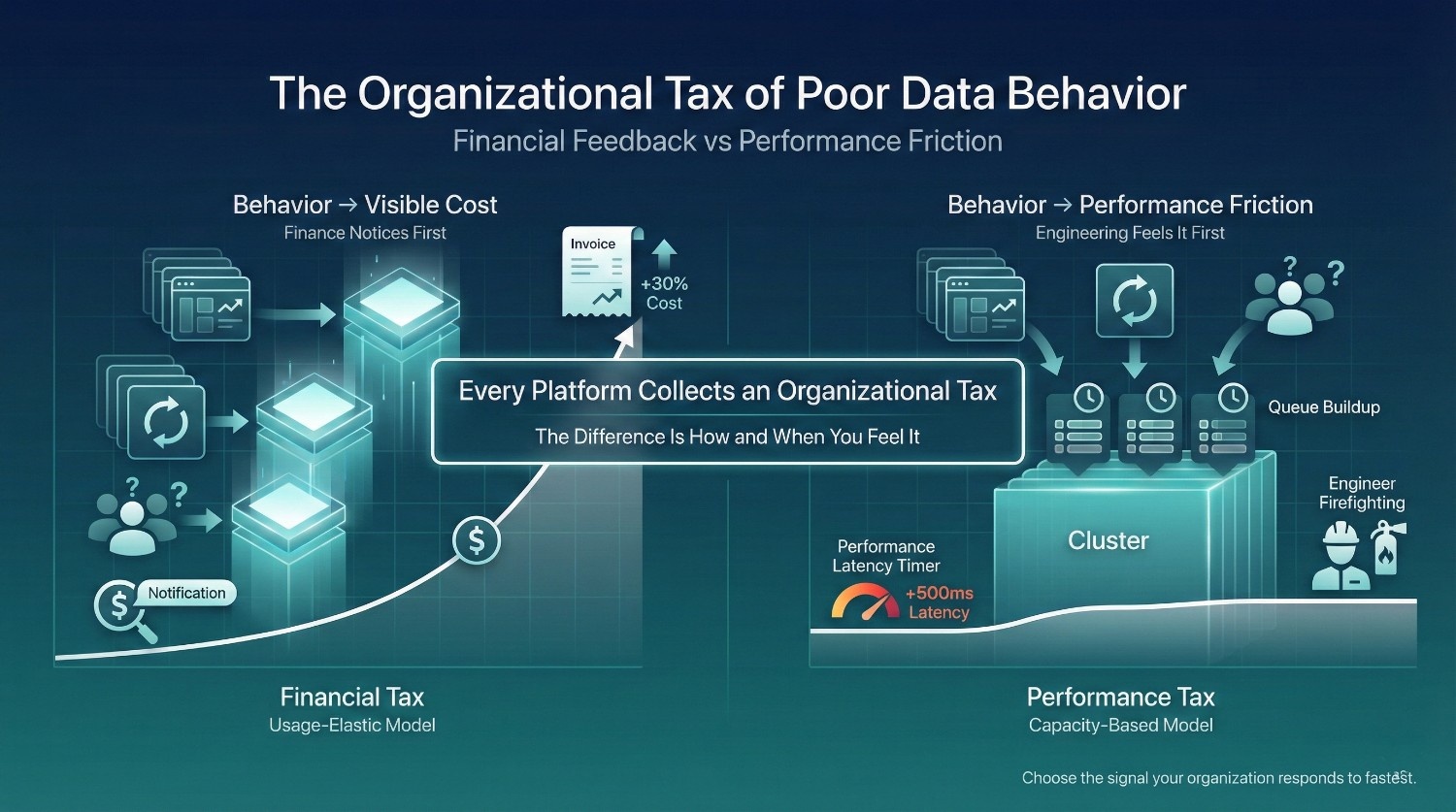

The Organizational Tax

Every large-scale data platform imposes a tax on inefficient behavior over time.

The difference between Snowflake and Redshift is who feels that tax first, and how quickly it becomes visible.

Snowflake: Bad Behavior → Higher Snowflake Cost

In Snowflake, inefficient behavior is often translated directly into increased spend.

Examples:

- Duplicate dashboards recomputing the same logic

- Analysts re-running similar queries repeatedly

- Shared warehouses staying active due to constant concurrency

- Migration validation and backfills running longer than planned

The result:

- Snowflake cost often rises quickly once behavior changes

- Finance sees the impact on the invoice

- Leadership asks questions early

This creates fast financial feedback.

Snowflake doesn’t tolerate ambiguity quietly.

It surfaces discipline problems as cost.

That can feel painful, but it’s also informative.

Redshift: Bad Behavior → Slower Performance

In Redshift, the same behaviors typically manifest differently.

Examples:

- Duplicate reporting logic increases queue contention

- Ad-hoc queries block scheduled workloads

- Inefficient pipelines consume shared capacity

- Backfills require off-hours scheduling

The result:

- Queries slow down

- Dashboards lag

- Engineers firefight performance issues

- Business teams feel friction

But:

- The invoice barely changes

- Finance sees stability

- Cost issues remain invisible

Redshift absorbs bad behavior as performance debt rather than immediate financial signals.

Who Actually Pays the Tax?

This is the key organizational difference in how cost feedback is experienced.

With Snowflake:

- Finance pays attention first

- Cost becomes the forcing function

- Governance conversations happen earlier

With Redshift:

- Engineers pay first

- Productivity erodes quietly

- Finance only notices when a resize or migration is required

Neither outcome is free.

Snowflake externalizes the cost of inefficient behavior through spend visibility.

Redshift internalizes it within shared infrastructure constraints.

Why Snowflake Surfaces Discipline Problems Faster

Snowflake is unforgiving by design:

- Elastic compute turns behavior into spend

- Per-second billing makes repetition visible

- Easy scaling removes friction that used to hide inefficiency

This doesn’t create bad behavior.

It reveals existing behavior patterns more quickly.

Organizations with:

- Clear ownership

- Shared definitions

- Disciplined usage

see Snowflake cost stabilize as governance practices mature.

Organizations without those traits:

- See rapid cost growth

- Experience increased finance and engineering tension

- Blame the platform instead of the behavior

The Executive-Level Insight

Snowflake and Redshift don’t eliminate the organizational tax.

They collect it differently.

Snowflake collects it primarily in direct financial impact.

Redshift collects it in time, friction, and lost opportunity.

At scale, the question is not:

“Which platform is cheaper?”

It’s:

“Which kind of pain does our organization notice, and respond to, faster?”

Because the platform that surfaces problems early gives you a chance to fix them.

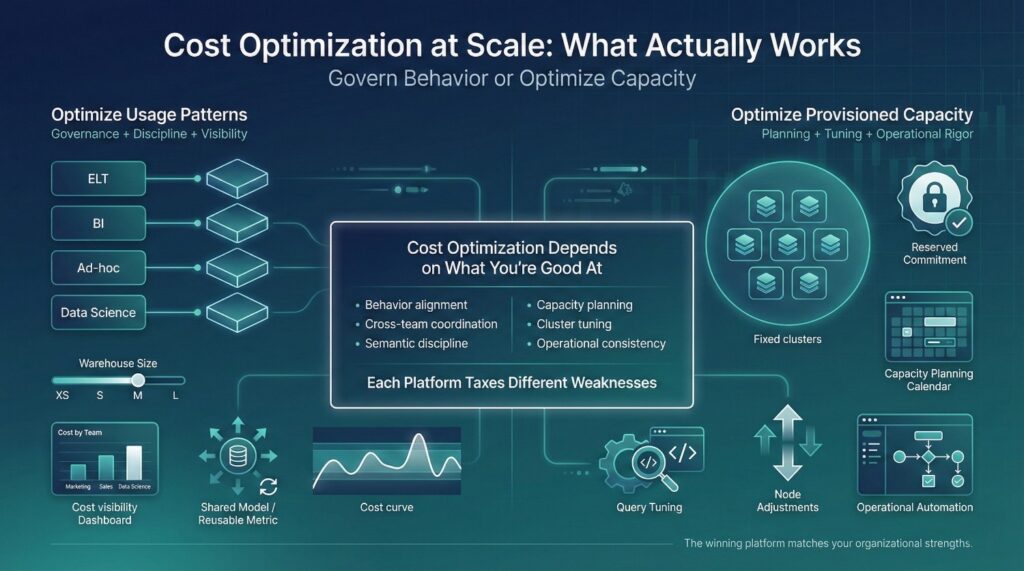

Cost Optimization at Scale: What Actually Works

At scale, cost optimization is rarely about clever tricks or one-time tuning alone.

It’s about aligning platform mechanics with organizational behavior.

Industry FinOps research shows that sustained cost optimization success depends more on aligning incentives and ownership than on isolated technical tuning.

Snowflake and Redshift both support cost optimization, but the effort shows up in very different places.

Snowflake: Optimize Behavior First

Snowflake tends to reward organizations that are willing to shape how data is used.

The most effective levers are:

Workload isolation

- Separate warehouses for ELT, BI, ad-hoc analysis, and data science

- Prevents one workload from disproportionately inflating cost for all others

- Enables attribution and accountability

Warehouse right-sizing

- Match warehouse size to workload needs

- Avoid “just in case” sizing

- Revisit sizes as usage patterns stabilize

Guardrails and visibility

- Spend visibility by warehouse or team

- Alerts on unusual behavior, not punishment

- Shared dashboards that explain cost drivers
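
An alert on unusual behavior can start very simply. The sketch below flags days whose spend exceeds a trailing mean plus a multiple of the trailing standard deviation. The window, the multiplier, and the sample figures are all assumptions for illustration, and the input is a plain list of daily credits per warehouse rather than any particular platform export.

```python
from statistics import mean, stdev


def unusual_days(daily_credits: list[float], window: int = 7, k: float = 3.0) -> list[int]:
    """Indexes of days whose spend exceeds trailing mean + k * trailing stdev."""
    flagged = []
    for i in range(window, len(daily_credits)):
        history = daily_credits[i - window:i]
        threshold = mean(history) + k * stdev(history)
        if daily_credits[i] > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily credits for one warehouse; day 7 is a backfill nobody announced.
usage = [10, 11, 9, 10, 12, 10, 11, 40, 10]
print(unusual_days(usage))  # [7]
```

The output is a prompt for a conversation with the warehouse owner, in keeping with the point above that alerts are about visibility, not punishment.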

Semantic discipline to reduce waste

- Shared metrics and definitions

- Reusable models instead of duplicated logic

- Fewer re-runs caused by distrust or ambiguity

In Snowflake, cost optimization is primarily a governance and modeling exercise rather than a hardware-focused one.

Redshift: Optimize Infrastructure and Operations

Redshift optimization focuses primarily on managing provisioned capacity efficiently.

Key levers include:

Reserved instance strategy

- Commit to long-term capacity to lower unit cost

- Accept some idle spend in exchange for predictability

Cluster right-sizing

- Regularly revisit node types and counts

- Balance peak demand against average utilization

Query tuning

- Optimize distribution styles and sort keys

- Reduce contention through query design and workload management configuration

Operational automation

- Automate maintenance, scaling, and monitoring

- Reduce human effort required to keep clusters healthy

In Redshift, optimization is an engineering and operations discipline that minimizes waste inside fixed capacity. AWS architectural guidance frequently emphasizes that right-sizing and reservation strategies only deliver savings when workloads remain stable over long periods.

Why Optimization Effort Feels So Different

This is where many high-level comparisons lose practical accuracy.

Snowflake optimization often feels harder because:

- It requires cross-team coordination

- It forces conversations about behavior and ownership

- Cost shows up immediately

Redshift optimization often feels easier initially because:

- Cost is capped by design

- Inefficiency often hides in performance degradation rather than spend

- Teams adapt by waiting or working around issues

But over time:

- Snowflake optimization tends to reduce both cost and confusion

- Redshift optimization often reduces cost but can preserve inefficiency in how work is done

The Scale-Level Insight

Both platforms can be cost-effective at scale.

The difference is what kind of work you must be good at to keep them that way. Across large enterprises, cost optimization failures most often occur when organizations attempt to apply infrastructure-era optimization habits to usage-driven platforms.

Snowflake requires:

- Governance maturity

- Semantic discipline

- Willingness to confront usage patterns

Redshift requires:

- Capacity planning expertise

- Operational rigor

- Tolerance for some inefficiency

Optimization effort differs, not because one tool is better, but because each platform taxes different weaknesses.

At scale, the winning platform is the one your organization knows how to optimize realistically and consistently.

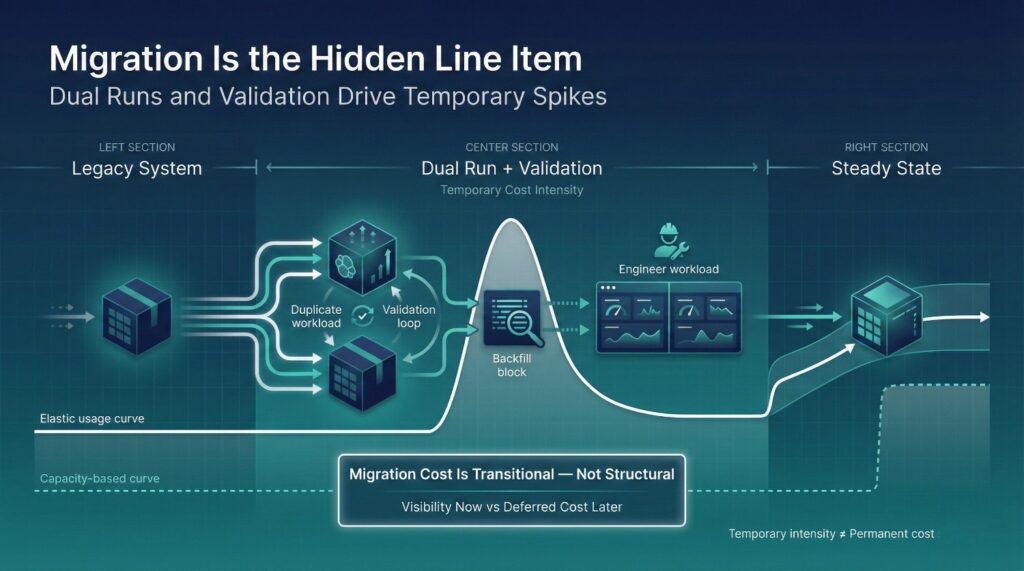

Migration Cost Reality (The Hidden Line Item)

One of the most misleading Snowflake vs Redshift cost debates ignores an entire phase of spend: migration itself.

Not steady-state.

Not “after optimization.”

But the messy, expensive middle.

Industry migration postmortems consistently show that dual-run and validation phases account for a disproportionate share of total migration cost, regardless of target platform.

This is where many executives conclude, incorrectly, that Snowflake is “more expensive.”

Dual-Run Costs Are Real (and Inevitable)

During migration, organizations almost always run:

- The legacy warehouse

- The new platform

- In parallel

This means:

- Every report is computed twice

- Every pipeline runs in two systems

- Every discrepancy triggers additional queries

Snowflake makes these costs more visible because compute is billed per use.

Redshift often obscures them because provisioned capacity is already paid for.

The cost exists in both cases.

Only one shows it clearly.

Validation Workloads Multiply Spend

Validation is not a single step, it’s a phase.

Typical validation behavior includes:

- Re-running reports to reconcile numbers

- Testing multiple versions of logic

- Investigating edge cases and historical anomalies

These queries are:

- Repetitive

- Heavy

- Time-bound

In Snowflake, validation typically appears as a temporary cost spike.

In Redshift, it often manifests as slower performance and engineer time.

Neither is free.

Large enterprise migration programs often report that validation-related compute and engineering effort can exceed steady-state optimization savings if not time-boxed explicitly.

Rewrites and Refactors Are Not Optional

Migrations rarely move logic as-is.

They involve:

- Rewriting legacy SQL

- Refactoring brittle transformations

- Redesigning schemas and models

This work creates inefficiency temporarily:

- Backfills are required

- Pipelines re-run more often

- Warehouses are sized larger than steady-state

Snowflake exposes this inefficiency primarily as increased spend.

Redshift absorbs much of this inefficiency as operational friction.

FinOps guidance emphasizes that migration spend should be labeled and tracked separately from steady-state platform cost to avoid misleading early conclusions.
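
Labeling can be as lightweight as a phase tag on each cost record. The sketch below assumes cost records have already been exported as dictionaries with a hypothetical `phase` field; the warehouse names and amounts are invented for illustration.

```python
from collections import defaultdict


def spend_by_phase(records: list[dict]) -> dict[str, float]:
    """Total cost per labeled phase, so migration spend is not read as run rate."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record["phase"]] += record["cost"]
    return dict(totals)


# Hypothetical monthly cost records, tagged at the warehouse level.
records = [
    {"warehouse": "ELT_WH", "phase": "steady_state", "cost": 400.0},
    {"warehouse": "MIGRATION_VALIDATION_WH", "phase": "migration", "cost": 900.0},
    {"warehouse": "BI_WH", "phase": "steady_state", "cost": 250.0},
    {"warehouse": "BACKFILL_WH", "phase": "migration", "cost": 650.0},
]

print(spend_by_phase(records))  # {'steady_state': 650.0, 'migration': 1550.0}
```

Reported this way, the invoice in this example is about 70% transitional, which is a very different conversation from "the platform costs 2,200 a month."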

This is where perception breaks.

Early Snowflake invoices reflect:

- Migration intensity

- Validation uncertainty

- Parallel systems

But finance often interprets these as:

- The “new normal”

- A permanent cost problem

- Evidence the platform is too expensive

In reality, these costs are transitional if the migration is time-boxed and governed.

Without that context, Snowflake looks expensive early.

Redshift looks stable, until the bill comes later as a resize, redesign, or migration out.

Why Snowflake Migrations Often Look More Expensive Early

Snowflake does not obscure migration-related inefficiency.

It prices:

- Every re-run

- Every validation

- Every parallel workload

That makes early cost:

- Higher

- More volatile

- More visible

But it also makes it:

- Explainable

- Auditable

- Reducible over time

Redshift often delays cost visibility rather than eliminating it.

Snowflake accelerates it.

The Executive-Level Insight

Migration cost is not a platform flaw.

It’s the price of changing systems.

Snowflake migrations often look more expensive early because:

- They surface inefficiency immediately

- They don’t amortize pain silently

- They force discipline sooner

The real risk is not high migration cost.

It’s misinterpreting temporary cost as permanent reality.

At scale, the organizations that win are the ones that:

- Expect migration cost

- Label it clearly

- Time-box it aggressively

- Optimize after, not during, validation
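Time-boxing is easier to enforce when it is checked against labeled spend. The sketch below assumes migration spend is already labeled by month and that a planned end date exists. The date, the amounts, and the `spend_outside_time_box` helper are hypothetical.

```python
from datetime import date

# Hypothetical migration plan and monthly migration-labeled spend (illustrative).
TIME_BOX_END = date(2024, 3, 31)
migration_spend = {
    date(2024, 1, 1): 4200.0,
    date(2024, 2, 1): 3900.0,
    date(2024, 3, 1): 2500.0,
    date(2024, 4, 1): 1800.0,  # spend after the planned end of the time box
}


def spend_outside_time_box(spend_by_month, time_box_end):
    """Return migration-labeled spend for months starting after the time box ends."""
    return {
        month: cost
        for month, cost in spend_by_month.items()
        if month > time_box_end and cost > 0
    }


overruns = spend_outside_time_box(migration_spend, TIME_BOX_END)
for month, cost in sorted(overruns.items()):
    print(f"{month:%Y-%m}: {cost:.0f} of migration spend past the time box")
```

Any month this check flags is a prompt either to close out the migration or to relabel the work as steady-state spend.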

Snowflake doesn’t make migration more expensive.

It makes migration cost harder to ignore.

Cost-efficiency comparisons that exclude migration dynamics systematically favor capacity-based platforms, because those platforms defer migration-related inefficiency rather than eliminate it.

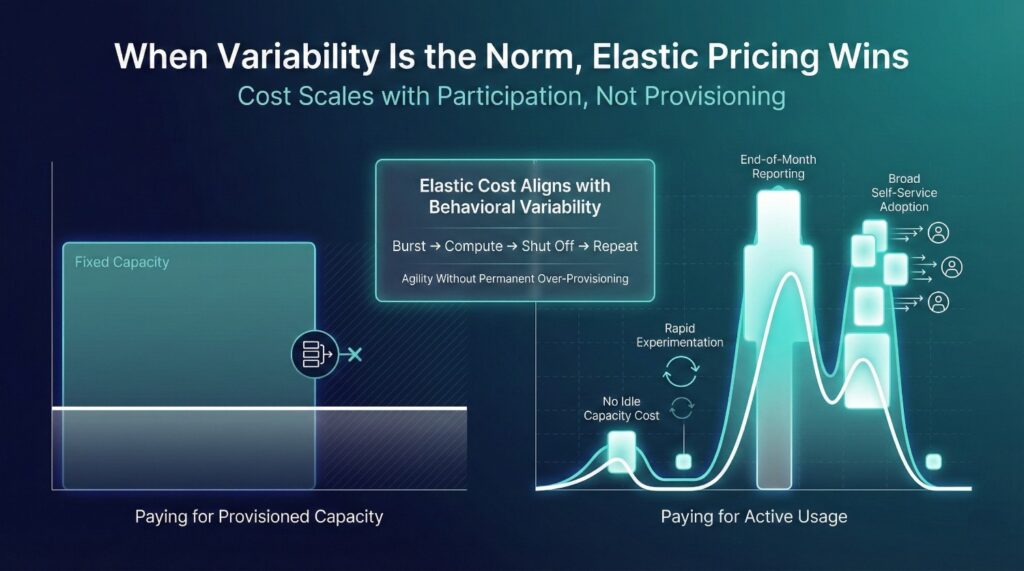

When Snowflake Cost Wins at Scale

Snowflake tends to perform better on cost when variability is the dominant characteristic of the organization.

Not variability in data volume, but in how people work with data.

Snowflake’s pricing model is designed to reward flexibility and penalize waste only when it happens. That makes it a strong fit in specific large-scale conditions.

Highly Variable Workloads

Snowflake performs best financially when workloads are:

- Bursty rather than constant

- Spiky rather than evenly distributed

- Unpredictable in timing and intensity

Examples include:

- End-of-month reporting

- Campaign analysis

- Incident investigations

- Seasonal analytics

In these cases:

- Compute runs hard when needed

- Shuts off when not

- Idle capacity is not paid for

Capacity-based systems struggle here. Elastic pricing thrives.
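The trade-off can be made concrete with simple arithmetic. The sketch below assumes usage-based compute costs more per hour but is billed only while it runs, while fixed capacity costs less per hour but is billed for every hour. Both rates are hypothetical and are not actual Snowflake or Redshift prices. The comparison also assumes, as a simplification, that an hour of either option does the same amount of work.

```python
HOURS_PER_MONTH = 730  # approximate number of hours in a month


def usage_based_cost(active_hours, rate_per_active_hour):
    """Cost when compute is billed only while it runs."""
    return active_hours * rate_per_active_hour


def capacity_based_cost(rate_per_provisioned_hour, hours=HOURS_PER_MONTH):
    """Cost when capacity is billed for every hour, used or not."""
    return hours * rate_per_provisioned_hour


def break_even_utilization(rate_per_active_hour, rate_per_provisioned_hour):
    """Fraction of the month above which fixed capacity becomes cheaper."""
    return rate_per_provisioned_hour / rate_per_active_hour


# Hypothetical rates, for illustration only.
usage_rate = 16.0     # cost per active hour
capacity_rate = 8.0   # cost per provisioned hour

threshold = break_even_utilization(usage_rate, capacity_rate)
print(f"Break-even utilization: {threshold:.0%}")

for utilization in (0.2, 0.8):
    active_hours = utilization * HOURS_PER_MONTH
    usage = usage_based_cost(active_hours, usage_rate)
    fixed = capacity_based_cost(capacity_rate)
    cheaper = "usage-based" if usage < fixed else "capacity-based"
    print(f"{utilization:.0%} busy: usage={usage:.0f}, fixed={fixed:.0f} -> {cheaper}")
```

Under these assumed rates, bursty workloads that keep compute busy for only a small share of the month fall below the break-even point, and steadily busy workloads fall above it.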

Many Analytical Users

Snowflake scales economically when:

- Large numbers of users query data independently

- Multiple teams explore data in parallel

- Self-service is a core goal

Because Snowflake:

- Supports high concurrency cleanly

- Avoids queueing bottlenecks

- Lets teams work without waiting

Cost increases, but it maps directly to usage and value.

For organizations that expect broad analytical adoption, Snowflake’s model aligns cost with participation.

Rapid Experimentation

Snowflake favors environments where:

- Questions change frequently

- Models evolve quickly

- Hypotheses are tested and discarded

In these environments:

- Paying only when compute runs is an advantage

- Short-lived experiments don’t require permanent capacity

- Scaling up briefly is cheaper than over-provisioning forever

Snowflake charges for curiosity, but only while it’s active.

When the Business Values Agility Over Predictability

Snowflake is often a strong cost fit when the organization:

- Accepts some spend variability

- Values speed of insight over fixed budgets

- Prefers governance to come from behavior, not capacity

Snowflake is easier to justify at scale for finance teams that:

- Are comfortable with forecast ranges

- Review cost trends, not just totals

- Partner closely with data leadership

The Scale-Level Conclusion

Snowflake cost wins at scale when:

- Usage is uneven

- Adoption is broad

- Work changes faster than infrastructure plans

It is not the cheapest option for every organization.

But for companies where:

- Flexibility is strategic

- Analytics is a competitive advantage

- Waiting for capacity is unacceptable

Snowflake’s cost model aligns with how value is actually created.

At scale, Snowflake doesn’t aim to minimize absolute spend.

It optimizes spend to support agility.

That distinction matters.

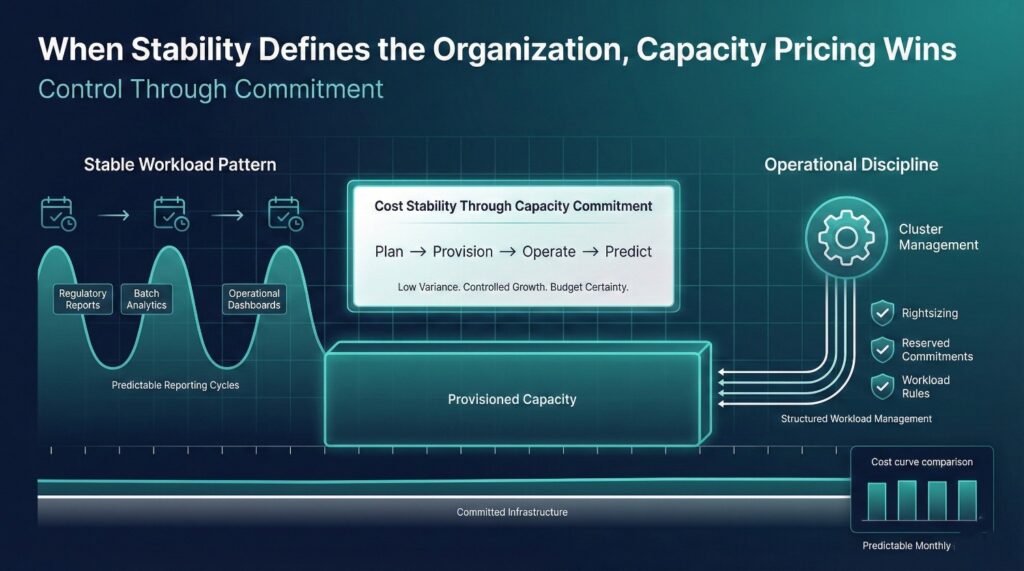

When Redshift Cost Wins at Scale

Redshift tends to perform better on cost when stability and predictability outweigh the need for elasticity.

Its pricing model rewards organizations that are willing, and able, to plan capacity in advance and operate within well-defined usage patterns.

Stable Workloads

Redshift is a strong cost fit when workloads are:

- Consistent day to day

- Similar in shape week to week

- Unlikely to spike unexpectedly

Examples include:

- Standardized operational reporting

- Fixed regulatory reports

- Batch analytics with known schedules

In these environments:

- Capacity can be sized once and reused efficiently

- Idle time is limited

- Over-provisioning risk is manageable

When usage doesn’t fluctuate much, capacity-based pricing tends to work in your favor.

Predictable Usage Patterns

Redshift performs well financially when:

- Query frequency is known

- Concurrency is controlled

- Few surprise workloads appear

This predictability allows:

- Confident reserved instance commitments

- Minimal resizing events

- Stable month-to-month invoices

For finance teams that value consistency, this reduces planning anxiety, even if some capacity goes unused.

Strong Operations Discipline

Large-scale Redshift deployments frequently cite operational process maturity, rather than pricing mechanics, as the primary determinant of long-term cost efficiency.

Redshift cost efficiency depends heavily on sustained operational rigor.

Organizations that succeed with Redshift at scale typically have:

- Dedicated teams managing clusters

- Regular rightsizing cycles

- Clear workload management rules

- Automation around maintenance and scaling

This discipline prevents:

- Silent performance degradation

- Accidental overgrowth

- Unnoticed cost creep

Without it, Redshift’s apparent predictability can mask growing inefficiency over time.

Finance Prefers Fixed Cost Envelopes

Redshift aligns well when finance leadership:

- Prefers committed spend over variable invoices

- Values budget certainty over elasticity

- Is comfortable paying for unused capacity in exchange for stability

In these cases:

- Predictable invoices reduce friction

- Planning cycles are simpler

- Variance explanations are easier

The tradeoff is accepted upfront:

“Some inefficiency is tolerated to avoid surprise.”

The Scale-Level Conclusion

Redshift cost wins at scale when:

- Workloads are steady

- Usage patterns are known

- Operational discipline is strong

- Budget predictability is prioritized

It is less forgiving of change, but very comfortable with routine.

At scale, Redshift doesn’t primarily optimize for agility.

It optimizes for control through commitment.

For organizations that operate best within fixed envelopes, that can be a feature, not a flaw.

The Question Leaders Should Ask Instead

At scale, the Snowflake vs Redshift debate breaks down because leaders ask the wrong question.

They ask:

“Which platform is cheaper?”

That question assumes cost is an inherent property of the technology itself.

It isn’t.

Cost is the outcome of how an organization behaves on top of the technology.

The Questions That Actually Matter

Leaders get better answers when they reframe the decision around maturity and behavior.

Which model matches our usage maturity?

- Do teams reuse shared models or duplicate logic?

- Is self-service structured or free-form?

- Are usage patterns intentional or accidental?

Snowflake tends to reward mature usage patterns.

Redshift tolerates immature usage longer, but charges later.

Can we govern behavior effectively?

- Do we have clear ownership of metrics and cost?

- Can we set guardrails without slowing teams down?

- Are finance and technology aligned on tradeoffs?

If governance is weak, Snowflake will surface that weakness more quickly.

Redshift will often hide it until performance degradation becomes unacceptable.

Do we value elasticity or predictability more?

- Is speed to insight strategic?

- Are workloads variable or stable?

- Is spend variance acceptable if value increases?

Snowflake primarily optimizes for elasticity.

Redshift primarily optimizes for predictability.

Neither is inherently superior. Each fits different priorities. Across cloud data platforms, governance maturity is a stronger predictor of cost outcomes than architectural choice alone.

Why This Reframing Changes the Conversation

When leaders focus only on price:

- Cost debates become defensive

- Platform choices become politicized

- Optimization becomes reactive

When leaders focus on fit:

- Cost becomes a governance problem

- Tradeoffs become explicit

- Spend becomes explainable

Snowflake cost becomes less likely to feel like a surprise.

Redshift cost becomes less likely to feel artificially “safe.”

The Executive-Level Takeaway

Snowflake vs Redshift is not only a pricing decision.

It’s an organizational design decision.

The right platform is the one whose cost model:

- Aligns with how your teams actually work

- Matches your tolerance for variability

- Exposes problems you are willing to fix

When leaders ask the right questions, Snowflake cost becomes a strategic choice, not a bill shock. Executive reviews of data platform cost failures consistently show that misalignment between organizational behavior and cost model is the root cause of perceived platform inefficiency.

Final Thoughts

At scale, Snowflake vs Redshift is not a technical comparison.

It’s a statement about how an organization chooses to pay for data work.

Each platform encodes a different cost philosophy.

Snowflake Cost Rewards

Snowflake cost aligns with organizations that value:

Elasticity

Compute expands and contracts with demand. You pay for activity, not for assumptions about peak usage.

Discipline

Inefficient behavior becomes visible quickly. Duplicate work, uncontrolled self-service, and weak semantics show up as spend.

Transparency

Cost reflects real usage patterns. The bill is noisy, but explainable, if governance exists.

Snowflake works best when teams are willing to:

- Govern behavior

- Accept some variability

- Optimize continuously

It does not attempt to hide organizational inefficiencies.

It exposes them.

Redshift Cost Rewards

Redshift cost aligns with organizations that value:

Stability

Spend is anchored in fixed capacity. Month-to-month invoices are predictable.

Planning

Cost is decided upfront through sizing and commitments, not day-to-day usage.

Operational control

Engineering teams manage performance and efficiency inside a known envelope.

Redshift works best when organizations:

- Have stable workloads

- Can plan capacity confidently

- Are comfortable paying for unused headroom

It absorbs inefficiency quietly, until performance forces a step change.

The Real Decision Leaders Must Make

This is not a choice between:

- Cheap vs expensive

- Cloud-native vs legacy

- Modern vs traditional

It’s a choice between:

- Paying for actual behavior

- Or paying for assumed capacity

Snowflake charges you when people actively use data.

Redshift charges you based on provisioned capacity whether it is fully utilized or not.

Both models are valid.

Both fail when mismatched to organizational reality.

Final Takeaway

At scale, the “right” warehouse is not the one with the lowest theoretical cost.

It’s the one whose cost model:

- Matches how your teams actually work

- Aligns with your governance maturity

- Exposes the problems you are prepared to fix

When cost philosophy and organizational behavior align, Snowflake cost stops being a surprise, and Redshift cost stops being a crutch.

That alignment, not price, is what determines success at scale.

Frequently Asked Questions (FAQ)

No. Snowflake often looks more expensive because it prices usage explicitly. Redshift can appear cheaper because cost is prepaid as capacity. At scale, total cost depends on behavior (how often teams query, how coordinated they are, and how well usage is governed), not on the platform alone.

Because Snowflake cost changes with usage behavior. New teams, more dashboards, higher concurrency, and validation work all show up immediately on the bill. Redshift hides these effects as performance degradation until a resize or redesign is required.

Redshift charges for capacity whether it’s used or not. That creates stable invoices but hides waste in idle capacity, over-provisioning, and operational overhead. The cost is predictable, but not always optimal.

Redshift usually wins when workloads are stable, concurrency is controlled, and usage patterns change slowly. Reserved instances and fixed clusters align well with predictable demand.

Snowflake typically performs better when workloads spike unpredictably, many users query independently, or experimentation is common. Paying only when compute runs avoids long-term idle capacity costs.

Because Snowflake exposes migration activity clearly:

- Dual runs

- Validation queries

- Backfills and reprocessing

These are typically temporary but operationally intense. Redshift absorbs much of this as performance friction instead of visible cost.

Primarily organizational rather than technological. Snowflake cost reflects:

- Trust in shared metrics

- Degree of duplication

- Discipline in self-service

- Clarity of ownership

Weak governance often shows up as higher Snowflake cost.

Yes. Redshift often converts inefficient behavior into slower performance instead of higher bills. Cost pain arrives later as a forced resize, redesign, or migration, often in larger steps.

Neither by default, just differently.

- Snowflake requires behavioral governance (guardrails, ownership, visibility).

- Redshift requires operational governance (capacity planning, tuning, automation).

The easier platform is the one that matches your organization’s strengths.

Not unit price. CFOs should focus on:

- Cost predictability vs elasticity

- Ability to attribute spend to behavior

- Governance maturity

- Tolerance for variability

Snowflake cost is easier to explain when usage is visible. Redshift cost is easier to budget when usage is stable.

CTOs should assess:

- How quickly workloads change

- How decentralized analytics will become

- Whether teams can reuse models and definitions

- Whether governance can keep pace with adoption

Snowflake tends to amplify agility. Redshift reinforces planning.

At scale, Snowflake vs Redshift is not a pricing debate; it’s an organizational design decision.

When cost model and behavior align, Snowflake cost becomes explainable and Redshift cost becomes intentional. When they don’t, both platforms become expensive in different, and avoidable, ways.