Introduction

The Data Source Explosion Is Real

Modern enterprises often don’t have a data problem. They struggle more with fragmentation and inconsistency across systems:

- Dozens of SaaS tools across marketing, sales, finance, and HR, each with its own data model

- IoT devices streaming telemetry and sensor data 24/7

- Legacy on-prem databases that nobody wants to touch but everyone depends on

- Multi-cloud footprints spanning AWS, Azure, GCP, often all three

- Many mid-size companies manage data across dozens and sometimes hundreds of disconnected systems

This level of complexity often drives demand for data integration consulting, because no single tool alone resolves architectural fragmentation.

Why Most Organizations Are Struggling

Despite significant investment in data infrastructure, many companies still struggle to produce a clean, consistently trusted, unified operational view. Why?

- Point-to-point connections duct-taped together with no overarching strategy

- ETL jobs built years ago that nobody fully understands anymore

- CSV exports still being emailed between departments (yes, in 2025)

- The “single source of truth” everyone references in meetings? It doesn’t actually exist.

The data is widely distributed across systems, and insights remain difficult to operationalize. Without architectural thinking or experienced data integration consulting, most teams don’t even know where to start fixing it.

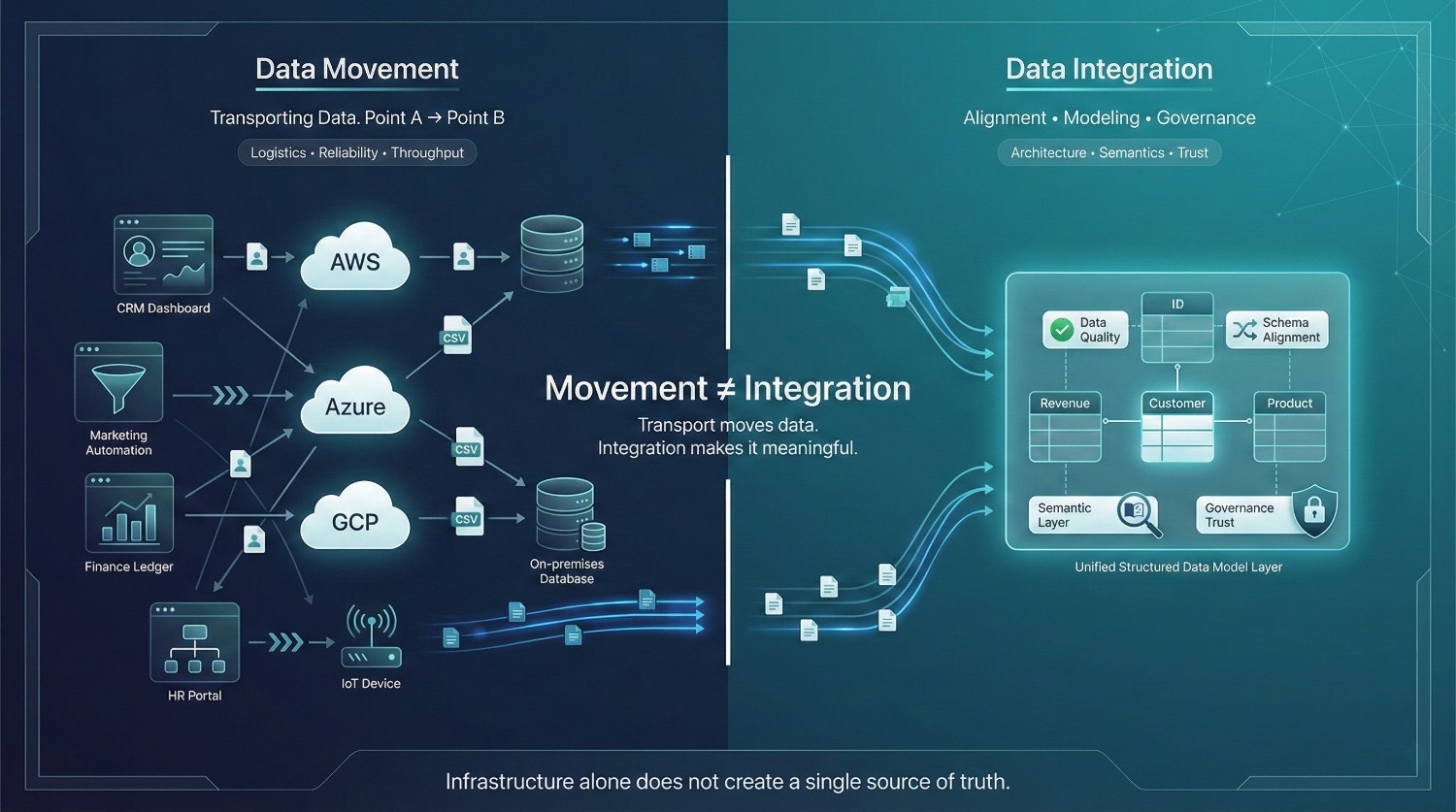

The Dangerous Conflation: Movement ≠ Integration

Here’s the root of the problem: data movement and data integration get treated as the same thing. They’re not. This confusion shows up everywhere:

- Vendor pitches that promise “integration” but only deliver replication

- Internal strategy docs that use both terms interchangeably

- Tool selections based on transport speed when the real need is semantic unification

A good data integration consulting partner will flag this distinction on day one, because every downstream decision depends on getting it right.

The Cost of Getting It Wrong

Blurring this distinction isn’t just a terminology issue; it’s a budget-burning, project-killing mistake:

- Failed migrations: teams planned for transport but not transformation

- Conflicting dashboards: data was moved but never reconciled or standardized

- Silent pipeline failures: no one accounted for schema changes or data quality rules

- Siloed insights: departments have their own “truth,” and none of them match

These aren’t edge cases. They’re the default outcome when organizations skip strategic planning and jump straight to tooling, which is the exact scenario data integration consulting exists to prevent.

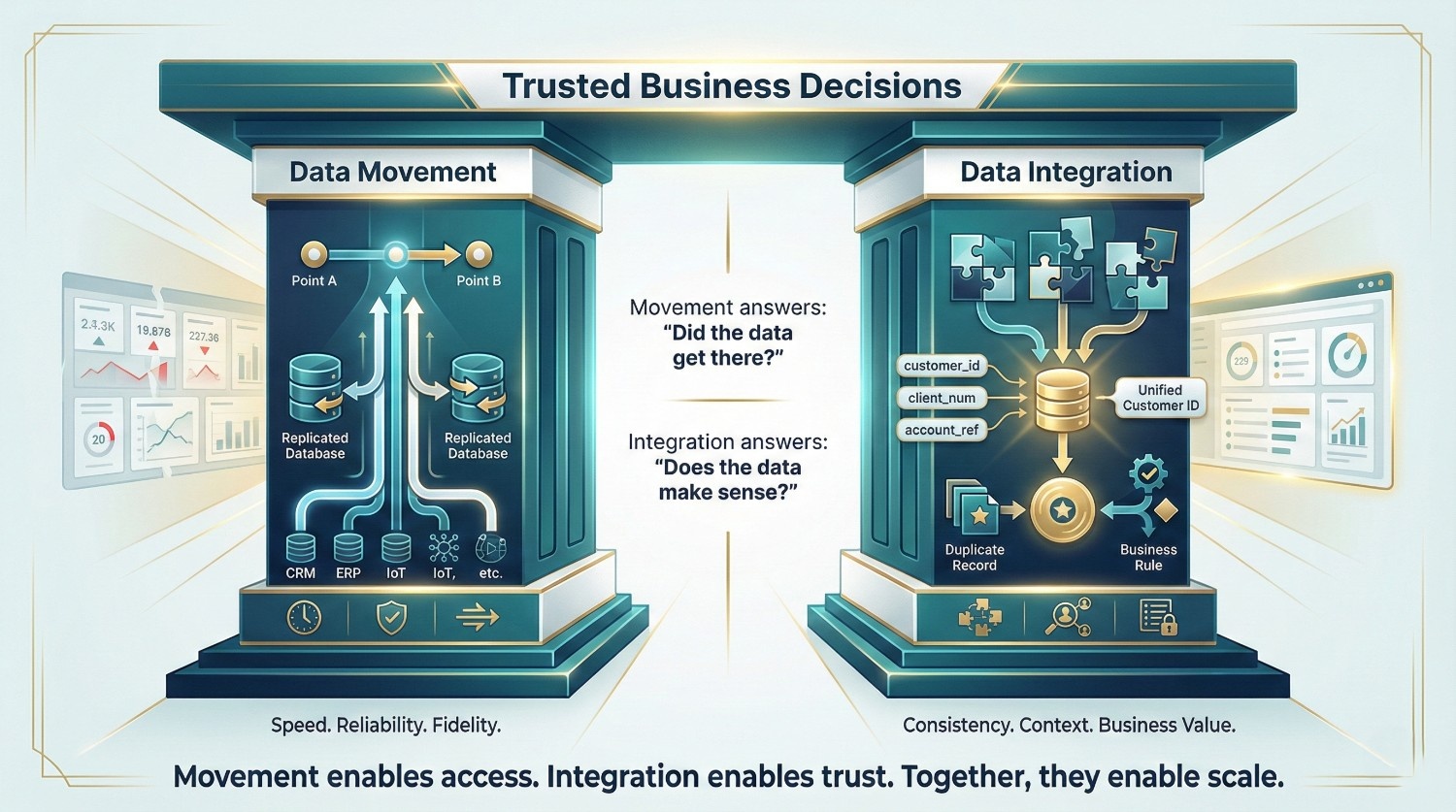

The Core Thesis

Data movement and data integration are fundamentally different concepts: they differ in goals, methods, technical requirements, and business outcomes.

- Data movement refers to transporting data from Point A to Point B. It is primarily a logistics and reliability problem.

- Data integration involves aligning, modeling, standardizing, and governing data so it becomes meaningful across an ecosystem. It is primarily an architectural and semantic problem.

You need both. But confusing one for the other is how companies spend millions on infrastructure that still can’t answer basic business questions.

What You'll Walk Away With

By the end of this post, you’ll clearly understand:

- What data movement actually involves, and where it stops

- What data integration demands beyond simple transport

- Where the two overlap, and where they sharply diverge

- How to evaluate which one your organization needs to prioritize right now

- Why data integration consulting is the starting point for getting this right, not an afterthought

Let’s get into it.

What Is Data Movement?

Definition and Core Concept

At its simplest, data movement is the physical or logical transfer of data from one system, location, or environment to another.

That’s it. No transformation. No enrichment. No interpretation. Just transport. Data movement solves availability. It does not solve alignment. Think of it this way:

Analogy: Data movement is like shipping a sealed package from one warehouse to another. The package arrives at the destination, but nobody opens it, inspects it, or modifies what’s inside.

Key characteristics:

- The focus is entirely on getting data from Point A to Point B

- The structure and format are not altered during transit

- It’s a prerequisite for data integration, but it is not integration itself

Confusing these two stages is how organizations end up with modern pipelines and outdated decision logic. This is one of the first distinctions any data integration consulting engagement will clarify, because most organizations assume that once data has been moved, the job is done. It’s not.

Common Methods and Techniques

Data movement isn’t one-size-fits-all. The right method depends on your volume, velocity, latency needs, and source/target architecture.

ETL (Extract, Transform, Load)

- Extracts data from source systems

- Transforms it in a staging area (cleaning, mapping, formatting)

- Loads the transformed data into the target system

- Best for: structured, batch-oriented workflows with well-defined schemas

ELT (Extract, Load, Transform)

- Loads raw data directly into the target system first

- Transforms it inside the destination (using the target’s compute power)

- Best for: cloud-native environments like Snowflake, BigQuery, or Databricks where compute is cheap and scalable

The real architectural decision is not ETL versus ELT. It is whether transformation logic is centralized, governed, and versioned over time.
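To make the ELT pattern concrete, here is a minimal sketch that uses Python’s built-in sqlite3 module as a stand-in for a cloud warehouse. The table name raw_orders, the view orders_clean, and the sample rows are made up for illustration. The point is only that raw data lands first and the transformation lives in the destination as SQL that can be versioned and reviewed.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse (an assumption for this sketch).
warehouse = sqlite3.connect(":memory:")

# Extract + Load: land the raw rows exactly as the source delivered them.
warehouse.execute(
    "CREATE TABLE raw_orders (id INTEGER, amount_cents INTEGER, country TEXT)"
)
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 1250, " us"), (2, 800, "US "), (3, 4300, "de")],
)

# Transform: the cleanup logic lives inside the destination as a SQL view.
warehouse.execute(
    """
    CREATE VIEW orders_clean AS
    SELECT id,
           amount_cents / 100.0 AS amount,
           UPPER(TRIM(country)) AS country
    FROM raw_orders
    """
)

revenue_by_country = warehouse.execute(
    "SELECT country, SUM(amount) FROM orders_clean "
    "GROUP BY country ORDER BY country"
).fetchall()
print(revenue_by_country)
```

Because the view definition is plain SQL, it can sit in version control next to every other transformation, which is the governance question that matters more than the ETL or ELT label.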

Data Replication

- Creates and maintains copies of data across multiple systems

- Can run in near real-time or on a scheduled batch basis

- Best for: keeping production and analytics systems in sync without impacting source performance

Change Data Capture (CDC)

- Detects and moves only the changes (inserts, updates, and deletes) since the last sync

- Dramatically reduces data transfer volume

- Best for: high-frequency sync scenarios where moving entire datasets every time is impractical
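As a rough illustration of the CDC idea, the sketch below applies a small batch of change events to an in-memory replica instead of recopying the whole table. The event shape (op, key, row) is a hypothetical format chosen for this example; real CDC tools each define their own.

```python
from typing import Any

Row = dict[str, Any]


def apply_changes(target: dict[int, Row], changes: list[dict[str, Any]]) -> dict[int, Row]:
    """Apply an ordered batch of change events to an in-memory replica."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op == "insert":
            target[key] = dict(change["row"])
        elif op == "update":
            # Merge only the changed columns into the existing row.
            target.setdefault(key, {}).update(change["row"])
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op!r}")
    return target


# The replica as of the last sync (sample data, not from a real system).
replica = {
    1: {"name": "Ada", "tier": "gold"},
    2: {"name": "Bob", "tier": "free"},
}

# Only three small events travel over the wire, not the full table.
changes = [
    {"op": "update", "key": 2, "row": {"tier": "silver"}},
    {"op": "insert", "key": 3, "row": {"name": "Cy", "tier": "free"}},
    {"op": "delete", "key": 1},
]

apply_changes(replica, changes)
print(replica)
```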

Data Streaming

- Continuous, real-time movement of data as events occur

- Powered by tools like Apache Kafka, Amazon Kinesis, or Apache Flink

- Best for: event-driven architectures, real-time analytics, and IoT data pipelines

Bulk File Transfers

- SFTP, scheduled batch exports/imports, flat file exchanges

- The oldest method in the book, and still heavily used

- Best for: partner data exchanges, legacy system feeds, regulatory file submissions

Database Migration

- Moving entire databases from one platform to another

- Example: Oracle to PostgreSQL, on-prem SQL Server to cloud-hosted RDS

- Best for: platform modernization, cloud migration, and vendor consolidation

A skilled data integration consulting firm won’t just recommend a method; they’ll help you match the right technique to each data flow based on your specific architecture and business requirements.

Primary Goals of Data Movement

Regardless of which method you choose, every data movement initiative is measured against four core goals:

| Goal | What It Means |
|---|---|
| Reliability | Data arrives at the destination accurately and completely, no lost records, no corruption |
| Timeliness | Latency requirements are met, whether that's real-time, near real-time, or daily batch |
| Scalability | Pipelines handle growing data volumes without failures or degradation |
| Fidelity | The structure and integrity of the original data are preserved during transit |

None of these goals ensure that two departments interpreting the same dataset will reach the same conclusion. Notice what’s not on this list: meaning, context, business rules, or cross-system reconciliation. That’s the domain of data integration, not data movement.
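A fidelity check is simple enough to sketch. The example below compares a row count and an order-independent checksum between source and target. The function name table_fingerprint and the sample rows are assumptions for illustration, and a production check would usually run inside the databases themselves.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, checksum); the checksum ignores row order."""
    checksum = 0
    for row in rows:
        digest = hashlib.sha256(repr(row).encode("utf-8")).digest()
        # Summing per-row hashes makes the result independent of row order.
        checksum = (checksum + int.from_bytes(digest[:8], "big")) % 2**64
    return len(rows), checksum


source_rows = [(1, "ada@example.com"), (2, "bob@example.com")]
target_rows = [(2, "bob@example.com"), (1, "ada@example.com")]  # same rows, new order
damaged_rows = [(1, "ada@example.com")]  # one record lost in transit

arrived_intact = table_fingerprint(source_rows) == table_fingerprint(target_rows)
lost_a_record = table_fingerprint(source_rows) != table_fingerprint(damaged_rows)
print(arrived_intact, lost_a_record)
```

Note what the check cannot tell you: whether the two email columns mean the same thing in both systems. It verifies transport, nothing more.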

Real-World Use Cases

Here’s where data movement shows up in practice:

- Cloud Migration: Moving on-premises data warehouses to platforms like Snowflake, BigQuery, or Amazon Redshift, often the first phase of any modernization initiative

- Database Synchronization: Keeping production databases and analytics environments in sync so reporting doesn’t lag behind operations

- Disaster Recovery: Replicating data to a secondary location or region for failover and business continuity

- Data Lake Ingestion: Streaming raw event data into a data lake (S3, ADLS, GCS) for future processing and analysis

- Cross-Region Data Transfer: Moving data between geographic regions for compliance (e.g., GDPR data residency) or performance optimization

Each of these use cases involves moving data, but none of them inherently involve making that data usable across systems. That’s the gap that data integration consulting helps organizations identify and close. Movement increases accessibility. Integration increases reliability of interpretation.

Tools and Technologies

The data movement ecosystem is mature and crowded. Here’s a quick breakdown of the most widely used tools and where each fits best:

| Tool | Best For |
|---|---|
| Apache Kafka | Real-time event streaming at scale, high throughput, distributed architectures |
| Apache NiFi | Visual data routing and transformation, strong in government and healthcare |
| AWS DMS | Database migration within or into the AWS ecosystem |
| Azure Data Factory | Orchestrating data movement across hybrid and multi-cloud Azure environments |
| Fivetran | Automated, no-code ELT connectors for SaaS-to-warehouse pipelines |
| Airbyte | Open-source alternative to Fivetran with a growing connector library |
| Stitch | Lightweight, developer-friendly ELT for small to mid-size data teams |
| Talend | Enterprise-grade, covers both movement and integration, depending on configuration |

Throughput is a technical metric. Trust is a business metric. Data movement optimizes the former, not the latter.

A Word of Caution

Tools alone don’t solve the problem. One of the biggest mistakes organizations make is selecting a data movement tool and assuming it handles integration too. It doesn’t.

This is a core reason data integration consulting exists: to help you build the right architecture around your tools, not just pick tools and hope for the best. Most data platform failures are not caused by weak pipelines. They are caused by strong pipelines feeding inconsistent logic.

What Is Data Integration?

Definition and Core Concept

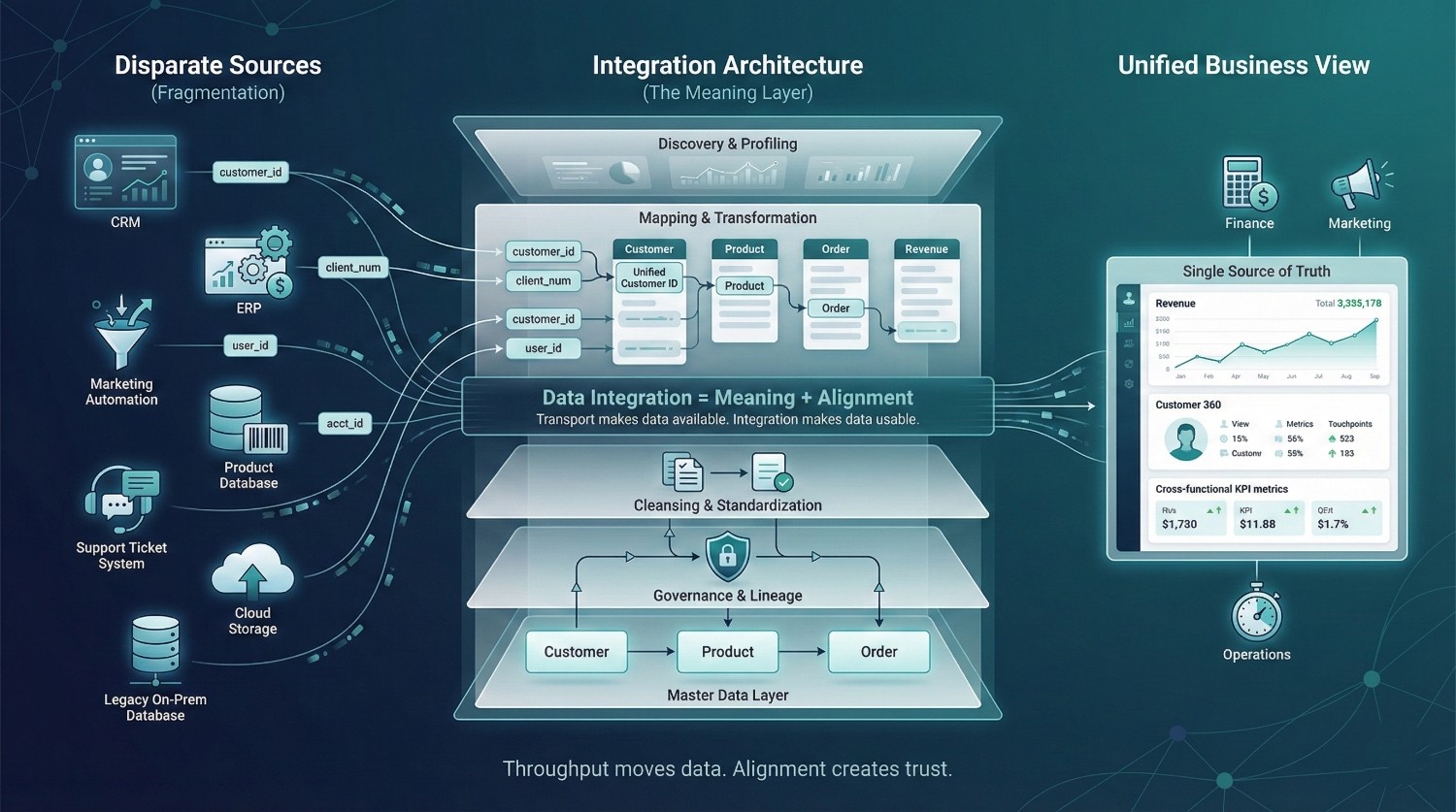

If data movement is about transport, data integration is about meaning. Data integration is the process of combining data from multiple disparate sources into a unified, coherent, and governed view that the business can reliably use.

It’s not enough to get the data there. It needs to be:

- Consistent, with aligned formats, definitions, and standards across sources

- Contextual, enriched with business logic, relationships, and governed metadata

- Usable, accessible to the right people, in the right shape, at the right time

The Warehouse Analogy

Data movement = shipping sealed packages to a warehouse. Data integration = unpacking those packages, organizing the contents, labeling everything correctly, and placing items on the right shelves so anyone can find what they need.

This distinction separates a functional data ecosystem from an expensive mess, and it’s the first thing any serious data integration consulting engagement addresses. Integration is where technical architecture meets organizational agreement.

Core Components of Data Integration

Data integration isn’t a single step. It’s a layered process, and skipping any layer creates downstream problems.

Data Discovery and Profiling

- Understanding what data exists, where it lives, and what condition it’s in

- Assessing completeness, accuracy, format consistency, and volume

- The foundation of any data integration consulting roadmap

Data Mapping

- Defining how fields, schemas, and structures from different sources relate to each other

- Example: “Customer ID” in Salesforce ↔ “Client_Num” in your ERP ↔ “user_id” in your product database

- Without proper mapping, you’re just stacking incompatible data in the same place

Field alignment is technical. Definition alignment is political. Integration requires both.
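The technical half of that sentence can be sketched in a few lines. Below, a mapping table renames each source’s identifier to one canonical field. The source field names come from the example above; the canonical name customer_id, the source keys, and the sample records are assumptions for illustration.

```python
# Per-source field mapping into one canonical name (customer_id is an assumed name).
FIELD_MAP = {
    "salesforce": {"Customer ID": "customer_id"},
    "erp": {"Client_Num": "customer_id"},
    "product_db": {"user_id": "customer_id"},
}


def to_canonical(source: str, record: dict) -> dict:
    """Rename mapped fields; unmapped fields pass through unchanged."""
    mapping = FIELD_MAP[source]
    return {mapping.get(field, field): value for field, value in record.items()}


canonical_rows = [
    to_canonical("salesforce", {"Customer ID": "C-1001", "plan": "pro"}),
    to_canonical("erp", {"Client_Num": "C-1001", "balance": 120.0}),
    to_canonical("product_db", {"user_id": "C-1001", "last_login": "2024-05-01"}),
]
print(canonical_rows)
```

Renaming is the easy part. Whether those three IDs really identify the same customer is the definition question, and no mapping table settles that on its own.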

Data Transformation

- Converting, normalizing, enriching, and restructuring data to fit a unified model

- Includes format standardization, unit conversions, calculated fields, and business rule application

- This is where raw data becomes analytically useful

Data Cleansing

- Identifying and correcting errors, duplicates, inconsistencies, and missing values

- Dirty data in = garbage insights out, no matter how good your dashboards are

- Often the most time-consuming and underestimated phase of integration

Data Governance and Lineage

- Tracking where data came from, how it was transformed, and who has access

- Enables auditability, regulatory compliance, and trust in the data

- Without governance, integration creates a bigger mess, not a cleaner one. Without lineage, integration scales confusion faster than clarity.

Master Data Management (MDM)

- Creating a single source of truth for key business entities: customers, products, locations, vendors

- Ensures that when five systems define “customer” differently, the organization has one authoritative definition

- A cornerstone of mature data integration strategy

MDM is not a tool category. It is an organizational commitment to shared definitions.
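Once the organization agrees on the rules, the mechanics of building an authoritative record are modest. The sketch below merges duplicate records with a “most recent non-empty value wins” survivorship rule. That rule, the function name golden_record, and the sample data are assumptions; real MDM programs typically negotiate survivorship field by field.

```python
def golden_record(records: list[dict]) -> dict:
    """Merge duplicates: per field, keep the newest non-empty value."""
    merged = {}
    # ISO 8601 date strings sort chronologically, so later records overwrite earlier ones.
    for record in sorted(records, key=lambda r: r["updated_at"]):
        for field, value in record.items():
            if field != "updated_at" and value not in (None, ""):
                merged[field] = value
    return merged


duplicates = [
    {"name": "A. Lovelace", "email": "", "phone": "+14155550132", "updated_at": "2023-01-10"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "phone": "", "updated_at": "2024-06-02"},
]

customer = golden_record(duplicates)
print(customer)
```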

Data Cataloging and Metadata Management

- Making integrated data discoverable and understandable across teams

- Business glossaries, tagging, search, lineage visualization

- The difference between “we have the data” and “people can actually find and use the data”

Common Methods and Approaches

Just like data movement, data integration has multiple approaches, each suited to different architectures and business needs.

Consolidation (Warehousing)

- Physically merging data from multiple sources into a central repository, typically a data warehouse

- All data lives in one place, in one model

- Best for: organizations that need a single, governed source of truth for BI and reporting

Data Virtualization

- Creates a unified view across sources without physically moving or copying data

- Queries run against a virtual layer that abstracts the underlying systems

- Best for: real-time access to distributed data without the overhead of replication

Federation

- Querying multiple sources in real time through a single access layer

- Similar to virtualization but typically less abstracted

- Best for: environments where data can’t be moved due to compliance or sovereignty constraints
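To give a feel for federation, the sketch below uses SQLite’s ATTACH DATABASE so that one connection can join tables living in two separate databases. This is only a small-scale analogy: the two in-memory databases stand in for a CRM and an ERP, and the table names and rows are invented for the example.

```python
import sqlite3

# One access layer (the connection) over two separate databases.
conn = sqlite3.connect(":memory:")                 # stands in for the CRM
conn.execute("ATTACH DATABASE ':memory:' AS erp")  # stands in for the ERP

conn.execute("CREATE TABLE main.crm_customers (email TEXT, name TEXT)")
conn.execute("CREATE TABLE erp.invoices (email TEXT, amount REAL)")

conn.executemany(
    "INSERT INTO main.crm_customers VALUES (?, ?)",
    [("ada@example.com", "Ada"), ("bob@example.com", "Bob")],
)
conn.executemany(
    "INSERT INTO erp.invoices VALUES (?, ?)",
    [("ada@example.com", 100.0), ("ada@example.com", 50.0), ("bob@example.com", 20.0)],
)

# A single query spans both sources; nothing was copied between them.
billed_per_customer = conn.execute(
    """
    SELECT c.name, SUM(i.amount)
    FROM main.crm_customers AS c
    JOIN erp.invoices AS i ON i.email = c.email
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(billed_per_customer)
```

The join only works because both sides happen to share a clean email key. In real federated setups, agreeing on that key is usually the harder problem.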

Middleware and ESB (Enterprise Service Bus)

- Connects applications and enables data flow between them using a central messaging backbone

- Best for: large enterprises with complex, legacy application landscapes

API-Based Integration

- Using REST, GraphQL, or other APIs to pull and push data between systems on demand

- Lightweight and flexible, but can get messy at scale without proper orchestration

- Best for: SaaS-heavy environments and microservices architectures

iPaaS (Integration Platform as a Service)

- Cloud-based platforms for building and managing integration flows

- Examples: MuleSoft, Boomi, Workato, Celigo

- Best for: organizations that want pre-built connectors, low-code workflows, and managed infrastructure

Choosing the right approach, or the right combination of approaches, is one of the highest-value outcomes of data integration consulting. There’s no universal answer; it depends entirely on your systems, your data, and your business goals.

Primary Goals of Data Integration

Where data movement is measured by reliability and speed, data integration is measured by business impact:

| Goal | What It Means |
|---|---|
| Single Source of Truth | One consistent, authoritative version of key data entities across the organization |
| Cross-Functional Analytics | Finance, marketing, ops, and product all working from the same data, not their own siloed copies |
| Breaking Down Silos | Departments stop hoarding data and start sharing unified, governed datasets |
| Data Consistency and Accuracy | Reduced likelihood of conflicting numbers in different dashboards or reports |
| Powering BI, AI/ML, and Operations | Clean, integrated data is the fuel for business intelligence, machine learning models, and real-time operational decisions |

The difference between integrated data and aggregated data is accountability. None of these goals are achieved through data movement alone. They require the full stack of integration capabilities, which is why data integration consulting focuses on outcomes, not just pipelines.

Real-World Use Cases

This is where data integration delivers measurable business value:

- 360° Customer View: Merging CRM data, support tickets, marketing engagement, transaction history, and product usage into a single customer profile, enabling personalization, retention strategies, and accurate LTV calculations

- Post-Acquisition Data Consolidation: Two companies merge. Now you need to unify their ERPs, HR systems, finance platforms, and customer databases, with different schemas, different standards, and different definitions of the same entities. This is textbook data integration consulting territory.

- Regulatory Compliance: Aggregating and reconciling data across systems for audits and for regulations such as GDPR, HIPAA, SOX, and CCPA. Regulators expect one coherent, reconcilable answer, regardless of how fragmented the underlying systems are.

- Omnichannel Retail: Integrating in-store POS, e-commerce platforms, inventory management, and logistics data so that a customer’s experience is consistent whether they buy online, in-store, or through a mobile app

- Healthcare (Unified Patient Profiles): Combining electronic health records (EHR) from multiple providers, labs, pharmacies, and insurance systems into a single patient view, critical for care coordination and clinical decision-making

Tools and Technologies

The data integration tooling landscape is broad. Here’s how the major platforms break down:

| Tool / Platform | Category | Best For |
|---|---|---|
| Informatica | Enterprise integration suite | Large-scale, governance-heavy environments with complex transformation needs |
| Talend | Open-source / enterprise hybrid | Teams that need both movement and integration in one platform |
| MuleSoft | API-led integration / iPaaS | API-first architectures, Salesforce-heavy ecosystems |
| Boomi | iPaaS | Mid-market companies needing fast, low-code integration workflows |
| Microsoft SSIS | ETL / integration (on-prem) | Microsoft-stack organizations with SQL Server environments |
| Denodo | Data virtualization | Real-time unified views without physically consolidating data |
| Apache Spark | Large-scale transformation | Big data processing, ML pipelines, and complex data engineering workflows |

Choosing the Right Stack

Mergers rarely fail because of technology gaps. They fail because definitions never converge. No single tool covers every integration need. Most mature organizations use a combination of platforms, and knowing which tool fits which layer of your architecture is one of the most important decisions you’ll make.

This is where data integration consulting pays for itself. The right consultant doesn’t just recommend tools; they design the architecture that makes those tools work together as a system, not a collection of disconnected products.

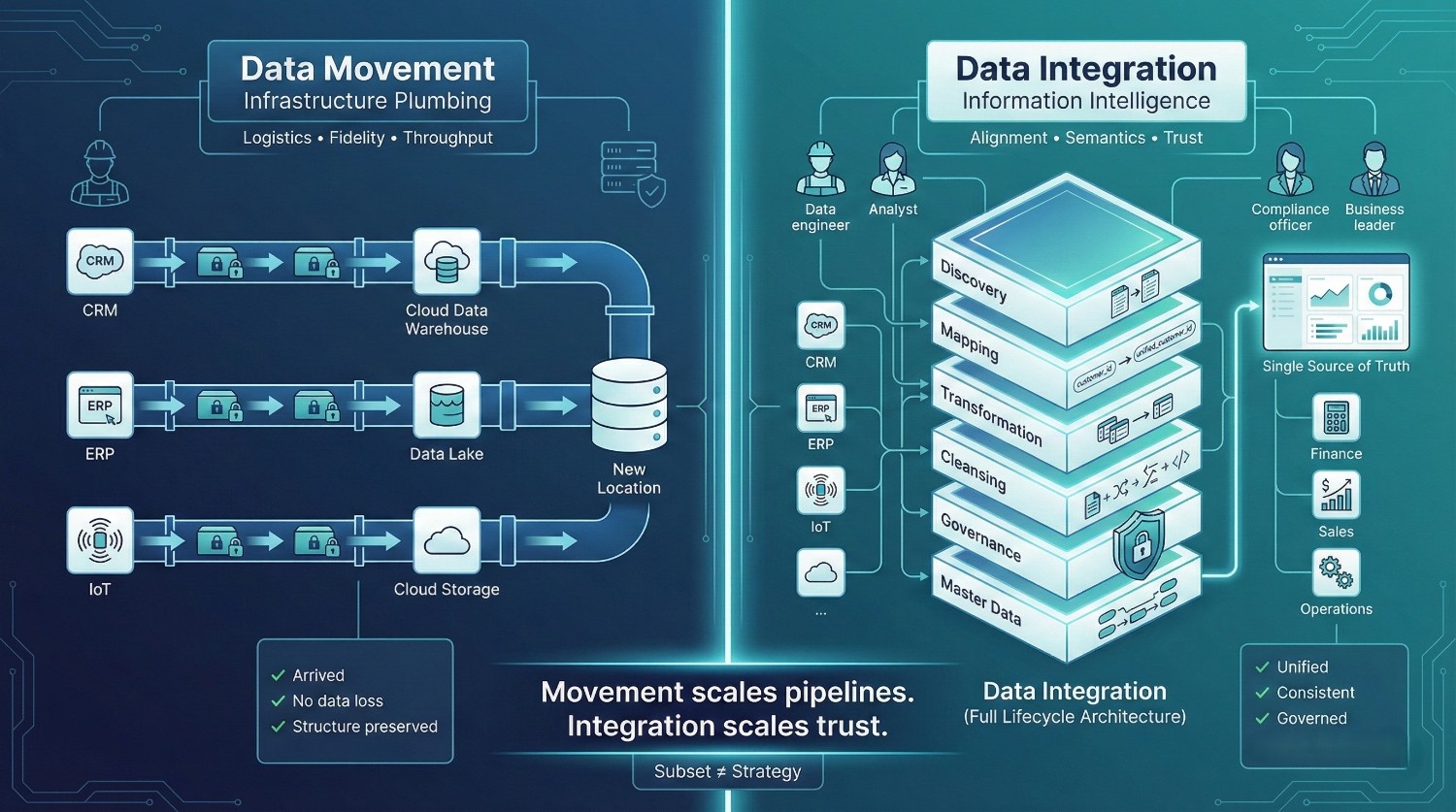

Key Differences

Now that we’ve defined both concepts individually, let’s put them side by side. This is where the distinction becomes impossible to ignore, and where most organizations realize they’ve been solving the wrong problem. Most failed transformation programs trace back to this exact misunderstanding.

Scope

Data movement is a subset of data integration, not a synonym for it.

- You can move data without integrating it

- You cannot integrate data without some form of movement

- Integration encompasses the full data lifecycle: discovery, profiling, mapping, transformation, cleansing, governance, and delivery

- Movement is just one stage within that lifecycle. Confusing the stage for the strategy is what creates architectural debt.

Think of it this way:

Every data integration initiative involves data movement. But not every data movement initiative involves integration. This is a nuance that gets lost constantly, in vendor evaluations, internal planning, and even architecture reviews. It’s one of the first misconceptions that data integration consulting engagements are designed to correct.

Purpose and Intent

The core difference comes down to what you’re trying to achieve:

| Comparison Criteria | Data Movement | Data Integration |
|---|---|---|
| Question it answers | "How do we get data from here to there?" | "How do we make data from everywhere work together?" |
| Focus | Infrastructure and logistics | Business outcomes and usability |
| Mindset | Engineering-driven | Strategy-driven |
| Success metric | Data arrived at the destination | Data is unified, consistent, and actionable |

- Movement is about infrastructure plumbing

- Integration is about information intelligence

When organizations hire for data integration consulting, they’re not looking for someone to set up a Kafka cluster. They’re looking for someone to answer: “How do we turn fragmented data into a business asset?”

Transformation and Enrichment

This is where the gap between movement and integration becomes most tangible.

Data Movement and Transformation

- May involve minimal or zero transformation, especially in ELT patterns where raw data lands first

- When transformation does occur, it’s typically structural: format conversion, compression, serialization

- No business logic applied during transit

Data Integration and Transformation

- Transformation is inherent and unavoidable

- Includes:

  - Schema mapping across incompatible source systems

  - Field-level normalization (e.g., “USA” vs. “US” vs. “United States”)

  - Data enrichment with external or derived attributes

  - Business rule application (e.g., revenue recognition logic, customer segmentation rules)

  - Deduplication and entity resolution

- Without transformation, you don’t have integration; you have co-located chaos

Transformation is where business definitions become enforceable, not optional.
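Field-level normalization is the easiest of these to show. The sketch below collapses the country variants mentioned above into one code. The alias table is a hand-written assumption covering only this example; a real pipeline would lean on a maintained reference dataset.

```python
# Hand-written alias table for illustration only; not a complete reference list.
COUNTRY_ALIASES = {
    "usa": "US",
    "us": "US",
    "u.s.": "US",
    "united states": "US",
}


def normalize_country(raw: str) -> str:
    """Map known variants to one code; leave unknown values untouched."""
    cleaned = raw.strip()
    return COUNTRY_ALIASES.get(cleaned.lower(), cleaned)


variants = ["USA", "US", "United States", " usa "]
normalized = [normalize_country(value) for value in variants]
print(normalized)
```

Leaving unknown values untouched, rather than guessing, keeps the rule auditable: anything the table does not cover stays visible for someone to review.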

The Bottom Line

Movement asks: “Did the data get there?”

Integration asks: “Does the data make sense now that it’s here?”

Any experienced data integration consulting team will tell you that the transformation layer is where 70%+ of the real work happens. It is also where most underbudgeted projects stall.

Complexity

Not all data challenges are created equal. Movement and integration sit on very different ends of the complexity spectrum.

Data Movement Complexity

- Relatively straightforward in many cases, especially one-to-one replication or batch transfers

- Complexity increases with volume, velocity, and the number of source/target systems

- Primarily a technical challenge

Data Integration Complexity

- Introduces an entirely different class of problems:

  - Schema conflicts: different systems model the same entity in incompatible ways

  - Semantic differences: “revenue” means one thing in finance and another in sales

  - Data quality gaps: missing values, outdated records, conflicting duplicates

  - Governance requirements: access controls, lineage tracking, compliance mandates

- Requires cross-team collaboration: data engineers, analysts, domain experts, compliance teams, and business stakeholders all need to align

- It’s as much an organizational challenge as a technical one. Alignment failures cost more than tooling mistakes.

This multi-layered complexity is precisely why data integration consulting exists as a discipline. The tooling is only 30% of the problem. The other 70% is people, processes, and business logic.

Data Quality

Here’s a distinction that catches a lot of teams off guard:

Movement → Fidelity

- Did the data arrive intact?

- Are all records accounted for?

- Is the structure preserved?

- Zero data loss during transit equals success

Integration → Quality

- Is the data accurate?

- Is it consistent across sources?

- Is it complete and usable for downstream consumers?

- Does it meet governance and compliance standards?

Where This Breaks Down

A dataset can be moved perfectly (every row, every column, zero loss) and still be completely useless for integration:

- Duplicate customer records from two CRMs? Moved successfully. Integration failed.

- Inconsistent date formats across three source systems? Moved successfully. Integration failed.

- Revenue figures that don’t reconcile because each system calculates them differently? Moved successfully. Integration failed.
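The date-format case can be reproduced in a few lines. In the sketch below, the landed copy is byte-for-byte identical to the source, so the movement check passes, while a simple consistency check fails. The two regex patterns and the sample values are assumptions for illustration.

```python
import re

# Only two formats are recognized here; anything else is reported as "unknown".
DATE_PATTERNS = {
    "iso": r"\d{4}-\d{2}-\d{2}",
    "us": r"\d{2}/\d{2}/\d{4}",
}


def date_formats_seen(values):
    """Return the set of date format names found in a column."""
    seen = set()
    for value in values:
        for name, pattern in DATE_PATTERNS.items():
            if re.fullmatch(pattern, value):
                seen.add(name)
                break
        else:
            seen.add("unknown")
    return seen


source_dates = ["2024-03-01", "03/02/2024", "2024-03-03"]
landed_dates = list(source_dates)  # a perfect copy: movement succeeded

moved_intact = landed_dates == source_dates
consistent = len(date_formats_seen(landed_dates)) == 1
print(moved_intact, consistent)
```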

This is one of the most expensive blind spots in enterprise data, and a core reason organizations invest in data integration consulting before committing to large-scale migration or consolidation projects.

Outcome

Ultimately, what do you have at the end of each process?

| Project Outcomes | Data Movement | Data Integration |
|---|---|---|
| End state | Data exists in a new location | Data is unified, contextualized, and ready for consumption |
| What the business gets | A copy of the data somewhere else | A trusted, usable asset that drives decisions |
| What's still missing | Context, consistency, business logic | Nothing; if done correctly, this represents the complete picture |

Movement gives you data in a new place. Integration gives you data that actually works.

Comparison Table: The Full Picture

Here’s everything in one view:

| Dimension | Data Movement | Data Integration |
|---|---|---|
| Scope | Subset of integration; one stage in the lifecycle | Full lifecycle; includes movement as one component |
| Purpose | Transport data from Point A to Point B | Unify data into a meaningful, usable asset |
| Transformation | Minimal or none, structural at most | Extensive: mapping, cleansing, enrichment, business rules |
| Complexity | Primarily technical: volume, latency, connectivity | Technical + organizational: schemas, semantics, governance, collaboration |
| Quality Focus | Fidelity: did it arrive intact? | Quality: is it accurate, consistent, and usable? |
| Outcome | Data exists in a new location | Data is unified, contextualized, and decision-ready |
| Typical Tools | Kafka, Fivetran, AWS DMS, Airbyte, NiFi | Informatica, MuleSoft, Boomi, Denodo, Talend, Spark |
| Who's Involved | Data engineers, DevOps | Data engineers, analysts, domain experts, governance teams, business stakeholders |

The takeaway: If your organization is investing in data movement and calling it data integration, you’re solving half the problem and paying for the whole thing. Movement scales pipelines. Integration scales trust.

This is the exact gap that data integration consulting is built to close by aligning your architecture, your tools, and your strategy to deliver real business outcomes, not just successful file transfers.

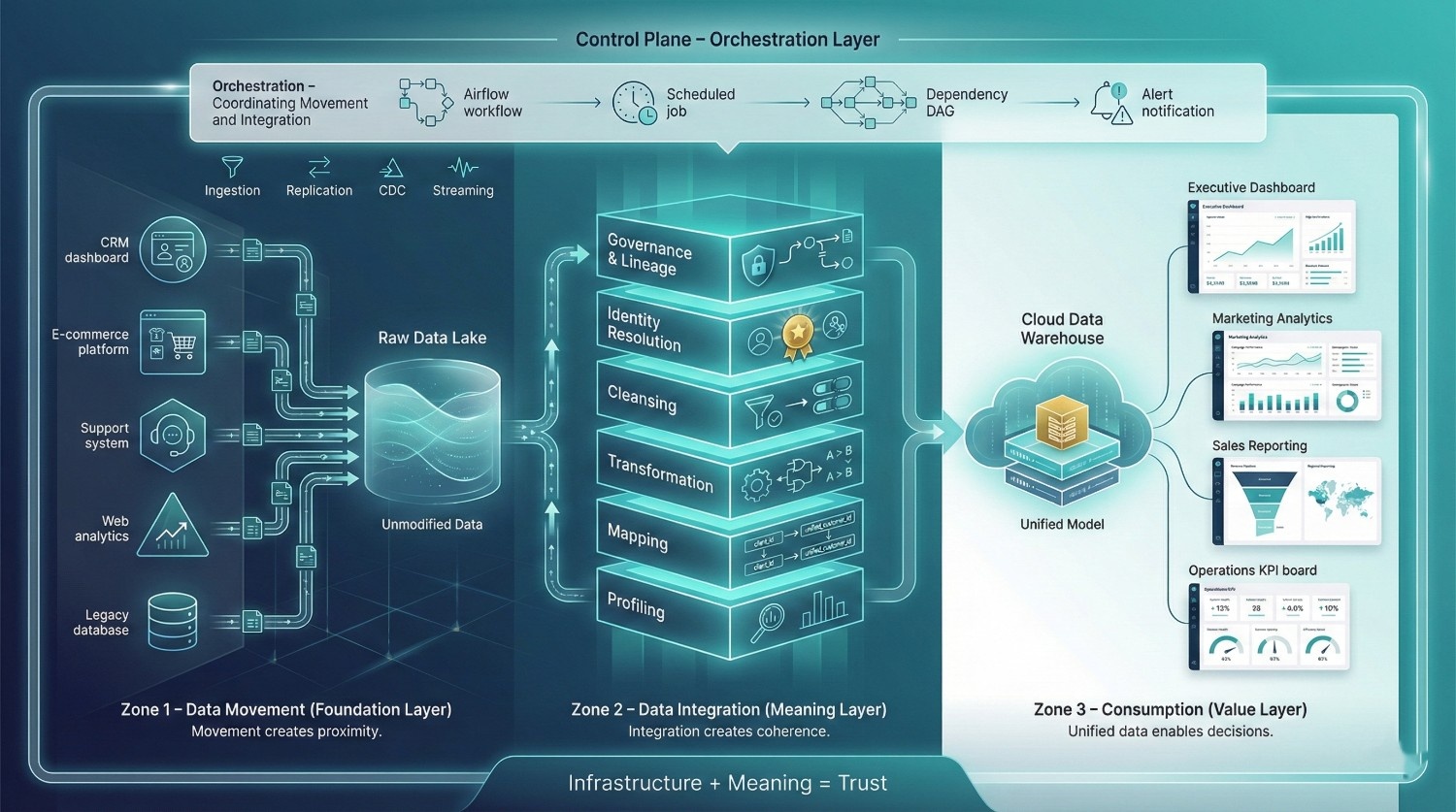

How Data Movement and Data Integration Work Together

Understanding the difference between data movement and data integration is important. But in practice, they don’t operate in isolation. They’re two halves of the same data lifecycle, and the most effective data strategies treat them as complementary, not competing. Treating them as separate initiatives is how architectures become brittle.

Movement as a Building Block of Integration

Every data integration workflow involves some form of data movement. You can’t transform, cleanse, or unify data that hasn’t been extracted and delivered somewhere first. But movement alone doesn’t get you to integration. It’s the necessary first step, not the destination.

The Pipeline Analogy:

- Data movement = the plumbing. Pipes that carry water from the source to the facility.

- Data integration = the water treatment and distribution system. Filtering, purifying, testing, and routing clean water to every tap in the building.

Infrastructure enables flow. Integration enables trust. Without plumbing, nothing flows. But without treatment, what flows is unusable. This is exactly how experienced data integration consulting teams frame the relationship:

- Movement is infrastructure, essential but insufficient

- Integration is the value layer, where raw data becomes a business asset

- Skip either one and the whole system breaks down

A Walkthrough Example

Let’s make this concrete with a real-world scenario.

The Scenario

A mid-size retail company wants to build a unified customer analytics dashboard. They need a single view of every customer, combining purchase history, support interactions, marketing engagement, and website behavior.

Their data lives in four systems: Salesforce, Shopify, Zendesk, and Google Analytics. None of these systems talk to each other natively. Each has its own schema, its own field names, and its own definition of “customer.” Here’s how movement and integration work together to solve this:

Phase 1: Data Movement

Step 1, Extract

- Pull customer data from all four source systems

- Use API connectors or CDC to capture current and historical records

- Tools: Fivetran, Airbyte, or custom API scripts

Step 2, Load into a Data Lake

- Land raw, unmodified data into a cloud data lake (S3, GCS, or ADLS)

- No transformation at this stage, just reliable transport and storage

- This is pure data movement

At this point, all the data is in one place. But it’s still four incompatible datasets sitting next to each other. Centralization without harmonization is just consolidated fragmentation.

Phase 2: Data Integration

Step 3, Profile and Discover

- Analyze each source’s schema, data types, completeness, and quality

- Identify conflicts: different field names, inconsistent formats, missing values

- Example findings:

  - Salesforce uses client_name, while Shopify uses customer_name

  - Dates are MM/DD/YYYY in one system and YYYY-MM-DD in another

  - Zendesk has email as the primary key; Salesforce uses an internal ID
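A first profiling pass can be as small as a completeness report per field. The sketch below computes the share of records with a non-empty value for each field. The function name profile_completeness and the sample records are assumptions for illustration; dedicated profiling tools go much further.

```python
def profile_completeness(records: list[dict]) -> dict[str, float]:
    """Return, per field, the fraction of records with a non-empty value."""
    fields = sorted({field for record in records for field in record})
    total = len(records)
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in fields
    }


# Sample rows shaped like a raw export (invented data).
shopify_sample = [
    {"customer_name": "Ada", "email": "ada@example.com", "signup_date": "2024-03-01"},
    {"customer_name": "Bob", "email": "", "signup_date": "03/02/2024"},
    {"customer_name": "Cy", "email": "cy@example.com"},
    {"customer_name": "Di", "email": "di@example.com", "signup_date": None},
]

report = profile_completeness(shopify_sample)
print(report)
```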

Step 4, Map Fields Across Sources

- Define how fields from each system relate to each other

- Create a canonical data model, one standard structure that all sources map into

- This is where data integration consulting delivers significant value: getting field mapping right prevents months of rework downstream. Field mapping errors compound quietly and are often discovered only when executive dashboards conflict.

Step 5, Cleanse and Standardize

- Remove duplicate records within and across sources

- Standardize formats:

  - Phone numbers → E.164 format

  - Addresses → USPS or Google-validated format

  - Dates → ISO 8601

- Fill or flag missing values based on business rules
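Two of these standardizations can be sketched with the standard library alone. The example below converts the two date formats from Step 3 to ISO 8601 and applies a deliberately simplified E.164 rule. The default country code of 1 and the digit-length checks are assumptions made for this sketch; production pipelines normally use a dedicated phone-number library. Values that cannot be standardized come back as None so they can be flagged instead of silently guessed.

```python
import re
from datetime import datetime


def to_iso_date(raw: str):
    """Convert YYYY-MM-DD or MM/DD/YYYY to ISO 8601; return None if unrecognized."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def to_e164(raw: str, default_country_code: str = "1"):
    """Simplified E.164 formatting; assumes a default country code when none is given."""
    digits = re.sub(r"\D", "", raw)
    if raw.strip().startswith("+"):
        # E.164 allows at most 15 digits; the lower bound of 8 is a heuristic.
        return "+" + digits if 8 <= len(digits) <= 15 else None
    if len(digits) == 10:
        return "+" + default_country_code + digits
    if len(digits) == 11 and digits.startswith(default_country_code):
        return "+" + digits
    return None  # flag for review rather than guess


print(to_iso_date("03/15/2024"), to_e164("(415) 555-0132"))
```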

Step 6, Resolve Entity Identity

- The hardest and most critical step

- Answer the question: Which records across four systems refer to the same real-world person?

- Techniques include:

  - Deterministic matching (exact email or phone match)

  - Probabilistic matching (fuzzy name + address + behavioral signals)

  - Golden record creation: one authoritative profile per customer

Identity resolution is where integration shifts from technical exercise to business risk management.
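Here is a minimal sketch of the first two techniques. It tries a deterministic email match first, then falls back to a fuzzy name comparison combined with a matching postal code. The 0.85 threshold, the zip field, and the use of difflib’s SequenceMatcher as the similarity measure are all assumptions for illustration; real probabilistic matching weighs many more signals.

```python
from difflib import SequenceMatcher


def same_person(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Decide whether two records likely refer to the same customer."""
    # Deterministic: exact match on normalized email.
    email_a = (a.get("email") or "").strip().lower()
    email_b = (b.get("email") or "").strip().lower()
    if email_a and email_a == email_b:
        return True

    # Probabilistic stand-in: similar name AND identical postal code.
    name_score = SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()
    same_zip = a.get("zip") is not None and a.get("zip") == b.get("zip")
    return name_score >= threshold and same_zip


salesforce = {"name": "Jon Smith", "email": None, "zip": "94105"}
shopify = {"name": "John Smith", "email": "", "zip": "94105"}
zendesk = {"name": "Mary Jones", "email": "mary@example.com", "zip": "94105"}

print(same_person(salesforce, shopify), same_person(salesforce, zendesk))
```

Where the threshold sits is a business decision as much as a technical one: set it too low and two customers get merged, set it too high and one customer stays split across systems.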

Step 7, Load Unified Data into the Warehouse

- Push the cleansed, transformed, and resolved dataset into a data warehouse (Snowflake, BigQuery, Redshift)

- This data is now structured, governed, and query-ready

Phase 3: Consumption

Step 8, Dashboard and Analytics

- BI tools (Looker, Tableau, Power BI) query the warehouse

- The result: a true 360° customer view, one profile per customer, with every touchpoint unified

- Marketing, sales, support, and product all see the same data

| Phase | What Happened | Type |
|---|---|---|
| Steps 1–2 | Data extracted and landed in a data lake | Data Movement |
| Steps 3–7 | Data profiled, mapped, cleansed, resolved, and loaded into a warehouse | Data Integration |
| Step 8 | Business consumes unified data | Value Realization |

Movement creates proximity. Integration creates coherence. Without movement, there’s nothing to integrate. Without integration, you just have four raw dumps in a lake that nobody trusts. This end-to-end lifecycle is exactly what data integration consulting engagements are designed to plan, architect, and execute.

The Modern Data Stack Perspective

The modern data stack has evolved to explicitly separate movement, transformation, and integration into distinct layers, each with its own tooling and responsibilities.

| Layer | Function | Tools |
|---|---|---|
| Ingestion / Movement | Extract and load raw data from sources into a central store | Fivetran, Airbyte, Stitch, Meltano |
| Transformation | Clean, model, and restructure data inside the warehouse | dbt, SQLMesh, Coalesce |
| Integration / Semantic | Define business logic, relationships, metrics, and meaning on top of transformed data | Semantic layers (dbt Metrics, Looker LookML, AtScale), data catalogs (Atlan, Alation, DataHub) |
| Orchestration | Coordinate the sequence and dependencies across all layers | Airflow, Dagster, Prefect |

The Shift-Left Trend

There’s a growing movement to push transformation and integration logic closer to consumption, rather than burying it deep in ETL pipelines:

- dbt popularized the idea of transforming data inside the warehouse using version-controlled SQL

- Semantic layers define business metrics once and enforce them across every BI tool

- Data contracts formalize expectations between data producers and consumers before data is moved

This shift means integration is no longer an afterthought; it’s becoming a first-class citizen in modern data architecture. Organizations that separate ingestion from meaning tend to accumulate technical debt in their semantic layer.
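A data contract can be as simple as a declared set of fields, types, and nullability that a producer's records are checked against before anything is moved. The sketch below is one hypothetical way to express that; `ORDERS_CONTRACT` and its fields are invented for the example, and real contract tooling typically covers much more (semantics, SLAs, ownership).

```python
# Hypothetical contract: field name -> (expected type, nullable).
ORDERS_CONTRACT = {
    "order_id": (str, False),
    "customer_email": (str, False),
    "amount_cents": (int, False),
    "coupon_code": (str, True),
}

def contract_violations(record, contract):
    """Return a list of human-readable violations; an empty list means the record conforms."""
    problems = []
    for field, (expected_type, nullable) in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
            continue
        value = record[field]
        if value is None:
            if not nullable:
                problems.append(f"null not allowed: {field}")
        elif not isinstance(value, expected_type):
            problems.append(f"wrong type for {field}: {type(value).__name__}")
    # Fields the producer added without updating the contract.
    for field in sorted(record.keys() - contract.keys()):
        problems.append(f"unexpected field: {field}")
    return problems

good = {"order_id": "A1", "customer_email": "a@example.com", "amount_cents": 1999, "coupon_code": None}
bad = {"order_id": "A2", "customer_email": None, "amount_cents": "19.99"}
```

Running the check on the producer side is the point of the pattern: a violation blocks the transfer instead of being discovered by a consumer weeks later.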

The Role of Orchestration

None of this works without orchestration. Tools like Airflow, Dagster, and Prefect act as the control plane:

- Scheduling extraction jobs (movement)

- Triggering transformation runs (integration)

- Managing dependencies: ensuring Step 3 doesn’t start until Step 2 succeeds

- Alerting on failures so issues get caught before they cascade
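The dependency rule is the heart of orchestration, and it can be illustrated without any orchestrator at all. The sketch below uses Python's standard `graphlib` (Python 3.9+) rather than Airflow, Dagster, or Prefect; the task names and the `runner` callback are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_salesforce": set(),
    "extract_shopify": set(),
    "land_in_lake": {"extract_salesforce", "extract_shopify"},
    "profile_sources": {"land_in_lake"},
    "transform_and_resolve": {"profile_sources"},
    "load_warehouse": {"transform_and_resolve"},
}

def run_pipeline(tasks, runner):
    """Run tasks in dependency order; stop at the first failure and report it."""
    completed = []
    for task in TopologicalSorter(tasks).static_order():
        if not runner(task):
            return completed, task  # downstream tasks never start
        completed.append(task)
    return completed, None

# Simulate a failure in the profiling step.
done, failed = run_pipeline(PIPELINE, lambda task: task != "profile_sources")
```

In this run `failed` is `"profile_sources"` and nothing downstream of it executes. Real orchestrators add scheduling, retries, parallelism, and alerting on top of this same ordering idea.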

Where Data Integration Consulting Fits In

The modern data stack gives you the building blocks. But assembling them into a coherent architecture that actually serves your business? That’s where data integration consulting comes in:

- Which ingestion tool fits your source landscape?

- How should your dbt models be structured?

- Do you need a semantic layer, or is your warehouse modeling sufficient?

- Where do governance and lineage plug in?

- How do you orchestrate all of it without creating a maintenance nightmare?

These aren’t tool questions. They’re architecture questions. And getting them right at the start saves exponentially more than fixing them later.

When to Focus on Data Movement vs. Data Integration

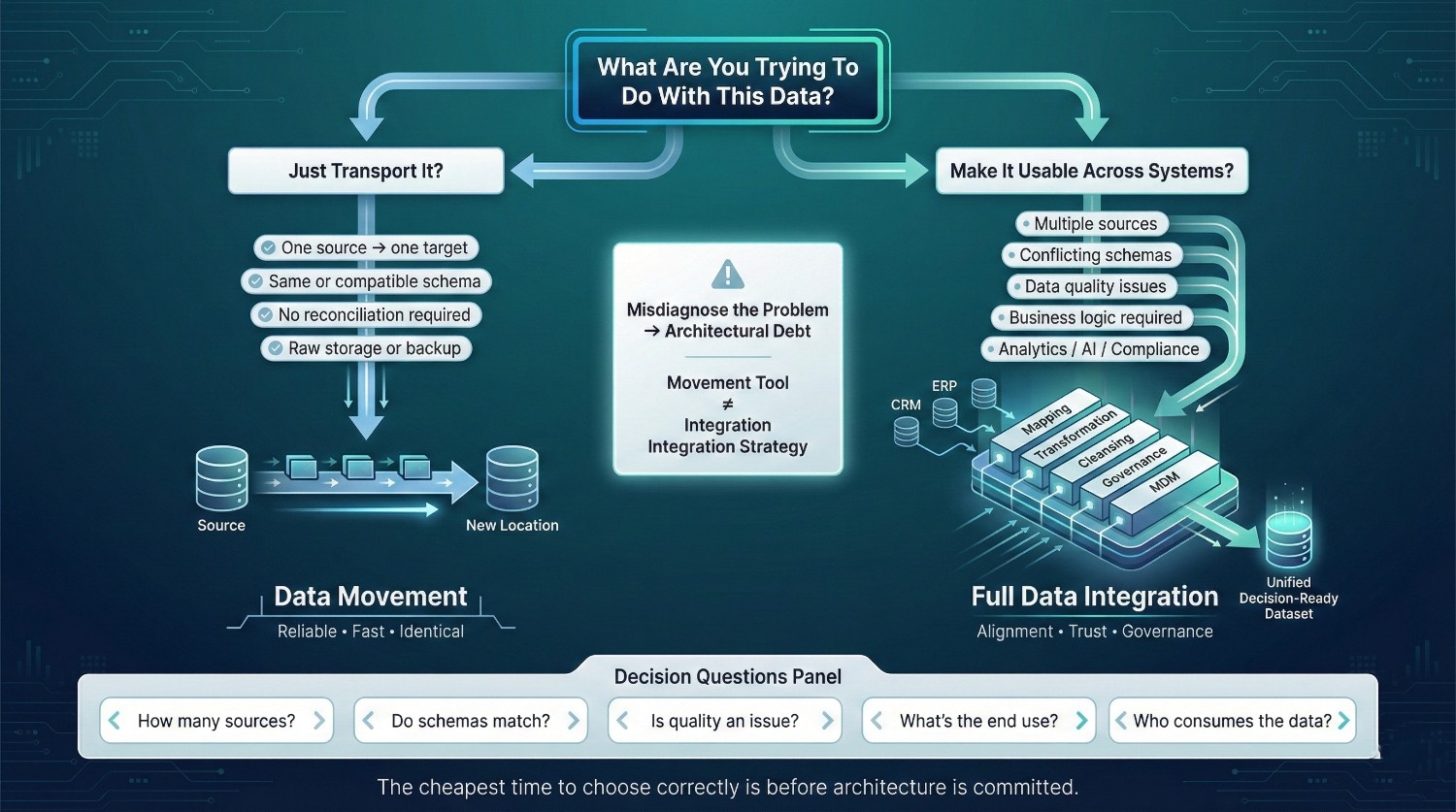

Knowing the difference between data movement and data integration is step one. Knowing when each is needed is where it becomes practically useful.

Not every data challenge requires the full weight of integration. Sometimes movement is all you need. But misidentifying which situation you’re in is one of the most common, and costly, mistakes in enterprise data strategy.

Scenarios Where Data Movement Alone Is Sufficient

There are legitimate cases where data just needs to get from Point A to Point B with no transformation, reconciliation, or business logic required.

One-Time Cloud Migration with Schema Compatibility

- You’re moving a PostgreSQL database from on-prem to AWS RDS

- The schema stays the same. The data model stays the same. Nothing needs to change.

- Tools like AWS DMS or pg_dump/pg_restore handle this cleanly

- Movement is the entire job

Database Backup and Disaster Recovery

- Replicating production data to a standby instance in another region

- The goal is an identical copy, not a transformed or enriched version

- Fidelity and speed are the only metrics that matter

Feeding a Data Lake with Raw Data

- Streaming raw event data, logs, or clickstream into S3 or GCS for future exploration

- The data will be transformed later. At this stage, you just need to capture and store it

- Classic ELT pattern: load first, figure out the meaning later

Syncing Identical Schemas Between Environments

- Keeping dev, staging, and production databases in sync

- Same schema, same structure, same platform

- No cross-system reconciliation needed

The Common Thread

In all of these cases:

- There’s typically one source and one target

- Schemas are compatible or identical

- No business logic or cross-system mapping is required

- The data doesn’t need to be “understood”, just transported reliably

If your situation checks all these boxes, data movement alone is likely sufficient. But the moment any of them stops being true, you’re in integration territory.

Scenarios That Demand Full Data Integration

These are the situations where movement alone will not be sufficient, and organizations that skip integration pay the highest price.

Merging Datasets from Multiple Business Units or Acquisitions

- Two companies merge. Both have their own CRM, ERP, HR system, and finance platform.

- “Customer” means something different in each system. So does “revenue.” So does “employee.”

- You need entity resolution, schema mapping, business rule alignment, and governance

- This is a textbook data integration consulting engagement and one of the most complex scenarios

Building Cross-System Analytics and Reporting

- Your CFO wants a dashboard that combines financial data from NetSuite, pipeline data from Salesforce, and usage data from your product database

- These systems don’t share a common schema, common keys, or common definitions

- Without integration, you’ll get three versions of the truth, none of them trustworthy

Regulatory Reporting and Compliance

- GDPR, HIPAA, SOX, CCPA: regulators expect reconciled, auditable, consistent data

- “The data lives in five systems” is not an acceptable answer during an audit

- Integration isn’t optional here; in practice, it’s a precondition for meeting legal requirements

Customer Data Platforms (CDPs) and Personalization

- Personalizing customer experiences requires a unified profile, not fragments scattered across systems

- You need identity resolution, behavioral data stitching, and real-time profile updates

- Movement gets the data into the CDP. Integration makes it usable.

AI/ML Model Training

- Machine learning models are only as good as their training data

- Models need clean, unified, well-structured feature sets, not raw dumps from six different sources with conflicting formats

- Feature engineering is a form of data integration: mapping, transforming, and enriching raw inputs into model-ready datasets

The Common Thread

In all of these cases:

- Multiple sources with incompatible schemas and definitions

- Business logic, data quality, and governance are non-negotiable

- The end consumers (analysts, executives, models, regulators) need data that’s meaningful, not just present

- Skipping integration doesn’t save money. It creates technical debt that compounds over time.

This is where data integration consulting can deliver the highest ROI, helping organizations navigate multi-source complexity and build architectures that produce trustworthy, actionable data.

A Decision Framework

Not sure whether your situation calls for data movement, data integration, or both? Walk through these five questions:

The 5 Questions to Ask

| No. | Question | If The Answer Is: | You Likely Need |
|---|---|---|---|
| 1 | How many sources are involved? | One source, one target | Movement |
| | | Multiple sources feeding one destination | Integration |
| 2 | Do the schemas match? | Yes, identical or highly compatible | Movement |
| | | No, different structures, naming, formats | Integration |
| 3 | Is data quality a concern? | No, source data is clean and reliable | Movement may suffice |
| | | Yes, duplicates, inconsistencies, missing values | Integration |
| 4 | What's the end use? | Storage, backup, or staging for future processing | Movement |
| | | Analytics, reporting, ML, or operational decisions | Integration |
| 5 | Who are the data consumers? | Engineers or systems that handle raw data | Movement |
| | | Analysts, executives, models, or regulators who need trusted data | Integration |
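The five questions can also be written down as a small function, which makes the rule explicit: movement alone is recommended only when every answer points to movement. This is a sketch of the framework above, not a scoring model; the `Situation` fields and their string values are naming assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass
class Situation:
    source_count: int        # Q1: how many sources feed the destination
    schemas_match: bool      # Q2: identical or highly compatible schemas
    quality_concerns: bool   # Q3: duplicates, inconsistencies, missing values
    end_use: str             # Q4: "storage" or "decisions"
    consumers: str           # Q5: "engineers" or "business"

def recommend(s: Situation):
    """Return ("movement", []) only when every answer points to movement;
    otherwise ("integration", reasons)."""
    reasons = []
    if s.source_count > 1:
        reasons.append("multiple sources feed one destination")
    if not s.schemas_match:
        reasons.append("schemas differ")
    if s.quality_concerns:
        reasons.append("data quality is a concern")
    if s.end_use != "storage":
        reasons.append("end use is analytics, reporting, ML, or operations")
    if s.consumers != "engineers":
        reasons.append("consumers need trusted data")
    return ("integration", reasons) if reasons else ("movement", [])

replica_sync = Situation(1, True, False, "storage", "engineers")
cfo_dashboard = Situation(3, False, True, "decisions", "business")
```

Listing the reasons, rather than returning a bare label, gives the team something to discuss: a single reason may justify a light integration step, while five usually signal a full engagement.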

The Decision Tree

Why This Framework Matters

Most organizations don’t fail because they chose the wrong tool. They fail because they misdiagnosed the problem.

- They bought a movement tool when they needed an integration platform

- They built pipelines when they needed a data model

- They hired engineers when they also needed domain experts and governance leads

A structured decision framework, ideally guided by data integration consulting, prevents these misalignments before they become expensive mistakes. The cheapest time to get this right is before the first pipeline is built.

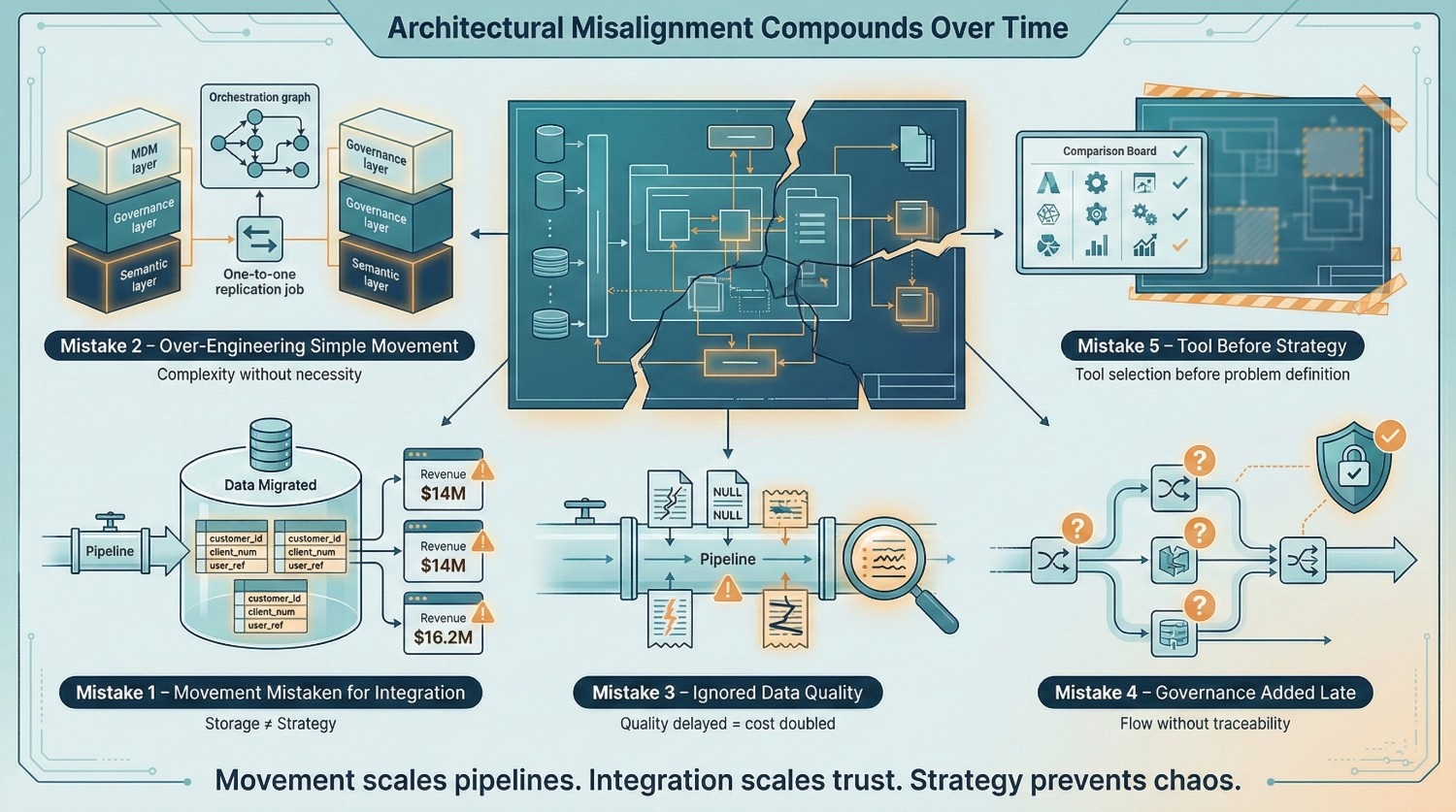

Common Mistakes and Misconceptions

Every section so far has been about understanding the right way to think about data movement and data integration. This section is about what goes wrong when you don’t.

These aren’t theoretical risks. They’re patterns that play out again and again across enterprises of every size, and they’re the exact problems that data integration consulting engagements are brought in to fix (often after significant damage has already been done).

Treating Data Movement as Data Integration

This is the single most expensive misconception in enterprise data.

The Trap

“We migrated all the data to Snowflake. We’re done.”

No. You moved data. You didn’t integrate it. The data is sitting in your warehouse, but it’s still in its original, incompatible formats. Different naming conventions. Different definitions. Different granularity. Nobody reconciled anything. Nobody mapped anything. Nobody resolved duplicates.

You don’t have a unified dataset. You have a data lake wearing a warehouse costume.

The Consequences

- Conflicting reports: Finance says Q3 revenue was $14M. Sales says $16.2M. Both pulled from the “same” warehouse. Neither is wrong; they’re just querying unreconciled source data with different definitions of “revenue.”

- Eroded trust: Once leadership gets conflicting numbers twice, they stop trusting the data entirely. Decisions go back to gut instinct and spreadsheets.

- Rework and fire drills: Teams spend weeks manually reconciling data that should have been integrated from the start

The Fix

Stop treating the data warehouse as the finish line. Movement gets data to the warehouse. Integration makes it usable inside the warehouse. These are sequential steps, not the same step.

This is the most common finding in any data integration consulting assessment, and the one with the fastest ROI to fix. A warehouse full of raw tables is not a data strategy. It’s a storage strategy. Integration is what turns storage into leverage.

Over-Engineering Simple Movement Tasks

The opposite mistake is just as wasteful.

The Trap

“We need a full integration platform with MDM, data quality rules, and a governance layer… to replicate one PostgreSQL database to a read replica.”

Not every data problem requires the full integration stack. Sometimes you genuinely just need to move data, and overcomplicating a straightforward movement task creates unnecessary cost and delay.

What Over-Engineering Looks Like

- Adding transformation layers to a simple one-to-one replication job

- Building custom orchestration for something Fivetran handles out of the box

- Involving six teams and a governance review for a basic database sync

- Spending three months architecting what should have been a two-week project

The Consequences

- Slower delivery: a simple migration takes months instead of weeks

- Higher maintenance burden: unnecessary complexity means more things that can break

- Team fatigue: engineers lose trust in the data team’s ability to right-size solutions

The Fix

Match the solution to the problem. If schemas are compatible, the source is clean, and there’s one target, keep it simple. Save the heavy integration machinery for situations that actually demand it.

A good data integration consulting partner won’t push integration when movement is all you need. If they do, they’re selling, not consulting. Architecture maturity means knowing when not to integrate. Precision beats ambition in data strategy.

Ignoring Data Quality at Both Stages

The Trap

“We’ll handle data quality during integration. No need to worry about it during movement.”

This sounds reasonable. It’s not.

Why Quality Matters During Movement

Data quality is not a cleanup phase. It is a design principle that must exist at every stage of the pipeline.

- If source data is corrupted, truncated, or incomplete before extraction, you’re moving garbage

- If encoding issues, null handling, or type mismatches aren’t caught during transit, they cascade downstream

- Discovering data quality problems during integration that should have been flagged during movement doubles the debugging time

Why Quality Matters During Integration

- Deduplication, standardization, validation, and enrichment are core integration functions

- But they only work if the raw input meets a minimum quality threshold

- You can’t deduplicate records that were corrupted during extraction. You can’t standardize fields that were truncated during load.

The Fix

Implement quality checks at both stages:

| Stage | Quality Checks |
|---|---|
| Movement | Row count validation, schema drift detection, null rate monitoring, encoding verification |
| Integration | Deduplication, format standardization, business rule validation, referential integrity checks |

Garbage in, garbage out applies to the entire pipeline, not just the integration layer. Every data integration consulting roadmap worth its cost includes quality gates at every stage, not just the end.
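Movement-stage quality gates do not need heavy tooling to get started. The sketch below covers three of the checks from the table (row counts, schema drift, null rates) for a single batch; the function name, its parameters, and the 5% null-rate threshold are illustrative assumptions.

```python
def movement_checks(source_count, landed_rows, expected_columns, max_null_rate=0.05):
    """Return a list of failed checks for one transfer batch (empty list means pass)."""
    failures = []

    # Row count validation: what landed should match what the source reported.
    if len(landed_rows) != source_count:
        failures.append(
            f"row count mismatch: expected {source_count}, got {len(landed_rows)}"
        )

    # Schema drift detection: columns added or dropped since the last agreed schema.
    seen_columns = {col for row in landed_rows for col in row}
    added = seen_columns - set(expected_columns)
    missing = set(expected_columns) - seen_columns
    if added or missing:
        failures.append(f"schema drift: added={sorted(added)} missing={sorted(missing)}")

    # Null rate monitoring per expected column.
    for col in expected_columns:
        nulls = sum(1 for row in landed_rows if row.get(col) is None)
        rate = nulls / len(landed_rows) if landed_rows else 0.0
        if rate > max_null_rate:
            failures.append(f"null rate {rate:.0%} on {col}")
    return failures

batch = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": None, "plan": "pro"}]
report = movement_checks(3, batch, ["id", "email"])
```

For this batch the report contains all three failures, which is the information an integration team would otherwise have to rediscover downstream.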

Neglecting Governance and Lineage

The Trap

“Let’s just get the data flowing first. We’ll add governance later.”

“Later” never comes. And by the time it does, you have:

- Dozens of pipelines with no documentation

- Transformations nobody can explain

- No record of where data came from or how it was modified

- Access controls that are either nonexistent or inconsistently applied

The Real-World Risks

- Compliance violations: A regulator asks where a specific data point originated. You can’t answer. That’s a finding.

- Security breaches: PII data was moved to a staging environment with open access. Nobody tracked it because nobody was tracking anything.

- Untraceable errors: A dashboard shows wrong numbers. Nobody knows if the issue is in the source, the extraction, the transformation, or the loading. Debugging takes weeks instead of hours.

The Fix

Governance and lineage aren’t features you bolt on at the end. They need to be embedded from day one:

- During movement, log source, destination, timestamp, row counts, and schema versions for every transfer

- During integration, track every transformation, mapping decision, and business rule applied

- Across both, enforce access controls, data classification, and retention policies

This is a non-negotiable component of any data integration consulting engagement. Governance added late becomes bureaucracy. Governance designed early becomes an accelerator. If governance isn’t in the architecture from the start, it becomes far harder to add later.
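Logging lineage during movement can start as one structured record per transfer. The sketch below captures the fields listed above (source, destination, timestamp, row count, schema version); the `TransferRecord` class, the fingerprint scheme, and the example paths are hypothetical, and a real setup would write to a catalog or lineage store rather than a Python list.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
import hashlib
import json

@dataclass(frozen=True)
class TransferRecord:
    source: str
    destination: str
    row_count: int
    schema_version: str
    transferred_at: str

def schema_fingerprint(columns):
    """Stable short hash of column names and types, used here as a schema version."""
    canonical = json.dumps(sorted(columns.items()))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

def record_transfer(source, destination, rows, columns, log):
    """Append one lineage entry for a completed transfer and return it."""
    entry = TransferRecord(
        source=source,
        destination=destination,
        row_count=len(rows),
        schema_version=schema_fingerprint(columns),
        transferred_at=datetime.now(timezone.utc).isoformat(),
    )
    log.append(asdict(entry))
    return entry

lineage_log = []
entry = record_transfer(
    "salesforce.contacts",            # hypothetical source name
    "s3://lake/raw/contacts",         # hypothetical destination path
    [{"id": 1}, {"id": 2}],
    {"id": "int", "email": "str"},
    lineage_log,
)
```

Because the fingerprint changes whenever a column is added, removed, or retyped, comparing it across transfers gives a cheap first signal that something upstream has shifted.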

Choosing Tools Before Defining Strategy

The Trap

This might be the most seductive mistake of all:

“Let’s evaluate Fivetran vs. Airbyte vs. Informatica and then figure out our data strategy.”

It sounds productive. It’s backwards.

Why This Fails

- You can’t choose the right tool if you haven’t defined the problem

- Is your challenge movement, integration, or both?

- Are you dealing with one source or fifty?

- Do you need real-time streaming or daily batch?

- Is data quality a concern? Is governance required?

Without answers to these questions, every tool evaluation is a coin flip.

What Actually Happens

- The team picks a tool based on a vendor demo, a blog post, or what a peer company uses

- Six months later, the tool doesn’t fit the actual requirements

- The team builds workarounds. Then workarounds for the workarounds.

- Eventually, someone proposes ripping it out and starting over

The Fix

Strategy before tooling. Always.

The right sequence:

- Assess: What data do you have? Where does it live? What condition is it in?

- Define: What are the business outcomes you need? Analytics? Compliance? Personalization?

- Architect: What combination of movement, integration, and governance is required?

- Then select tools, based on how well they fit the architecture you’ve designed

This is the core value proposition of data integration consulting: making sure the strategy drives the tool selection, not the other way around. Tools amplify clarity. They also amplify confusion. Without strategy, they scale chaos. The best tool for the wrong architecture is still the wrong tool.

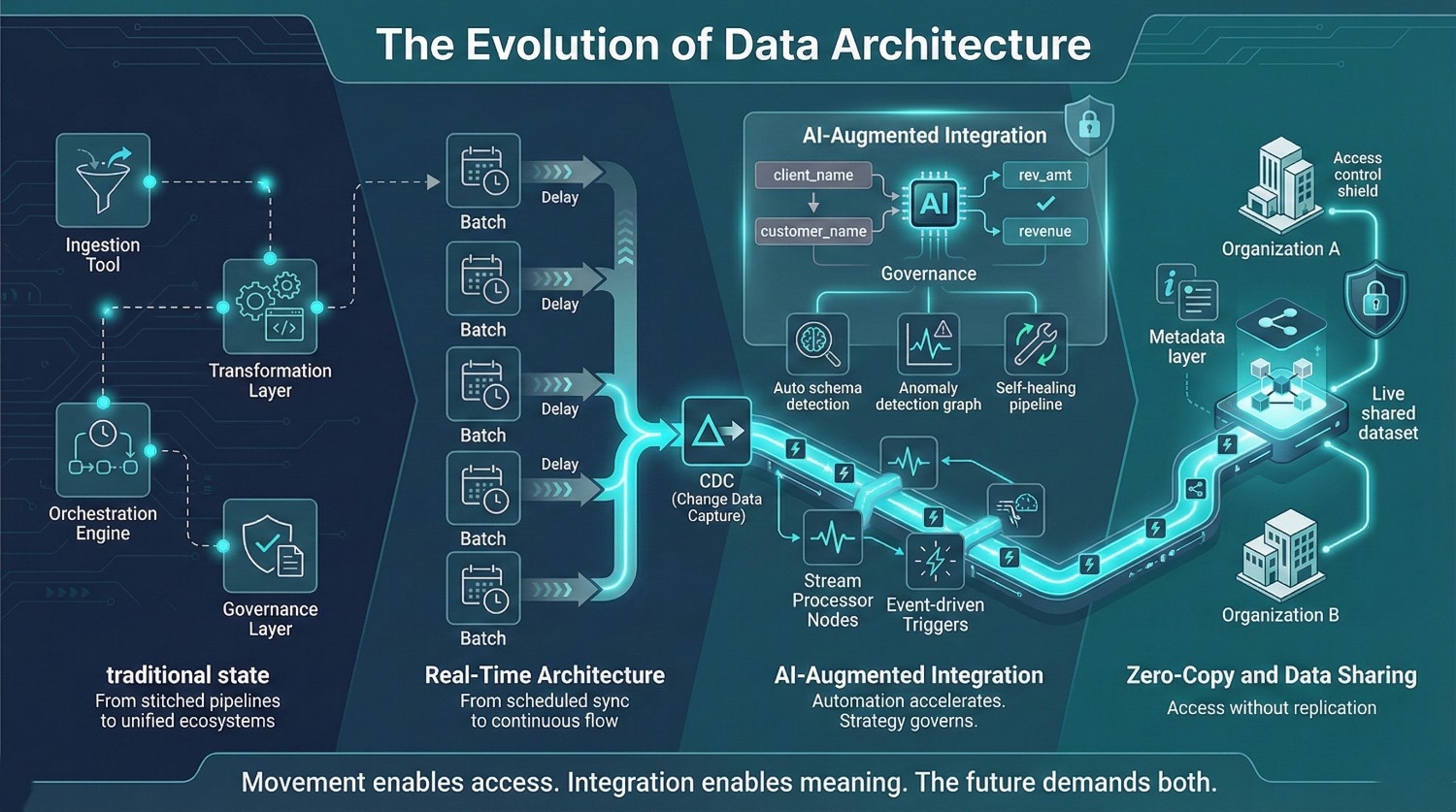

The Future of Data Movement and Data Integration

The distinction between data movement and data integration isn’t going away. But how organizations execute both is changing rapidly.

The next generation of data architecture is being shaped by platform convergence, real-time expectations, AI-driven automation, and entirely new paradigms for sharing data without moving it at all. Here’s where things are headed, and what it means for your strategy.

Convergence of Tools and Platforms

The Trend

For years, data movement and data integration lived in separate toolchains. You had Fivetran for ingestion, dbt for transformation, Informatica for integration, Airflow for orchestration, all stitched together manually.

That’s changing. The market is moving toward unified platforms that handle movement and integration within a single ecosystem.

What's Driving This

- iPaaS evolution: Platforms like MuleSoft, Boomi, and Workato are expanding from application integration into full data integration, including movement, transformation, and governance

- Data Fabric architectures: An approach that creates an intelligent, metadata-driven layer across all data sources, automating discovery, integration, and delivery regardless of where data lives

- Data Mesh: A decentralized approach where individual domains own their data as products, handling both movement and integration within their domain boundaries

What This Means in Practice

| Architecture | Movement Approach | Integration Approach | Best For |
|---|---|---|---|
| Traditional (centralized) | Separate ingestion tools | Centralized ETL/integration platform | Organizations with strong central data teams |
| Data Fabric | Automated, metadata-driven ingestion | AI-assisted mapping and virtualization | Enterprises with large, distributed data estates |
| Data Mesh | Domain-owned pipelines | Domain-owned data products with org-wide standards | Large organizations with mature domain teams |

The Consulting Angle

Convergence doesn’t mean simplicity. These unified platforms and new architectures come with their own complexity, and choosing the wrong model for your organization’s maturity level is a costly mistake.

Data integration consulting is evolving alongside these trends, helping organizations evaluate whether a centralized, fabric, or mesh approach fits their reality, not just their ambitions.

Real-Time Everything

The Shift

Batch processing isn’t dying, but it’s no longer the default assumption. The expectation across the enterprise is shifting toward real-time or near-real-time data availability:

- Marketing wants real-time personalization, not recommendations based on yesterday’s data

- Operations wants live dashboards, not reports that are 24 hours stale

- Fraud detection needs instant pattern recognition, not batch alerts after the damage is done

- Supply chain wants real-time inventory visibility, not overnight syncs

What This Means for Movement

- Streaming-first ingestion is replacing batch extraction for high-velocity data

- Tools like Apache Kafka, Amazon Kinesis, and Confluent are becoming default infrastructure, not specialty tools

- CDC (Change Data Capture) is replacing full-table replication as the standard sync mechanism
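The difference between CDC and full-table replication is easiest to see in code: instead of recopying everything, the target applies a stream of small change events. The event shape below (`op`, `key`, `row`) is a simplified assumption for illustration and does not match the exact format of any particular CDC tool.

```python
def apply_changes(replica, events):
    """Apply ordered change events (insert/update/delete) to a dict keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row (or create it).
            replica[key] = {**replica.get(key, {}), **event["row"]}
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return replica

replica = {1: {"email": "a@example.com", "plan": "free"}}
events = [
    {"op": "update", "key": 1, "row": {"plan": "pro"}},
    {"op": "insert", "key": 2, "row": {"email": "b@example.com", "plan": "free"}},
    {"op": "delete", "key": 1},
]
apply_changes(replica, events)
```

Ordering matters here: the same three events applied in a different order would leave a different replica, which is why CDC pipelines care so much about sequence guarantees.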

What This Means for Integration

- Event-driven integration: data is transformed and reconciled as events arrive, not in scheduled batches

- Stream processing frameworks (Flink, Spark Streaming, ksqlDB) enable transformation in motion, integrating data before it even lands

- The boundary between “movement” and “integration” blurs when both happen in the same streaming pipeline

The Challenge

Real-time architecture is powerful but unforgiving:

- Error handling is harder: bad data propagates instantly

- Schema evolution must be managed continuously, not during maintenance windows

- Governance and lineage tracking need to work at stream speed, not batch speed

This is an area where data integration consulting is becoming increasingly critical, because the cost of getting real-time architecture wrong is measured in minutes, not weeks. Real-time architecture magnifies both excellence and error. If your integration logic is weak, real-time only makes it fail faster.

AI and Automation

The Current State

AI isn’t replacing data engineers. But it’s dramatically accelerating the most tedious and error-prone parts of both movement and integration. AI reduces manual effort. It does not reduce architectural responsibility.

Where AI Is Already Making an Impact

Automated Schema Matching and Data Mapping

- AI models that analyze source and target schemas and suggest field mappings automatically

- Instead of manually mapping 500 fields across three systems, engineers review and approve AI-generated suggestions

- Can reduce mapping time from weeks to hours

Intelligent Data Quality Monitoring

- ML-based anomaly detection that flags data drift, unexpected nulls, volume spikes, and distribution changes, before they break downstream systems

- Replaces brittle, rule-based quality checks with adaptive monitoring that learns your data’s normal patterns
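To give a feel for "learning normal patterns," here is a deliberately simple statistical stand-in: a z-score check on daily row volumes. It is not an ML model, and the threshold of 3 standard deviations and the sample history are assumptions, but it shows the basic shift from fixed rules to baselines derived from the data itself.

```python
from statistics import mean, stdev

def is_volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it is far from the historical mean.

    A plain z-score test used as a simple stand-in for adaptive monitoring.
    """
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold

daily_rows = [1000, 1020, 980, 1010, 990]   # hypothetical recent load volumes
sudden_drop = is_volume_anomaly(daily_rows, 400)
normal_day = is_volume_anomaly(daily_rows, 1015)
```

A fixed rule such as "alert below 500 rows" would need manual tuning for every table; a baseline computed from history adapts as volumes grow.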

Self-Healing Pipelines

- Pipelines that detect failures and automatically retry, reroute, or adjust without human intervention

- Schema drift handling: when a source system adds a column, the pipeline adapts instead of crashing
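The "automatically retry" part of a self-healing pipeline is often just exponential backoff around a flaky step. The sketch below is a minimal version; the function name, the default delays, and the injectable `sleep` parameter (there so the example can run without actually waiting) are choices made for this illustration.

```python
import time

def run_with_retry(task, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call task(); on failure wait base_delay * 2**n and retry.

    Re-raises the last exception once all attempts are used up.
    """
    for attempt in range(attempts):
        try:
            return task()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)

# Hypothetical extraction step that fails twice before succeeding.
calls = {"count": 0}

def flaky_extract():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return "ok"

delays = []
result = run_with_retry(flaky_extract, sleep=delays.append)
```

Retries only help with transient failures. A step that fails the same way every time needs the alerting path, not another attempt, which is why the attempt count is capped.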

LLMs and Generative AI for Integration

- Natural language interfaces for querying data catalogs: “Show me all customer tables with email fields across our Salesforce and Shopify sources”

- AI-generated transformation code: describe the mapping logic in plain English, get a dbt model or SQL query in return

- Automated documentation: LLMs generate lineage descriptions, field definitions, and governance metadata from existing pipeline code

What's Coming Next

- End-to-end AI-driven integration workflows: from discovery through transformation to delivery, with humans approving rather than building

- Semantic understanding: AI that doesn’t just map client_name to customer_name but understands that they represent the same business entity even when naming conventions give no clue

- Continuous integration optimization: AI that monitors query patterns and automatically restructures data models for better performance

The Reality Check

AI accelerates the work. It doesn’t eliminate the need for strategy, governance, or architecture decisions. The organizations getting the most value from AI in their data stack are the ones that have their foundational integration strategy right first.

Data integration consulting is increasingly incorporating AI capabilities into its delivery, but the consulting itself remains essential for the strategic, architectural, and organizational decisions that AI can’t make.

Zero-Copy and Data Sharing

The Paradigm Shift

What if you didn’t have to move data at all?

That’s the promise of zero-copy data sharing, a set of technologies that allow organizations to share and access data across systems and organizations without physically replicating it. Eliminating movement does not eliminate meaning. Access to data is not the same as understanding it.

Key Technologies

| Technology | What It Does |
|---|---|
| Snowflake Data Sharing | Share live, governed datasets between Snowflake accounts, no copies, no ETL, no data movement |
| Delta Sharing | An open protocol for secure, real-time sharing of data in Delta Lake format, across any platform |
| Data Clean Rooms | Environments where multiple parties can analyze combined datasets without either party seeing the other's raw data, critical for privacy-sensitive use cases like advertising and healthcare |

What This Changes

- Reduces movement overhead: no need to extract, transfer, and load data that can be accessed in place

- Eliminates sync lag: consumers always see the latest data, not a stale copy

- Simplifies governance: one copy of the data means one set of access controls, one lineage trail

- Enables cross-organization integration: partners, vendors, and customers can share data without the complexity of traditional file exchanges or API integrations

What It Doesn't Change

Zero-copy sharing reduces or eliminates the movement problem. But it doesn’t eliminate the integration problem.

- Shared data still needs to be mapped to your internal models

- Semantic differences between your definitions and your partner’s still need to be reconciled

- Data quality in the shared source still needs to be validated

- Governance (who can see what, under what conditions) becomes more complex, not less

The Takeaway

Zero-copy is a transformative technology for reducing unnecessary data movement. But integration remains essential; the challenge just shifts from “How do we move and unify this data?” to “How do we make sense of data we can now access but didn’t create?”

This emerging landscape is where data integration consulting is heading, helping organizations navigate a world where data doesn’t need to move to be integrated, but still needs strategy, governance, and architecture to be useful.

Final Thoughts

Let's Bring It Home

If there’s one thing to take away from this post, it’s this:

Data movement gets data from Point A to Point B. Data integration makes that data meaningful, consistent, and usable across the entire organization.

They’re related. They’re complementary. But they are not the same thing, and treating them interchangeably is one of the most common and costly mistakes in enterprise data strategy.

The Core Distinction, One Last Time

| Distinction | Data Movement | Data Integration |
|---|---|---|
| What it does | Transports data between systems | Unifies data into a coherent, trustworthy asset |
| What it prioritizes | Speed, reliability, fidelity | Consistency, context, business value |
| Where it stops | Data arrives at the destination | Data is ready for decisions |

Why This Matters for Your Organization

- Movement is necessary: nothing happens without getting data from source to destination

- But movement is not sufficient: data sitting in a warehouse in its raw, unreconciled form isn’t an asset. It’s a liability dressed up as infrastructure.

- Integration is where business value gets unlocked: unified customer views, trustworthy reporting, compliant audit trails, AI-ready datasets

- Getting this distinction right is the foundation of every robust, scalable, and trustworthy data strategy

Organizations that understand this build architectures that work. Organizations that don’t spend years duct-taping pipelines together and wondering why nobody trusts the numbers.

What to Do Next

Before investing in another tool, another migration, or another pipeline, audit your current data workflows with this distinction in mind:

- Where are you moving data but not integrating it?

- Where are reports pulling from unreconciled sources?

- Where is “the data is in the warehouse” being mistaken for “the data is ready”?

- Where are teams building workarounds because the integration layer doesn’t exist?

These are the questions that data integration consulting starts with, and they’re questions you can start asking internally today.

Frequently Asked Questions (FAQs)

What is the difference between data movement and data integration?

Data movement is the process of transporting data from one system, location, or environment to another. The focus is purely on getting data from Point A to Point B, reliably, quickly, and intact.

Data integration goes much further. It involves combining data from multiple disparate sources into a unified, consistent, and meaningful view. This includes:

- Schema mapping

- Data transformation and enrichment

- Cleansing and deduplication

- Business rule application

- Governance and lineage tracking

In short, movement is about transport. Integration is about making data usable.

Can you have data movement without data integration?

Yes, and it happens all the time. Common examples include:

- Replicating a database for disaster recovery

- Migrating a data warehouse to the cloud with the same schema

- Streaming raw event data into a data lake for future processing

- Syncing identical environments (dev → staging → production)

In all these cases, data is moved but not transformed, reconciled, or unified. It’s still in its original form, just in a new location.

Can you have data integration without data movement?

Technically, yes, through technologies like data virtualization and zero-copy data sharing. These approaches create a unified view across sources without physically moving or replicating data. Tools like Denodo, Snowflake Data Sharing, and Delta Sharing enable this.

However, most real-world integration workflows still involve some degree of movement, even if it’s just extracting data into a staging layer for transformation.

Why do organizations confuse data movement with data integration?

Several reasons:

- Vendor marketing: many tools advertise “integration” when they really only handle ingestion and replication

- Visible progress bias: moving data feels like a tangible accomplishment, so teams stop there

- Terminology overlap: both concepts involve pipelines, connectors, and data flows, which makes them easy to conflate

- Lack of strategic framing: without a clear data strategy, teams default to solving the most obvious problem (transport) and miss the harder one (unification)

This confusion is one of the top reasons organizations invest in data integration consulting, to get the diagnosis right before committing to a solution.

What is data integration consulting?

Data integration consulting is a specialized discipline focused on helping organizations design and implement strategies for unifying data across disparate systems. A typical engagement includes:

- Assessment, auditing existing data sources, pipelines, and quality issues

- Architecture design, defining the right combination of movement, transformation, and integration approaches

- Tool selection, recommending platforms based on actual requirements, not vendor hype

- Implementation support, building and deploying integration workflows

- Governance framework, establishing lineage, access controls, and quality standards

The goal isn’t just to move data; it’s to turn fragmented data into a trusted, usable business asset.

There are clear signals that indicate it’s time:

- Multiple source systems with incompatible schemas and definitions

- Conflicting reports: different teams get different numbers from the “same” data

- Post-merger or acquisition: two companies’ data ecosystems need to be unified

- Compliance pressure: regulators are asking for reconciled, auditable data you can’t produce

- AI/ML ambitions: models need clean, unified training data that doesn’t exist yet

- Failed migrations: data was moved to the cloud but nobody can use it effectively

If any of these sound familiar, data integration consulting isn’t a nice-to-have; it’s the fastest path to fixing the root problem.

It’s both, depending on what you’re doing with it.

- The Extract and Load stages are data movement: getting data out of sources and into a target

- The Transform stage can be either:

  - Movement-level transformation: simple format conversion, compression, or serialization

  - Integration-level transformation: schema mapping, business rule application, cleansing, enrichment, and entity resolution

If your ETL pipeline just replicates data with minor formatting changes, that’s movement. If it’s mapping fields across five source systems, deduplicating records, and applying business logic, that’s integration.
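To make the two kinds of Transform step concrete, here is a minimal sketch in Python. The source systems, field names, and country-code mapping are all hypothetical, chosen only for illustration; a real pipeline would use whatever matching keys and business rules the organization has agreed on.

```python
import json

# Hypothetical records from two source systems (field names are illustrative).
crm_rows = [
    {"cust_id": "C-001", "email": "Ana@Example.com ", "country": "US"},
    {"cust_id": "C-002", "email": "bo@example.com", "country": "DE"},
]
billing_rows = [
    {"customer_email": "ana@example.com", "ctry": "USA", "mrr_cents": 4900},
    {"customer_email": "cy@example.com", "ctry": "FRA", "mrr_cents": 9900},
]

# Assumed mapping from billing's 3-letter codes to the CRM's 2-letter codes.
COUNTRY_MAP = {"USA": "US", "DEU": "DE", "FRA": "FR"}


def movement_transform(rows):
    """Movement-level: change the serialization, leave the meaning untouched."""
    return [json.dumps(row, sort_keys=True) for row in rows]


def normalize_email(value):
    """Cleansing step: trim whitespace and lowercase the matching key."""
    return value.strip().lower()


def integration_transform(crm, billing):
    """Integration-level: map schemas, cleanse keys, and merge entities."""
    unified = {}
    for row in crm:
        key = normalize_email(row["email"])
        unified[key] = {
            "email": key,
            "country": row["country"],
            "crm_id": row["cust_id"],
            "mrr_cents": None,
        }
    for row in billing:
        key = normalize_email(row["customer_email"])
        # Create a record only if the CRM did not already supply one.
        record = unified.setdefault(key, {
            "email": key,
            "country": COUNTRY_MAP.get(row["ctry"]),
            "crm_id": None,
            "mrr_cents": None,
        })
        record["mrr_cents"] = row["mrr_cents"]
    return sorted(unified.values(), key=lambda r: r["email"])


moved = movement_transform(crm_rows + billing_rows)
integrated = integration_transform(crm_rows, billing_rows)

print(len(moved))       # 4 rows in, 4 rows out, just re-serialized
print(len(integrated))  # 3 unified customers from 4 source rows
```

The movement path returns as many rows as it received, only in a different format. The integration path has to make decisions: which field is the matching key, how to clean it, and which system wins when both describe the same customer.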

The short answer: your data exists in one place but doesn’t work as one system.

The long answer is that you’ll likely experience:

- Dashboards showing conflicting numbers across departments

- Analysts spending 60%+ of their time cleaning and reconciling data manually

- AI/ML models trained on inconsistent data producing unreliable outputs

- Compliance teams unable to produce audit-ready reports

- A growing distrust of data across the organization

Data movement without integration is like stocking a library with books in 15 different languages, with no catalog and no organization system. Everything is technically there. Nobody can find or use anything.
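The conflicting-dashboard symptom is easy to reproduce. In the sketch below, the order data, the two team definitions, and the cutoff date are invented for illustration. Both teams query the same rows that were moved into one place, yet they report different “active customer” counts because nothing reconciled the definition.

```python
from datetime import date

# The same moved data, visible to every team (values are made up).
orders = [
    {"customer": "a", "status": "paid", "day": date(2025, 1, 5)},
    {"customer": "b", "status": "refunded", "day": date(2025, 1, 9)},
    {"customer": "c", "status": "paid", "day": date(2024, 11, 20)},
    {"customer": "a", "status": "paid", "day": date(2025, 1, 20)},
]


def sales_active_customers(rows):
    # Assumed Sales definition: anyone who ever placed an order.
    return len({r["customer"] for r in rows})


def finance_active_customers(rows, since):
    # Assumed Finance definition: customers with a paid order on or after `since`.
    return len({
        r["customer"]
        for r in rows
        if r["status"] == "paid" and r["day"] >= since
    })


sales_count = sales_active_customers(orders)
finance_count = finance_active_customers(orders, since=date(2025, 1, 1))

print(sales_count)    # 3
print(finance_count)  # 1
```

Neither number is wrong on its own terms. Integration work, in this case, means agreeing on one definition and publishing it as a shared metric instead of letting each dashboard re-derive it.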

AI is transforming the most time-consuming parts of integration:

- Automated schema matching: AI suggests field mappings across sources, which can cut manual effort from weeks to hours

- Intelligent quality monitoring: ML models detect anomalies and data drift before they break pipelines

- Self-healing pipelines: systems that automatically adapt to schema changes instead of crashing

- LLM-powered data catalogs: natural language search across your entire data estate

- AI-generated transformation code: describe what you need in plain English, get working dbt models or SQL in return

But AI doesn’t replace the need for strategy, governance, or architecture decisions. It accelerates execution, not planning. The organizations getting the most value are the ones that pair AI tooling with solid data integration consulting foundations.
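As a rough illustration of the “suggest, then review” workflow behind automated schema matching, the sketch below proposes field mappings using plain string similarity from Python’s standard `difflib` module. This is a deliberately simple stand-in, not an AI model. The field names, the `suggest_mappings` helper, and the 0.6 threshold are assumptions made for the example.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Strip separators and case so 'first_name' and 'firstname' compare equal."""
    return name.lower().replace("_", "").replace("-", "")


def suggest_mappings(source_fields, target_fields, threshold=0.6):
    """Suggest the most similar target field for each source field.

    Returns None for a field when no candidate reaches the threshold,
    which flags it for human review.
    """
    suggestions = {}
    for src in source_fields:
        scored = [
            (SequenceMatcher(None, normalize(src), normalize(tgt)).ratio(), tgt)
            for tgt in target_fields
        ]
        score, best = max(scored)
        suggestions[src] = best if score >= threshold else None
    return suggestions


# Hypothetical field lists from a CRM export and a warehouse table.
crm_fields = ["cust_email", "first_name", "zip"]
warehouse_fields = ["customer_email", "firstname", "postal_code"]

mappings = suggest_mappings(crm_fields, warehouse_fields)
for src, tgt in mappings.items():
    print(src, "->", tgt)
```

Here `cust_email` and `first_name` find a match, while `zip` gets no suggestion because it shares almost no characters with `postal_code`. That gap is where semantic models, sample-value comparison, or a person who knows the data has to step in, and it is why the mapping output is best treated as a draft for review, not a finished answer.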